ISO/IEC/IEEE DIS 29119-4

Software and systems engineering -- Software testing

Ingénierie du logiciel et des systèmes -- Essais du logiciel

DRAFT INTERNATIONAL STANDARD

ISO/IEC/IEEE/DIS 29119-4

ISO/IEC JTC 1/SC 7 Secretariat: BIS

Voting begins on: 2020-07-10

Voting terminates on: 2020-10-02

Software and systems engineering — Software testing —

Part 4:

Test techniques

Ingénierie du logiciel et des systèmes — Essais du logiciel —

Partie 4: Techniques d'essai

ICS: 35.080

THIS DOCUMENT IS A DRAFT CIRCULATED FOR COMMENT AND APPROVAL. IT IS THEREFORE SUBJECT TO CHANGE AND MAY NOT BE REFERRED TO AS AN INTERNATIONAL STANDARD UNTIL PUBLISHED AS SUCH.

IN ADDITION TO THEIR EVALUATION AS BEING ACCEPTABLE FOR INDUSTRIAL, TECHNOLOGICAL, COMMERCIAL AND USER PURPOSES, DRAFT INTERNATIONAL STANDARDS MAY ON OCCASION HAVE TO BE CONSIDERED IN THE LIGHT OF THEIR POTENTIAL TO BECOME STANDARDS TO WHICH REFERENCE MAY BE MADE IN NATIONAL REGULATIONS.

RECIPIENTS OF THIS DRAFT ARE INVITED TO SUBMIT, WITH THEIR COMMENTS, NOTIFICATION OF ANY RELEVANT PATENT RIGHTS OF WHICH THEY ARE AWARE AND TO PROVIDE SUPPORTING DOCUMENTATION.

This document is circulated as received from the committee secretariat.

Reference number ISO/IEC/IEEE/DIS 29119-4:2020(E)

ISO/IEC 2020

IEEE 2020

COPYRIGHT PROTECTED DOCUMENT

© ISO/IEC 2020

© IEEE 2020

All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO or IEEE at the respective address below or ISO’s member body in the country of the requester.

ISO copyright office
CP 401 • Ch. de Blandonnet 8
CH-1214 Vernier, Geneva
Phone: +41 22 749 01 11
Fax: +41 22 749 09 47
Email: copyright@iso.org
Website: www.iso.org

Institute of Electrical and Electronics Engineers, Inc
3 Park Avenue, New York
NY 10016-5997, USA
Email: stds.ipr@ieee.org
Website: www.ieee.org

Published in Switzerland

Contents

Foreword

Introduction

1 Scope

2 Normative References

3 Terms and Definitions

4 Conformance

4.1 Intended Usage

4.2 Full Conformance

4.3 Tailored Conformance

5 Test Design Techniques

5.1 Overview

5.2 Specification-Based Test Design Techniques

5.2.1 Equivalence Partitioning

5.2.2 Classification Tree Method

5.2.3 Boundary Value Analysis

5.2.4 Syntax Testing

5.2.5 Combinatorial Test Design Techniques

5.2.6 Decision Table Testing

5.2.7 Cause-Effect Graphing

5.2.8 State Transition Testing

5.2.9 Scenario Testing

5.2.10 Random Testing

5.2.11 Metamorphic Testing

5.2.12 Requirements-Based Testing

5.3 Structure-Based Test Design Techniques

5.3.1 Statement Testing

5.3.2 Branch Testing

5.3.3 Decision Testing

5.3.4 Branch Condition Testing

5.3.5 Branch Condition Combination Testing

5.3.6 Modified Condition/Decision Coverage (MCDC) Testing

5.3.7 Data Flow Testing

5.4 Experience-Based Test Design Techniques

5.4.1 Error Guessing

6 Test Coverage Measurement

6.1 Overview

6.2 Test Measurement for Specification-Based Test Design Techniques

6.2.1 Equivalence Partition Coverage

6.2.2 Classification Tree Method Coverage

6.2.3 Boundary Value Analysis Coverage

6.2.4 Syntax Testing Coverage

6.2.5 Combinatorial Test Design Technique Coverage

6.2.6 Decision Table Testing Coverage

6.2.7 Cause-Effect Graphing Coverage

6.2.8 State Transition Testing Coverage

6.2.9 Scenario Testing Coverage

6.2.10 Random Testing Coverage

6.2.11 Metamorphic Testing Coverage

6.2.12 Requirements-Based Testing Coverage

6.3 Test Measurement for Structure-Based Test Design Techniques

6.3.1 Statement Testing Coverage

6.3.2 Branch Testing Coverage

6.3.3 Decision Testing Coverage

6.3.4 Branch Condition Testing Coverage

6.3.5 Branch Condition Combination Testing Coverage

6.3.6 Modified Condition Decision (MCDC) Testing Coverage

6.3.7 Data Flow Testing Coverage

6.4 Test Measurement for Experience-Based Testing Design Techniques

6.4.1 Error Guessing Coverage

Annex A (informative) Testing Quality Characteristics

Annex B (informative) Guidelines and Examples for the Application of Specification-Based Test Design Techniques

Annex C (informative) Guidelines and Examples for the Application of Structure-Based Test Design Techniques

Annex D (informative) Guidelines and Examples for the Application of Experience-Based Test Design Techniques

Annex E (informative) Guidelines and Examples for the Application of Grey-Box Test Design Techniques

Annex F (informative) Test Design Technique Effectiveness

Annex G (informative) ISO/IEC/IEEE 29119-4 and BS 7925-2 Test Design Technique Alignment

Annex H (informative) Test Models

Bibliography

Foreword

ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission) form the specialized system for worldwide standardization. National bodies that are members of ISO or IEC participate in the development of International Standards through technical committees established by the respective organization to deal with particular fields of technical activity. ISO and IEC technical committees collaborate in fields of mutual interest. Other international organizations, governmental and non-governmental, in liaison with ISO and IEC, also take part in the work. In the field of information technology, ISO and IEC have established a joint technical committee, ISO/IEC JTC 1.

The procedures used to develop this document and those intended for its further maintenance are described in the ISO/IEC Directives, Part 1. In particular, the different approval criteria needed for the different types of document should be noted. This document was drafted in accordance with the editorial rules of the ISO/IEC Directives, Part 2 (see www.iso.org/directives).

Attention is drawn to the possibility that some of the elements of this document may be the subject of patent rights. ISO and IEC shall not be held responsible for identifying any or all such patent rights. Details of any patent rights identified during the development of the document will be in the Introduction and/or on the ISO list of patent declarations received (see www.iso.org/patents).

Any trade name used in this document is information given for the convenience of users and does not constitute an endorsement.

For an explanation of the meaning of ISO-specific terms and expressions related to conformity assessment, as well as information about ISO's adherence to the WTO principles in the Technical Barriers to Trade (TBT), see the following URL: Foreword - Supplementary information

The committee responsible for this document is ISO/IEC JTC 1, Information technology, SC 7, Software and Systems Engineering.

ISO/IEC/IEEE 29119 consists of the following standards, under the general title Software and Systems Engineering — Software Testing:

— Part 1: Concepts and definitions

— Part 2: Test processes

— Part 3: Test documentation

— Part 4: Test techniques

— Part 5: Keyword-driven testing

This is the second version of this standard. The main difference between the first version and the second version is that the test techniques in this standard are defined using a new form of the Test Design and Implementation Process from ISO/IEC/IEEE 29119-2. In the first version, this process was based on the use of test conditions. Feedback on use of the standard highlighted a problem with users’ understanding of test conditions and their use for deriving test cases. This second version has replaced the use of test conditions with test models. Annex H provides more detail on this change and the following Introduction describes the new process.

Introduction

The purpose of this document is to provide an International Standard that defines software test design

techniques (also known as test case design techniques or test methods) that can be used within the

test design and implementation process that is defined in ISO/IEC/IEEE 29119-2. This document does

not describe a process for test design and implementation; instead, it describes a set of techniques that

can be used within the test design and implementation process defined in ISO/IEC/IEEE 29119-2. The

intent is to describe a series of techniques that have wide acceptance in the software testing industry.

This standard is originally based on the British standard, BS 7925-2.The test design techniques presented in this document can be used to derive test cases that, when

executed, generate evidence that test item requirements have been met or that defects are present in

a test item (i.e. that requirements have not been met). Risk-based testing could be used to determine

the set of techniques that are applicable in specific situations (risk-based testing is covered in

ISO/IEC/IEEE 29119-1 and ISO/IEC/IEEE 29119-2).NOTE A “test item” is a work product that is being tested (see ISO/IEC/IEEE 29119-1).

EXAMPLE 1 “Test items” include systems, software items, objects, classes, requirements documents, design

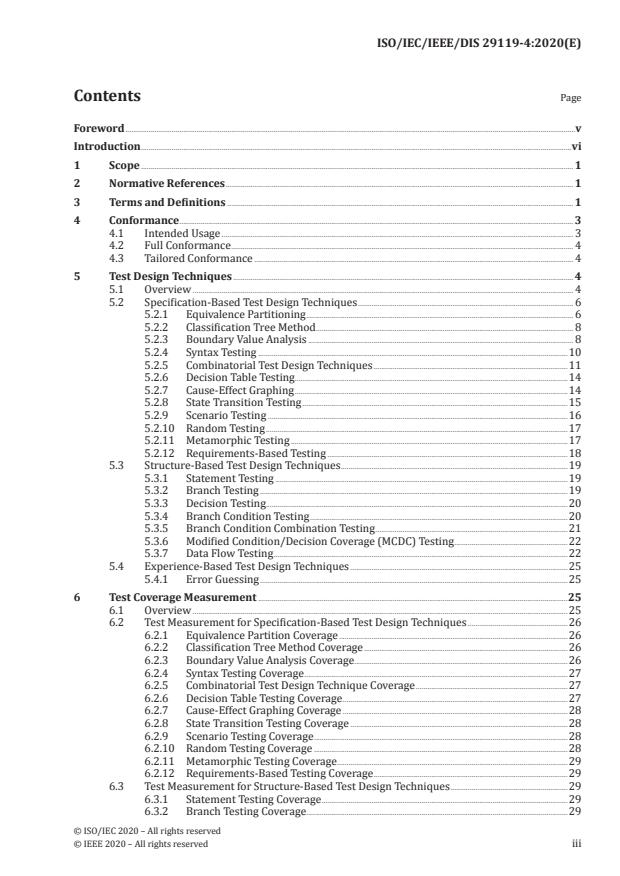

specifications, and user guides.Each technique follows the test design and implementation process that is defined in

ISO/IEC/IEEE 29119-2 and shown in Figure 1.Of the activities in this process, this standard provides guidance on how to implement the following

activities in detail for each technique that is described:— Create Test Model (TD1);

— Identify Test Coverage Items (TD2);

— Derive Test Cases (TD3).

A test model represents testable aspects of a test item, such as a function, transaction, feature, quality

attribute, or structural element identified as a basis for testing. The test model reflects the required

test completion criterion in the test strategy.EXAMPLE 2 If a test completion criterion for state transition testing was identified that required coverage of

all states then the test model would show the states the test item can be in.Test coverage items are attributes of the test model that can be covered during testing. A single test

model will typically be the basis for several test coverage items.A test case is a set of preconditions, inputs (including actions, where applicable), and expected results,

developed to determine whether or not the covered part of the test item has been implemented

correctly.Specific (normative) guidance on how to implement the Create Test Procedures activity (TD4) in the

test design & implementation process of ISO/IEC/IEEE 29119-2 is not included in Clauses 5 or 6 of this

document because the process is the same for all techniques.© ISO/IEC 2020 – All rights reserved
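As an informal illustration of activities TD1 to TD3, the following Python sketch applies them to the all-states criterion mentioned in EXAMPLE 2. It is not part of the standard: the media-player state model, the `CaseSpec` class and the helper functions are hypothetical names chosen only for this sketch.

```python
from dataclasses import dataclass

# TD1 - Create Test Model: a hypothetical state model of a media player.
# Keys are (current state, event); values are the resulting state.
TRANSITIONS = {
    ("stopped", "play"): "playing",
    ("playing", "pause"): "paused",
    ("paused", "play"): "playing",
    ("playing", "stop"): "stopped",
    ("paused", "stop"): "stopped",
}


def coverage_items(transitions):
    """TD2 - Identify Test Coverage Items: under an all-states criterion,
    every state that appears in the model is one coverage item."""
    states = set()
    for (source, _event), target in transitions.items():
        states.update((source, target))
    return states


@dataclass
class CaseSpec:
    """TD3 - Derive Test Cases: precondition, inputs and expected result."""
    precondition: str      # state the test item starts in
    inputs: list           # events applied in order
    expected_result: str   # state expected after the last event


def states_visited(case, transitions):
    """Walk the model (not the real test item) to see which coverage
    items a test case exercises."""
    state = case.precondition
    visited = [state]
    for event in case.inputs:
        state = transitions[(state, event)]
        visited.append(state)
    return visited


case = CaseSpec(precondition="stopped", inputs=["play", "pause"],
                expected_result="paused")
visited = states_visited(case, TRANSITIONS)
covered = coverage_items(TRANSITIONS) & set(visited)
coverage = len(covered) / len(coverage_items(TRANSITIONS))
```

In this sketch a single test case visits all three states, so the all-states coverage computed from the model is 100 %. A different completion criterion (for example, all transitions) would yield different coverage items from the same model and would need further test cases.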

Figure 1 — ISO/IEC/IEEE 29119-2 Test Design and Implementation Process

ISO/IEC 25010 defines eight quality characteristics (including functionality) that can be used to identify types of testing that may be applicable for testing a specific test item. Annex A provides example mappings of test design techniques that apply to testing quality characteristics defined in ISO/IEC 25010.

Experience-based testing practices like exploratory testing and other test practices such as model-based testing are not defined in this document because this document only describes techniques for designing test cases. Test practices such as exploratory testing, which could use the test techniques defined in this document, are described in ISO/IEC/IEEE 29119-1.

Templates and examples of test documentation that are produced during the testing process are defined in ISO/IEC/IEEE 29119-3 Test Documentation. The test techniques in this document do not describe how to document test cases (e.g. they do not include information or guidance on assigning unique identifiers, test case descriptions, priorities, traceability or pre-conditions to test cases). Information on how to document test cases can be found in ISO/IEC/IEEE 29119-3.

This document aims to provide stakeholders with the ability to design test cases for software testing in any organization.

Software and systems engineering — Software testing —

Part 4:

Test techniques

1 Scope

This document defines test design techniques that can be used during the test design and implementation process that is defined in ISO/IEC/IEEE 29119-2.

Each technique follows the test design and implementation process that is defined in ISO/IEC/IEEE 29119-2 and shown in Figure 1. This document is intended for, but not limited to, testers, test managers, and developers, particularly those responsible for managing and implementing software testing.

2 Normative References

The following document, in whole or in part, is normatively referenced in this document and is indispensable for its application. The latest edition of the referenced document (including any amendments) applies.

ISO/IEC/IEEE 29119-2, Software and systems engineering — Software testing — Part 2: Test processes

NOTE Other International Standards useful for the implementation and interpretation of this document are listed in the bibliography.

3 Terms and Definitions

For the purposes of this document, the terms and definitions given in ISO/IEC/IEEE 24765 and the following apply.

NOTE Use of the terminology in this document is for ease of reference and is not mandatory for conformance with this document. The following terms and definitions are provided to assist with the understanding and readability of this document. Only terms critical to the understanding of this document are included. This clause is not intended to provide a complete list of testing terms. The Systems and Software Engineering Vocabulary ISO/IEC/IEEE 24765 can be referenced for terms not defined in this clause. This source is available at the following web site: http://www.computer.org/sevocab. All terms defined in this clause are also intentionally included in ISO/IEC/IEEE 29119-1, as that international standard includes all terms that are used in parts 1 to 4 of ISO/IEC/IEEE 29119.

3.1

Backus-Naur Form

formal meta-language used for defining the syntax of a language in a textual format

3.2

base choice

base value

input parameter value used in ‘base choice testing’ that is normally selected based on being a representative or typical value for the parameter

3.3

c-use

computation data use

use of the value of a variable in any type of statement

3.4

condition

Boolean expression containing no Boolean operators

EXAMPLE “A < B” is a condition but “A and B” is not.
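The distinction in the example above can be made concrete with a small Python sketch. It is only an illustration, assuming that Python's `and`, `or` and `not` play the role of the Boolean operators; the helper name `atomic_conditions` is hypothetical and does not come from this document. It splits a decision into the conditions it contains, using the standard `ast` module (Python 3.9 or later for `ast.unparse`).

```python
import ast


def atomic_conditions(expression: str) -> list[str]:
    """Return the conditions (operands free of Boolean operators)
    that make up a Boolean expression written in Python syntax."""

    def walk(node):
        if isinstance(node, ast.BoolOp):            # "and" / "or"
            for value in node.values:
                yield from walk(value)
        elif isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.Not):
            yield from walk(node.operand)           # "not"
        else:
            yield ast.unparse(node)                 # a single condition

    return list(walk(ast.parse(expression, mode="eval").body))


single = atomic_conditions("A < B")        # already a condition
compound = atomic_conditions("A and B")    # two conditions joined by "and"
```

Here `"A < B"` comes back unchanged because it contains no Boolean operator, whereas `"A and B"` is split into the two conditions `A` and `B`.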

3.5

control flow

sequence in which operations are performed during the execution of a test item

3.6

control flow sub-path

sequence of executable statements within a test item

3.7

data definition

variable definition

statement where a variable is assigned a value

3.8

data definition c-use pair

data definition and subsequent computation data use, where the data use uses the value defined in the data definition

3.9

data definition p-use pair

data definition and subsequent predicate data use, where the data use uses the value defined in the data definition

3.10

data use

executable statement where the value of a variable is accessed

3.11

decision outcome

result of a decision that determines the branch to be executed

3.12

decision rule

combination of conditions (also known as causes) and actions (also known as effects) that produce a specific outcome in decision table testing and cause-effect graphing

3.13

definition-use pair

data definition-use pair

data definition and subsequent predicate or computation data use, where the data use uses the value defined in the data definition

3.14

entry point

point in a test item at which execution of the test item can begin

EXAMPLE An entry point is an executable statement within a test item that could be selected by an external process as the starting point for one or more paths through the test item. It is most commonly the first executable statement within the test item.

3.15

executable statement

statement which, when compiled, is translated into object code, which will be executed procedurally when the test item is running and may perform an action on program data

3.16

exit point

last executable statement within a test item

EXAMPLE An exit point is a terminal point of a path through a test item, being an executable statement within the test item which either terminates the test item, or returns control to an external process. This is most commonly the last executable statement within the test item.

3.17

p-use

predicate data use

data use associated with the decision outcome of the predicate portion of a decision statement
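The data flow terms defined in this clause (data definition, c-use, p-use and definition-use pair) can be illustrated with a short Python sketch. It is an informal aid rather than part of the standard: the `Stmt` record, the `def_use_pairs` helper and the four-statement path are hypothetical, and the sketch pairs each use with the most recent definition on one given path only.

```python
from dataclasses import dataclass, field


@dataclass
class Stmt:
    label: str
    defs: set = field(default_factory=set)     # variables assigned a value
    c_uses: set = field(default_factory=set)   # computation data uses
    p_uses: set = field(default_factory=set)   # predicate data uses


def def_use_pairs(path):
    """Return (variable, definition, use, kind) tuples for one path."""
    last_def = {}
    pairs = []
    for stmt in path:
        # Uses read the value defined earlier on the path, so they are
        # paired before this statement's own definitions are recorded.
        for kind, used in (("c-use", stmt.c_uses), ("p-use", stmt.p_uses)):
            for var in sorted(used):
                if var in last_def:
                    pairs.append((var, last_def[var], stmt.label, kind))
        for var in stmt.defs:
            last_def[var] = stmt.label
    return pairs


# One path through a hypothetical test item:
#   s0: n is bound at the entry point
#   s1: total = 0
#   s2: if n > 0:            (predicate)
#   s3:     total = total + n
#   s4: return total
PATH = [
    Stmt("s0", defs={"n"}),
    Stmt("s1", defs={"total"}),
    Stmt("s2", p_uses={"n"}),
    Stmt("s3", defs={"total"}, c_uses={"total", "n"}),
    Stmt("s4", c_uses={"total"}),
]

pairs = def_use_pairs(PATH)
```

On this path the sketch finds one data definition p-use pair (`n` defined at `s0`, used in the predicate at `s2`) and three data definition c-use pairs. Note that the use of `total` at `s4` pairs with the redefinition at `s3`, not with the original definition at `s1`.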

3.18

P-V pair

parameter-value pair

combination of a test item parameter with a value assigned to that parameter, used as a test coverage item in combinatorial test design techniques

3.19

path

sequence of executable statements of a test item
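To make the P-V pair (3.18) and base choice (3.2) definitions more tangible, the following Python sketch enumerates P-V pairs for two parameters and derives test cases by varying one parameter at a time away from the base choice. The parameter names, values and helper functions are assumptions made for this illustration only and are not taken from the standard.

```python
# Hypothetical test item parameters and their values.
PARAMETERS = {
    "browser": ["chrome", "firefox", "safari"],
    "os": ["linux", "windows"],
}

# Base choice: a representative or typical value for each parameter.
BASE_CHOICE = {"browser": "chrome", "os": "linux"}


def pv_pairs(parameters):
    """Every parameter combined with each of its values is one P-V pair."""
    return [(name, value)
            for name, values in parameters.items()
            for value in values]


def base_choice_cases(parameters, base):
    """Start from the base choice, then change one parameter at a time
    to each of its non-base values."""
    cases = [dict(base)]
    for name, values in parameters.items():
        for value in values:
            if value != base[name]:
                case = dict(base)
                case[name] = value
                cases.append(case)
    return cases


pairs = pv_pairs(PARAMETERS)
cases = base_choice_cases(PARAMETERS, BASE_CHOICE)
```

With three browser values and two operating system values there are five P-V pairs, and the sketch produces four test cases that between them include every pair at least once.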

3.20

predicate

logical expression which evaluates to TRUE or FALSE to direct the execution path in code

3.21

sub-path

path that is part of a larger path

3.22

test model

representation of an aspect of the test item that is the focus of testing

EXAMPLE 1 Requirements statements, equivalence partitions, state transition diagram, use case description, decision table, input syntax description, source code, control flow graph, parameters & values, classification tree, natural language.

EXAMPLE 2 The test model and the required test coverage are used to identify test coverage items.

EXAMPLE 3 A separate test model may be required for each different type of required test coverage included in the test completion criteria.

EXAMPLE 4 A test model can include one or more test conditions.

EXAMPLE 5 Test models are commonly used to support test design (e.g. they are used to support test design in ISO/IEC/IEEE 29119-4, and they are used in model-based testing). Other types of models exist to support other aspects of testing, such as test environment models, test maturity models and test architecture models.

4 Conformance

4.1 Intended Usage

The requirements in this document are contained in Clauses 5 and 6. It is recognized that particular projects or organizations will not need to use all of the techniques defined by this standard. Implementation of ISO/IEC/IEEE 29119-2 involves using a risk-based approach to selecting a subset of techniques suitable for a given project or organization. There are two ways that an organization or individual can claim conformance to the provisions of this standard: full conformance and tailored conformance.

The organization shall assert whether it is claiming full or tailored conformance to this document.

4.2 Full Conformance

Full conformance is achieved by demonstrating that all of the requirements (i.e. ‘shall’ statements) of the chosen (non-empty) set of techniques in Clause 5 and the corresponding test coverage measurement approaches in Clause 6 have been satisfied.

EXAMPLE An organization could choose to conform only to one technique, such as boundary

...
