ISO/IEC 14496-3:1999

Information technology — Coding of audio-visual objects — Part 3: Audio

Technologies de l'information — Codage des objets audiovisuels — Partie 3: Codage audio

© ISO/IEC ISO/IEC 14496-3:1999(E)

Contents for Subpart 5

5.1 Scope .8

5.1.1 Overview of section.8

5.1.1.1 Purpose.8

5.1.1.2 Introduction to major elements .8

5.2 Normative references .8

5.3 Definitions.8

5.4 Symbols and abbreviations .13

5.4.1 Mathematical operations.13

5.4.2 Description methods.13

5.4.2.1 Bitstream syntax .13

5.4.2.2 SAOL syntax.14

5.4.2.3 SASL Syntax.14

5.5 Bitstream syntax and semantics.14

5.5.1 Introduction to bitstream syntax.14

5.5.2 Bitstream syntax.14

5.6 Object types.19

5.7 Decoding process.20

5.7.1 Introduction.20

5.7.2 Decoder configuration header.20

5.7.3 Bitstream data and sound creation .20

5.7.3.1 Relationship with systems layer .20

5.7.3.2 Bitstream data elements.20

5.7.3.3 Scheduler semantics .21

5.7.4 Conformance.25

5.8 SAOL syntax and semantics.26

5.8.1 Relationship with bitstream syntax .26

5.8.2 Lexical elements .26

5.8.2.1 Concepts.26

5.8.2.2 Identifiers .27

5.8.2.3 Numbers.27

5.8.2.4 String constants.27

5.8.2.5 Comments.27

5.8.2.6 Whitespace .28

5.8.3 Variables and values .28

5.8.4 Orchestra.28

5.8.5 Global block .29

5.8.5.1 Syntactic form .29

5.8.5.2 Global parameter.29

5.8.5.3 Global variable declaration .31

5.8.5.4 Route statement .32

5.8.5.5 Send statement.33

5.8.5.6 Sequence specification .34

5.8.6 Instrument definition . 36

5.8.6.1 Syntactic form .36

5.8.6.2 Instrument name .36

5.8.6.3 Parameter fields .36

5.8.6.4 Preset tag .36

5.8.6.5 Instrument variable declarations.37

5.8.6.6 Block of code statements.39

5.8.6.7 Expressions.46

5.8.6.8 Standard names .54

5.8.7 Opcode definition . 58

5.8.7.1 Syntactic Form .58

5.8.7.2 Rate tag .58

5.8.7.3 Opcode name.58

5.8.7.4 Formal parameter list.59

5.8.7.5 Opcode variable declarations.59

5.8.7.6 Opcode statement block .60

5.8.7.7 Opcode rate .60

5.8.8 Template declaration. 62

5.8.8.1 Syntactic form .62

5.8.8.2 Semantics .62

5.8.8.3 Template instrument definitions.62

5.8.9 Reserved words . 63

5.9 SAOL core opcode definitions and semantics . 64

5.9.1 Introduction. 64

5.9.2 Specialop type. 64

5.9.3 List of core opcodes. 65

5.9.4 Math functions . 66

5.9.4.1 Introduction .66

5.9.4.2 int .66

5.9.4.3 frac.66

5.9.4.4 dbamp.66

5.9.4.5 ampdb.66

5.9.4.6 abs .66

5.9.4.7 sgn .66

5.9.4.8 exp .66

5.9.4.9 log .67

5.9.4.10 sqrt .67

5.9.4.11 sin .67

5.9.4.12 cos .67

5.9.4.13 atan.67

5.9.4.14 pow.67

5.9.4.15 log10.68

5.9.4.16 asin .68

5.9.4.17 acos .68

5.9.4.18 ceil .68

5.9.4.19 floor .68

5.9.4.20 min.68

5.9.4.21 max .68

5.9.5 Pitch converters. 69

5.9.5.1 Introduction to pitch representations .69

5.9.5.2 gettune .69

5.9.5.3 settune.69

5.9.5.4 octpch.70

5.9.5.5 pchoct.70

5.9.5.6 cpspch.70

5.9.5.7 pchcps.70

5.9.5.8 cpsoct.71

5.9.5.9 octcps.71

5.9.5.10 midipch .71

5.9.5.11 pchmidi .71

5.9.5.12 midioct .71

5.9.5.13 octmidi .72

5.9.5.14 midicps .72

5.9.5.15 cpsmidi .72

5.9.6 Table operations .72

5.9.6.1 ftlen.72

5.9.6.2 ftloop .72

5.9.6.3 ftloopend.73

5.9.6.4 ftsr.73

5.9.6.5 ftbasecps.73

5.9.6.6 ftsetloop .73

5.9.6.7 ftsetend .73

5.9.6.8 ftsetbase.73

5.9.6.9 ftsetsr .74

5.9.6.10 tableread .74

5.9.6.11 tablewrite .74

5.9.6.12 oscil.74

5.9.6.13 loscil.75

5.9.6.14 doscil.75

5.9.6.15 koscil.76

5.9.7 Signal generators .76

5.9.7.1 kline .76

5.9.7.2 aline .77

5.9.7.3 kexpon.77

5.9.7.4 aexpon.78

5.9.7.5 kphasor .78

5.9.7.6 aphasor .79

5.9.7.7 pluck.79

5.9.7.8 buzz .80

5.9.7.9 grain.80

5.9.8 Noise generators .81

5.9.8.1 Note on noise generators and pseudo-random sequences .81

5.9.8.2 irand.82

5.9.8.3 krand.82

5.9.8.4 arand.82

5.9.8.5 ilinrand .82

5.9.8.6 klinrand .82

5.9.8.7 alinrand .83

5.9.8.8 iexprand .83

5.9.8.9 kexprand .83

5.9.8.10 aexprand .83

5.9.8.11 kpoissonrand .83

5.9.8.12 apoissonrand .84

5.9.8.13 igaussrand.84

5.9.8.14 kgaussrand.85

5.9.8.15 agaussrand.85

5.9.9 Filters .85

5.9.9.1 port .85

5.9.9.2 hipass.85

5.9.9.3 lopass.86

5.9.9.4 bandpass.86

5.9.9.5 bandstop .86

5.9.9.6 biquad.87

5.9.9.7 allpass .87

5.9.9.8 comb.87

5.9.9.9 fir.88

5.9.9.10 iir.88

5.9.9.11 firt .88

5.9.9.12 iirt.89

5.9.10 Spectral analysis. 89

5.9.10.1 fft.89

5.9.10.2 ifft.90

5.9.11 Gain control. 91

5.9.11.1 rms.91

5.9.11.2 gain.92

5.9.11.3 balance.92

5.9.11.4 compressor.93

5.9.12 Sample conversion. 95

5.9.12.1 decimate.95

5.9.12.2 upsamp .95

5.9.12.3 downsamp .96

5.9.12.4 samphold .96

5.9.12.5 sblock.96

5.9.13 Delays . 97

5.9.13.1 delay.97

5.9.13.2 delay1 .97

5.9.13.3 fracdelay .97

5.9.14 Effects . 98

5.9.14.1 reverb .98

5.9.14.2 chorus .99

5.9.14.3 flange.99

5.9.14.4 fx_speedc.99

5.9.14.5 speedt.99

5.9.15 Tempo functions. 100

5.9

...

Contents for Subpart 4

4.1 Scope.5

4.1.1 Technical Overview.5

4.1.1.1 Encoder and Decoder Block Diagrams.5

4.1.1.2 Overview of the Encoder and Decoder Tools.8

4.2 Normative References .11

4.3 GA-specific definitions .11

4.4 Syntax.13

4.4.1 GA Specific Configuration.13

4.4.1.1 Program config element .14

4.4.2 GA Bitstream Payloads.15

4.4.2.1 Payloads for the audio object types AAC_main, AAC_SSR, AAC_LC and AAC_LTP .15

4.4.2.2 Payloads for the audio object type AAC_scalable.19

4.4.2.3 Payloads for the audio object type Twin_VQ .22

4.4.2.4 Subsidiary payloads .24

4.5 General information .29

4.5.1 Decoding of the GA specific configuration .29

4.5.1.1 GA_SpecificConfig.29

4.5.1.2 Program Config Element (PCE) .29

4.5.2 Decoding of the GA bitstream payloads.31

4.5.2.1 Top Level Payloads for the audio object types AAC_main, AAC_SSR, AAC_LC and AAC_LTP .31

4.5.2.2 Payloads for the audio object type AAC_scalable.36

4.5.2.3 Decoding of an individual_channel_stream (ICS) and ics_info .53

4.5.2.4 Payloads for the audio object type TwinVQ .58

4.5.2.5 Dynamic Range Control (DRC) .61

4.5.3 Buffer requirements .64

4.5.3.1 Minimum decoder input buffer.64

4.5.3.2 Bit reservoir .64

4.5.3.3 Maximum bit rate.65

4.5.4 Tables .65

4.5.5 Figures.71

4.6 GA-Tool Descriptions .72

4.6.1 Quantization.72

4.6.1.1 Tool description.72

4.6.1.2 Definitions .72

4.6.1.3 Decoding process .72

4.6.2 Scalefactors .72

4.6.2.1 Tool description.72

4.6.2.2 Definitions .72

4.6.2.3 Decoding process .73

4.6.3 Noiseless coding .74

4.6.3.1 Tool description.74

4.6.3.2 Definitions .74

4.6.3.3 Decoding process .76

4.6.3.4 Tables .78

4.6.4 Interleaved vector quantization .79

4.6.4.1 Tool description.79

4.6.4.2 Definitions .79

4.6.4.3 Parameter settings .79

4.6.4.4 Decoding process .79

4.6.4.5 Diagrams .82

4.6.5 Frequency domain prediction .83

4.6.5.1 Tool description.83

4.6.5.2 Definitions .83

4.6.5.3 Decoding process .84

4.6.5.4 Diagrams .89

4.6.6 Long Term Prediction (LTP) .90

4.6.6.1 Tool description.90

4.6.6.2 Definitions .90

4.6.6.3 Decoding process .90

4.6.6.4 Integration of LTP with other GA tools .91

4.6.6.5 LTP in a scalable GA decoder.92

4.6.7 Joint Coding.92

4.6.7.1 M/S stereo.92

4.6.7.2 Intensity Stereo (IS).93

4.6.7.3 Coupling channel .95

4.6.8 Temporal Noise Shaping (TNS).98

4.6.8.1 Tool description.98

4.6.8.2 Definitions.98

4.6.8.3 Decoding process .98

4.6.8.4 Maximum TNS order and bandwidth.100

4.6.8.5 TNS in the scalable coder.100

4.6.9 Spectrum normalization .102

4.6.9.1 Tool description.102

4.6.9.2 Definitions.102

4.6.9.3 Decoding process .103

4.6.9.4 Diagrams .109

4.6.9.5 Tables .110

4.6.10 Filterbank and block switching.111

4.6.10.1 Tool description.111

4.6.10.2 Definitions.111

4.6.10.3 Decoding process .112

4.6.11 Gain Control.116

4.6.11.1 Tool description.116

4.6.11.2 Definitions.117

4.6.11.3 Decoding process .117

4.6.11.4 Diagrams .121

4.6.11.5 Tables .122

4.6.12 Perceptual Noise Substitution (PNS) .123

4.6.12.1 Tool description.123

4.6.12.2 Definitions.123

4.6.12.3 Decoding process .123

4.6.12.4 Integration with the intra channel prediction tools.124

4.6.12.5 Integration with other AAC tools .125

4.6.12.6 Integration into a scalable AAC-based coder (AudioObjectType AAC_scalable) .125

4.6.13 Frequency Selective Switch (FSS) Module.125

4.6.13.1 FSS in combined TwinVQ/CELP-AAC systems .125

4.6.13.2 FSS in combined mono / stereo scalable configurations .127

4.6.14 Upsampling filter tool.127

4.6.14.1 Tool description.127

4.6.14.2 Definitions .128

4.6.14.3 Decoding process .128

Annex 4.A (normative) Normative Tables .130

4.A.1 Huffman codebook tables for AAC-type noiseless coding.130

4.A.2 Window tables .143

4.A.3 Differential scalefactor to index tables .145

4.A.4 Tables for TwinVQ.146

Annex 4.B (informative) Encoder tools .163

4.B.1 Weighted interleave vector quantization .163

4.B.2 Spectrum normalization.165

4.B.3 Psychoacoustic model.169

4.B.4 Gain control.199

4.B.5 Filterbank and block switching.200

4.B.6 Frequency domain prediction .206

4.B.7 Long Term Prediction .209

4.B.8 Temporal Noise Shaping (TNS).211

4.B.9 Joint coding .213

4.B.10 Quantization.214

4.B.11 Noiseless coding .220

4.B.12 Perceptual Noise Substitution (PNS) .222

4.B.13 Random access points for GA coded bit streams (ObjectTypes 0x1 to 0x7) .223

4.B.14 Scalable AAC with core coder.223

4.B.15 Scalable controller .225

4.B.16 Features of AAC dynamic range control.225

Subpart 4: General Audio (GA) Coding: AAC/TwinVQ

4.1 Scope

The General Audio (GA) coding subpart of MPEG-4 Audio is mainly intended for generic audio coding at all but the lowest bit rates. Typically, GA coding is used for complex music material, from 6 kbit/s per channel for mono signals and from 12 kbit/s per stereo signal for stereo signals, up to broadcast-quality audio at 64 kbit/s or more per channel. MPEG-4 coded material can be represented either by a single set of data, as in MPEG-1 and MPEG-2 Audio, or by several subsets that allow decoding at different quality levels, depending on the number of subsets available at the decoder (bit rate scalability).

MPEG-2 Advanced Audio Coding (AAC) syntax, including its support for multichannel audio, is fully supported by MPEG-4 Audio GA coding, and all the features and possibilities of the MPEG-2 AAC standard also apply to MPEG-4. AAC has been tested to provide ITU-R ‘indistinguishable’ quality according to [4] at a data rate of 320 kbit/s for five full-bandwidth channels, i.e. 64 kbit/s per channel. In MPEG-4, the tools derived from MPEG-2 AAC are available together with other MPEG-4 GA coding tools that provide additional functionalities, such as bit rate scalability and improved coding efficiency at very low bit rates. Bit rate scalability is achieved either with GA coding tools alone or in combination with an external (non-GA, e.g. CELP) core coder.

MPEG-4 GA coding is not restricted to a few fixed bit rates; it supports a wide range of bit rates as well as variable-rate coding. Efficient mono, stereo and multichannel coding is possible using extended tools derived from MPEG-2 AAC. In addition, this subpart provides extensions to this tool set that allow mono/stereo scalability, where a mono signal can be extracted by decoding only a subset of the encoded stereo stream.

4.1.1 Technical Overview

4.1.1.1 Encoder and Decoder Block Diagrams

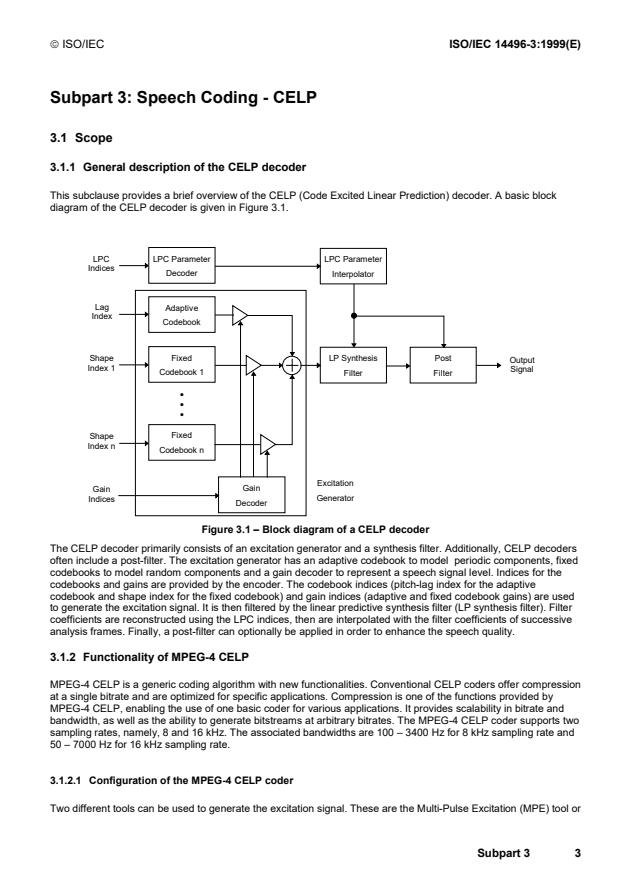

The block diagrams of the GA encoder and decoder reflect the structure of MPEG-4 GA coding. In general, there are two groups of tools: the MPEG-2 AAC related tools, some of them with MPEG-4 add-ons, and the tools related to TwinVQ quantization and coding. TwinVQ is an alternative to the AAC-type quantization module and is based on interleaved vector quantization and LPC (Linear Predictive Coding) spectral estimation. It operates from 6 kbit/s per channel and is recommended for use below 16 kbit/s per channel at a constant bit rate.
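The idea behind interleaving can be illustrated with a small sketch. It assumes a simple round-robin rule in which spectral coefficient k is assigned to subvector k mod n_sub, so that each subvector samples the whole spectrum. The function names and the rule itself are illustrative assumptions, not the normative TwinVQ interleaving specified in 4.6.4.

```python
from typing import List, Sequence


def interleave(coeffs: Sequence[float], n_sub: int) -> List[List[float]]:
    """Assign coefficient k to subvector k % n_sub (round-robin, illustrative)."""
    subs: List[List[float]] = [[] for _ in range(n_sub)]
    for k, c in enumerate(coeffs):
        subs[k % n_sub].append(c)
    return subs


def deinterleave(subs: Sequence[Sequence[float]]) -> List[float]:
    """Undo interleave(): element j of subvector i returns to index j * n_sub + i."""
    n_sub = len(subs)
    out = [0.0] * sum(len(s) for s in subs)
    for i, s in enumerate(subs):
        for j, c in enumerate(s):
            out[j * n_sub + i] = c
    return out


print(interleave([0, 1, 2, 3, 4, 5, 6], 3))  # [[0, 3, 6], [1, 4], [2, 5]]
```

Spreading neighbouring coefficients over different subvectors means that each subvector, and therefore each code vector index, covers the full bandwidth rather than a narrow region.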

The basic structure of the MPEG-4 GA system is shown in Figures 4.1.1 and 4.1.2. The data flow in these diagrams is from left to right and from top to bottom. The functions of the decoder are to find the description of the quantized audio spectra in the bitstream, decode the quantized values and other reconstruction information, reconstruct the quantized spectra, process the reconstructed spectra through whichever tools are active in the bitstream in order to arrive at the actual signal spectra described by the input bitstream, and finally convert the frequency-domain spectra to the time domain, with or without the optional gain control tool. Following the initial reconstruction and scaling of the spectra, there are many optional tools that modify one or more of the spectra in order to provide more efficient coding. Each optional tool that operates in the spectral domain retains the option to “pass through”: whenever a spectral operation is omitted, the spectra at its input are passed directly through the tool without modification.
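The pass-through convention can be sketched as a chain of optional tools in which a tool with no side information for the current frame simply returns its input. The tool name, the side-information dictionary and the `run_spectral_tools` helper are hypothetical and only illustrate the control flow.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

Spectrum = List[float]
# A spectral tool maps (spectrum, side_info) to a new spectrum.
SpectralTool = Callable[[Spectrum, Any], Spectrum]


def run_spectral_tools(spectrum: Sequence[float],
                       tools: Sequence[Tuple[str, SpectralTool]],
                       side_info: Dict[str, Any]) -> Spectrum:
    """Apply optional spectral tools in order; inactive tools pass data through."""
    out = list(spectrum)
    for name, tool in tools:
        info = side_info.get(name)
        if info is None:
            # Tool not active in this frame: spectra pass through unmodified.
            continue
        out = tool(out, info)
    return out


def hypothetical_gain_tool(spec: Spectrum, factor: float) -> Spectrum:
    """Stand-in tool used only for illustration."""
    return [x * factor for x in spec]


tools = [("gain", hypothetical_gain_tool)]
print(run_spectral_tools([1.0, 2.0], tools, {}))             # [1.0, 2.0]
print(run_spectral_tools([1.0, 2.0], tools, {"gain": 2.0}))  # [2.0, 4.0]
```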

[Figure 4.1.1 - Block diagram of the GA non-scalable encoder. Input: input time signal, via the AAC gain control tool and the filterbank (with window length decision). Panels: Spectral Processing (TNS, long term prediction, intensity/coupling, prediction, PNS, M/S), Psychoacoustic Model (perceptual model, Bark scale to scalefactor band mapping) and Quantization and Coding (AAC: scalefactor coding, quantization, noiseless coding; TwinVQ: spectrum normalization, interleaved VQ). Output: coded audio stream via the bitstream formatter. Legend: data, control.]

[Figure 4.1.2 - Block diagram of the GA non-scalable decoder. Input: coded audio stream via the bitstream formatter. Panels: Decoding and Inverse Quantization (AAC: noiseless decoding, inverse quantization and scalefactor decoding; TwinVQ: interleaved VQ, spectrum normalization) and Spectral Processing (M/S, PNS, prediction, intensity/coupling, long term prediction, TNS), followed by the filterbank and the AAC gain control tool. Output: output time signal. Legend: data, control.]

4.1.1.2 Overview of the Encoder and Decoder Tools

The input to the bitstream demultiplexer tool is the MPEG-4 GA bitstream. The demultiplexer separates the bitstream into the parts for each tool and provides each tool with the bitstream information related to it.

The outputs from the bitstream demultiplexer tool are:

• The quantized (and optionally noiselessly coded) spectra, represented by either

  ◦ the sectioning information and the noiselessly coded spectra (AAC), or

  ◦ a set of indices of code vectors (TwinVQ)

• The M/S decision information (optional)

• The predictor side information (optional)

• The perceptual noise substitution (PNS) information (optional)

• The intensity stereo control information and coupling channel control information (both optional)

• The temporal noise shaping (TNS) information (optional)

• The filterbank control information

• The gain control information (optional)

• Bit rate scalability related side information (optional)

The AAC noiseless decoding tool takes information from the bitstream demultiplexer, parses that information, decodes the Huffman coded data, and reconstructs the quantized spectra and the Huffman and DPCM coded scalefactors.

The inputs to the noiseless decoding tool are:

• The sectioning information for the noiselessly coded spectra

• The noiselessly coded spectra

The outputs of the noiseless decoding tool are:

• The decoded integer representation of the scalefactors

• The quantized values for the spectra
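The DPCM part of the scalefactor reconstruction can be sketched as a running sum. The sketch assumes that Huffman decoding has already produced codebook indices, that the first scalefactor is coded relative to a start value (called `global_gain` here), and that index 60 represents a difference of zero, as in the AAC scalefactor codebook. Separate handling of intensity stereo and PNS bands is omitted, and the function name is hypothetical.

```python
from typing import List, Sequence

SF_INDEX_OFFSET = 60  # assumption: codebook index 60 means "difference of zero"


def decode_scalefactors(global_gain: int, sf_indices: Sequence[int]) -> List[int]:
    """Reconstruct integer scalefactors from Huffman-decoded DPCM indices."""
    scalefactors = []
    current = global_gain
    for idx in sf_indices:
        # Each index encodes the difference to the previous scalefactor.
        current += idx - SF_INDEX_OFFSET
        scalefactors.append(current)
    return scalefactors


print(decode_scalefactors(100, [60, 62, 58]))  # [100, 102, 100]
```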

The inverse quantizer tool takes the quantized values for the spectra and converts the integer values to the un-scaled, reconstructed spectra. This quantizer is a non-uniform quantizer.

The input to the inverse quantizer tool is:

• The quantized values for the spectra

The output of the inverse quantizer tool is:

• The un-scaled, inversely quantized spectra
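For AAC, the non-uniform inverse quantization applies a 4/3 power law to the magnitude of each quantized value and then restores its sign. A minimal sketch (the function name is hypothetical):

```python
import math
from typing import List, Sequence


def inverse_quantize(q: Sequence[int]) -> List[float]:
    """Non-uniform inverse quantization: x = sign(q) * |q| ** (4/3)."""
    return [math.copysign(abs(v) ** (4.0 / 3.0), v) for v in q]


print(inverse_quantize([0, 1, -8]))  # approximately [0.0, 1.0, -16.0]
```

The power law gives larger step sizes for larger values, which is what makes the quantizer non-uniform.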

The scalefactor tool converts the integer representation of the scalefactors to the actual values and multiplies the un-scaled, inversely quantized spectra by the relevant scalefactors.

The inputs to the scalefactor tool are:

• The decoded integer representation of the scalefactors

• The un-scaled, inversely quantized spectra

The output from the scalefactor tool is:

• The scaled, inversely quantized spectra
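In AAC-type decoders, each scalefactor band is multiplied by a gain of 2^(0.25 * (sf - SF_OFFSET)) with SF_OFFSET = 100, so one scalefactor step corresponds to about 1.5 dB. The sketch below applies that rule per band; the band-offset layout and the function name are illustrative assumptions.

```python
from typing import List, Sequence

SF_OFFSET = 100  # offset used by AAC-type decoders


def apply_scalefactors(spec: Sequence[float],
                       band_offsets: Sequence[int],
                       scalefactors: Sequence[int]) -> List[float]:
    """Scale band [band_offsets[b], band_offsets[b+1]) by 2**(0.25*(sf-SF_OFFSET))."""
    out = list(spec)
    for b, sf in enumerate(scalefactors):
        gain = 2.0 ** (0.25 * (sf - SF_OFFSET))
        for k in range(band_offsets[b], band_offsets[b + 1]):
            out[k] = spec[k] * gain
    return out


print(apply_scalefactors([1.0, 1.0, 1.0], [0, 2, 3], [100, 104]))  # [1.0, 1.0, 2.0]
```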

The M/S tool converts spectrum pairs from Mid/Side to Left/Right under control of the M/S decision information, improving stereo imaging quality and sometimes providing coding efficiency.

The inputs to the M/S tool are:

• The M/S decision information

• The scaled, inversely quantized spectra related to pairs of channels

The output from the M/S tool is:

• The scaled, inversely quantized spectra related to pairs of channels, after M/S decoding

Note: The scaled, inversely quantized spectra of individually coded channels are not processed by the M/S block; they are passed directly through the block without modification. If the M/S block is not active, all spectra are passed through this block unmodified.
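Per scalefactor band, M/S decoding reconstructs left = mid + side and right = mid - side wherever the M/S decision information marks the band; bands not marked already hold left/right data and pass through. A sketch with hypothetical names, assuming one boolean decision per band:

```python
from typing import List, Sequence, Tuple


def ms_to_lr(mid: Sequence[float], side: Sequence[float],
             band_offsets: Sequence[int],
             ms_used: Sequence[bool]) -> Tuple[List[float], List[float]]:
    """Convert M/S-coded bands back to L/R; other bands pass through unchanged."""
    left, right = list(mid), list(side)
    for b, used in enumerate(ms_used):
        if not used:
            continue  # band was coded as L/R
        for k in range(band_offsets[b], band_offsets[b + 1]):
            m, s = mid[k], side[k]
            left[k] = m + s
            right[k] = m - s
    return left, right


print(ms_to_lr([3.0, 1.0], [1.0, 5.0], [0, 1, 2], [True, False]))
# ([4.0, 1.0], [2.0, 5.0])
```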

The prediction tool reverses the prediction process carried out at the encoder. This inverse process re-inserts the redundancy that was extracted by the prediction tool at the encoder, under the control of the predictor state information. The tool is implemented as a second-order backward-adaptive predictor. The inputs to the prediction tool are:

• The predictor state information

• The predictor side information

• The scaled, inversely quantized spectra

The output from the prediction tool is:

• The scaled, inversely quantized spectra, after prediction is applied

Note: If prediction is disabled, the scaled, inversely quantized spectra are passed directly through the block without modification.
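What “backward adaptive” buys can be shown with a generic second-order predictor whose coefficients adapt by normalized LMS from previously reconstructed values only. Because encoder and decoder run the same update on the same data, no predictor coefficients need to be transmitted; only the on/off side information is needed. This is an illustrative NLMS predictor, not the normative predictor defined in 4.6.5; the step size `mu` is an arbitrary assumption, and the encoder here is lossless so that the round trip can be checked.

```python
from typing import List, Sequence


class BackwardAdaptivePredictor:
    """Generic 2nd-order NLMS predictor for one spectral coefficient across frames."""

    def __init__(self, mu: float = 0.5, eps: float = 1e-9) -> None:
        self.w = [0.0, 0.0]     # predictor coefficients
        self.hist = [0.0, 0.0]  # previous two reconstructed values
        self.mu = mu
        self.eps = eps

    def predict(self) -> float:
        return self.w[0] * self.hist[0] + self.w[1] * self.hist[1]

    def update(self, x_rec: float) -> None:
        # Adapt from the reconstructed value only (backward adaptation).
        err = x_rec - self.predict()
        norm = self.hist[0] ** 2 + self.hist[1] ** 2 + self.eps
        for i in range(2):
            self.w[i] += self.mu * err * self.hist[i] / norm
        self.hist = [x_rec, self.hist[0]]


def encode_bin(values: Sequence[float], used: Sequence[bool]) -> List[float]:
    pred = BackwardAdaptivePredictor()
    residuals = []
    for x, u in zip(values, used):
        residuals.append(x - pred.predict() if u else x)
        pred.update(x)
    return residuals


def decode_bin(residuals: Sequence[float], used: Sequence[bool]) -> List[float]:
    pred = BackwardAdaptivePredictor()
    out = []
    for r, u in zip(residuals, used):
        # Re-insert the predicted value only where prediction was used.
        x = r + pred.predict() if u else r
        pred.update(x)
        out.append(x)
    return out
```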

Alternatively, a forward-adaptive long term prediction tool is provided. The inputs to the long term prediction tool are:

• The reconstructed time-domain output of the decoder

• The scaled, inversely quantized spectra

The output from the long term prediction tool is:

• The scaled, inversely quantized spectra, after prediction is applied

Note: If prediction is disabled, the scaled, inversely quantized spectra are passed directly through the block without modification.
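In a forward-adaptive scheme the encoder chooses the prediction parameters and transmits them. The time-domain step of such a long term predictor can be sketched as copying a scaled segment of the decoder's past output, one lag back. The parameter names `lag` and `gain` are illustrative, the sketch assumes `lag >= frame_len` so that only fully reconstructed samples are used, and the subsequent transform of the prediction into the spectral domain is omitted.

```python
from typing import List, Sequence


def ltp_predict(history: Sequence[float], lag: int, gain: float,
                frame_len: int) -> List[float]:
    """Predict the next frame as gain * history[N + n - lag], with N = len(history)."""
    n_hist = len(history)
    if not frame_len <= lag <= n_hist:
        raise ValueError("lag must satisfy frame_len <= lag <= len(history)")
    return [gain * history[n_hist + n - lag] for n in range(frame_len)]


past = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
print(ltp_predict(past, lag=4, gain=0.5, frame_len=4))  # [2.0, 2.5, 3.0, 3.5]
```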

The perceptual noise substitution (PNS) tool implements noise substitution decoding on channel spectra, which provides an efficient representation of noise-like signal components.

The inputs to the perceptual noise substitution tool are:

• The inversely quantized spectra

• The perceptual noise substitution control information

The output from the perceptual noise substitution tool is:

• The inversely quantized spectra

Note: If either part of this block is disabled, the scaled, inversely quantized spectra are passed directly through this part without modification. If the perceptual noise substitution block is not active, all spectra are passed through this block unmodified.
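Noise substitution can be sketched as filling a band with pseudo-random values and scaling them to a transmitted energy. The uniform noise source, the `target_energy` parameter and the function name are assumptions for illustration; the normative noise generation and energy coding are defined in 4.6.12.

```python
import math
import random
from typing import List


def pns_fill(band_len: int, target_energy: float, rng: random.Random) -> List[float]:
    """Return band_len noise values whose total energy equals target_energy."""
    noise = [rng.uniform(-1.0, 1.0) for _ in range(band_len)]
    energy = sum(v * v for v in noise)
    if energy == 0.0:
        return [0.0] * band_len
    scale = math.sqrt(target_energy / energy)
    return [v * scale for v in noise]


band = pns_fill(16, 4.0, random.Random(1))
print(round(sum(v * v for v in band), 6))  # 4.0
```

Only the band energy needs to be transmitted; the waveform detail of a noise-like band is not perceptually relevant.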

The intensity stereo / coupling tool implements intensity stereo decoding on pairs of spectra. In addition, it adds the relevant data from a dependently switched coupling channel to the spectra at this point, as directed by the coupling control information.

The inputs to the intensity stereo / coupling tool are:

• The inversely quantized spectra

• The intensity stereo control information and coupling control information

The output from the intensity stereo / coupling tool is:

• The inversely quantized spectra after intensity and coupling channel decoding
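For intensity-coded bands, the spectrum of the second channel is derived from that of the first channel. The sketch assumes the AAC convention scale = sign * 0.5 ** (0.25 * is_position); the per-band `positions` and `signs` lists and the function name are illustrative, and coupling-channel processing is not shown.

```python
from typing import List, Optional, Sequence


def intensity_decode(left: Sequence[float], right: Sequence[float],
                     band_offsets: Sequence[int],
                     positions: Sequence[Optional[int]],
                     signs: Sequence[int]) -> List[float]:
    """Rebuild the right channel in bands coded with intensity stereo."""
    out = list(right)
    for b, pos in enumerate(positions):
        if pos is None:
            continue  # band not intensity coded: keep right channel as decoded
        scale = signs[b] * 0.5 ** (0.25 * pos)
        for k in range(band_offsets[b], band_offsets[b + 1]):
            out[k] = scale * left[k]
    return out


print(intensity_decode([1.0, 2.0, 4.0], [9.0, 0.0, 0.0], [0, 1, 3], [None, 4], [1, -1]))
# [9.0, -1.0, -2.0]
```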

Note: If either part of this block is disabled, the scaled, inversely quantized spectra are passed directly t

...

ISO/IEC 14496-3:1999(E)

����ISO/IEC

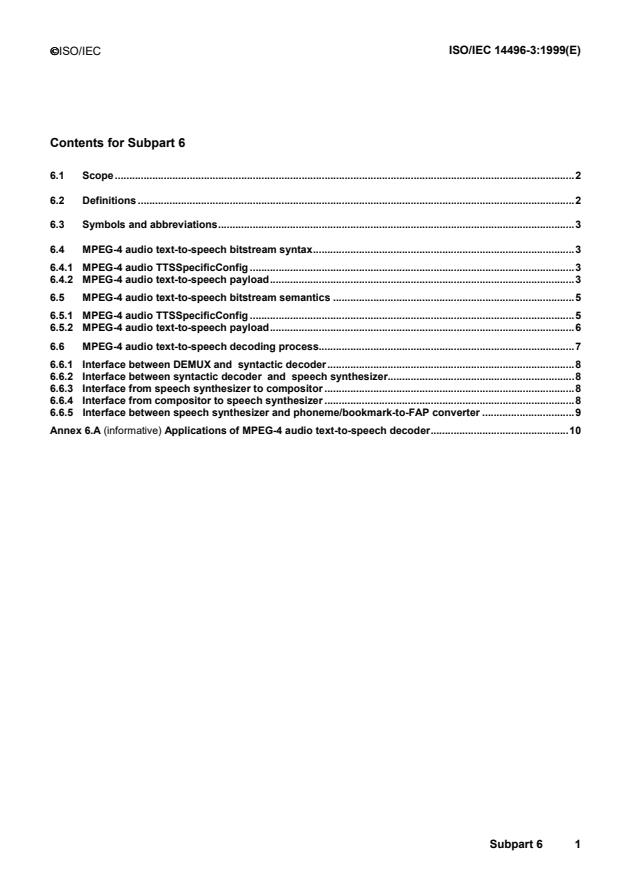

Contents for Subpart 6

6.1 Scope.2

6.2 Definitions .2

6.3 Symbols and abbreviations.3

6.4 MPEG-4 audio text-to-speech bitstream syntax.3

6.4.1 MPEG-4 audio TTSSpecificConfig .3

6.4.2 MPEG-4 audio text-to-speech payload.3

6.5 MPEG-4 audio text-to-speech bitstream semantics .5

6.5.1 MPEG-4 audio TTSSpecificConfig .5

6.5.2 MPEG-4 audio text-to-speech payload.6

6.6 MPEG-4 audio text-to-speech decoding process.7

6.6.1 Interface between DEMUX and syntactic decoder.8

6.6.2 Interface between syntactic decoder and speech synthesizer.8

6.6.3 Interface from speech synthesizer to compositor .8

6.6.4 Interface from compositor to speech synthesizer .8

6.6.5 Interface between speech synthesizer and phoneme/bookmark-to-FAP converter .9

Annex 6.A (informative) Applications of MPEG-4 audio text-to-speech decoder.10

Subpart 6: TTSI

6.1 Scope

This subpart of ISO/IEC 14496-3 specifies the coded representation of MPEG-4 Audio Text-to-Speech (M-TTS) and its decoder, for high-quality synthesized speech and for enabling various applications. The exact synthesis method is not standardized, partly because various speech synthesis techniques already exist.

This subpart of ISO/IEC 14496-3 is intended for M-TTS functionalities such as the interoperability of facial animation (FA) and moving pictures (MP) with a coded bitstream. The M-TTS functionalities include the capability of utilizing prosodic information extracted from natural speech. They also include applications such as a speaking device for FA tools and a dubbing device for moving pictures that utilizes lip shape and input text information.

Text-to-speech (TTS) synthesis technology has recently become a rather common interface tool and is beginning to play an important role in various multimedia application areas. For instance, using TTS synthesis functionality, multimedia content with narration can easily be composed without recording natural speech. Moreover, TTS synthesis combined with facial animation (FA) and moving picture (MP) functionalities could make such content much richer: TTS technology can be used as a speech output device for FA tools and for MP dubbing with lip shape information. In MPEG-4, only common interfaces for the TTS synthesizer and for FA/MP interoperability are defined. The M-TTS functionalities can be considered a superset of the conventional TTS framework. In addition to input text, this TTS synthesizer can utilize prosodic information from natural speech and can thereby generate much higher quality synthetic speech. The interface bitstream format is designed to be user-friendly: if some parameters of the prosodic information are not available, the missing parameters are generated using pre-established rules. The functionalities of M-TTS thus range from conventional TTS synthesis to natural speech coding and its application areas, i.e., from a simple TTS synthesis function to those for FA and MP.

6.2 Definitions

6.2.1 International Phonetic Alphabet (IPA): The internationally agreed symbol set used to represent the phonemes that appear in human speech.

6.2.2 lip shape pattern: A number that specifies a particular pre-classified lip shape pattern.

6.2.3 lip synchronization: A functionality that synchronizes speech with the corresponding lip shapes.

6.2.4 MPEG-4 Audio Text-to-Speech Decoder: A device that produces synthesized speech from the M-TTS bitstream while supporting all the M-TTS functionalities, such as speech synthesis for FA and MP dubbing.

6.2.5 moving picture dubbing: A functionality that assigns synthetic speech to the corresponding moving picture while utilizing lip shape pattern information for synchronization.

6.2.6 M-TTS sentence: Defines information, such as prosody, gender and age, that applies only to the corresponding sentence to be synthesized.

6.2.7 M-TTS sequence: Defines the control information that affects all M-TTS sentences following this M-TTS sequence.

6.2.8 phoneme/bookmark-to-FAP converter: A device that converts phoneme and bookmark information to FAPs.

6.2.9 text-to-speech synthesizer: A device that produces synthesized speech according to the input sentence character strings.

6.2.10 trick mode: A set of functions that enables stop, play, forward and backward operations for users.

6.3 Symbols and abbreviations

F0 fundamental frequency (pitch frequency)

DEMUX demultiplexer

FA facial animation

FAP facial animation parameter

ID identifier

IPA International Phonetic Alphabet

MP moving picture

M-TTS MPEG-4 Audio TTS

STOD story teller on demand

TTS text-to-speech

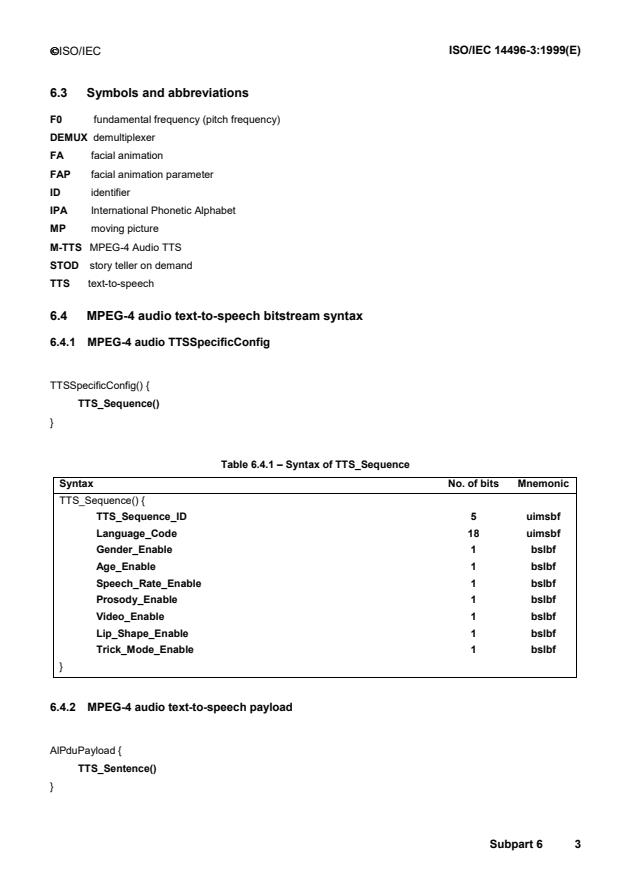

6.4 MPEG-4 audio text-to-speech bitstream syntax

6.4.1 MPEG-4 audio TTSSpecificConfig

TTSSpecificConfig() {

TTS_Sequence()

}

Table 6.4.1 – Syntax of TTS_Sequence

Syntax No. of bits Mnemonic

TTS_Sequence() {

TTS_Sequence_ID 5 uimsbf

Language_Code 18 uimsbf

Gender_Enable 1 bslbf

Age_Enable 1 bslbf

Speech_Rate_Enable 1 bslbf

Prosody_Enable 1 bslbf

Video_Enable 1 bslbf

Lip_Shape_Enable 1 bslbf

Trick_Mode_Enable 1 bslbf

}
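Table 6.4.1 defines 30 bits in total (5 + 18 + seven one-bit flags). A minimal parsing sketch, assuming an MSB-first bit reader over a byte string; the `BitReader` and `TTSSequence` names are hypothetical helpers, not part of the standard.

```python
from dataclasses import dataclass


class BitReader:
    """Reads unsigned integers MSB-first from a byte string."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current bit position

    def read(self, n: int) -> int:
        value = 0
        for _ in range(n):
            byte = self.data[self.pos // 8]
            value = (value << 1) | ((byte >> (7 - self.pos % 8)) & 1)
            self.pos += 1
        return value


@dataclass
class TTSSequence:
    sequence_id: int
    language_code: int
    gender_enable: bool
    age_enable: bool
    speech_rate_enable: bool
    prosody_enable: bool
    video_enable: bool
    lip_shape_enable: bool
    trick_mode_enable: bool


def parse_tts_sequence(r: BitReader) -> TTSSequence:
    # Fields are read in the order of Table 6.4.1.
    sequence_id = r.read(5)
    language_code = r.read(18)
    flags = [bool(r.read(1)) for _ in range(7)]
    return TTSSequence(sequence_id, language_code, *flags)
```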

6.4.2 MPEG-4 audio text-to-speech payload

AlPduPayload {

TTS_Sentence()

}

Subpart 6 3

---------------------- Page: 3 ----------------------

ISO/IEC 14496-3:1999(E)

�ISO/IEC

Table 6.4.2 – Syntax of TTS_Sentence

Syntax No. of bits Mnemonic

TTS_Sentence() {

TTS_Sentence_ID 10 uimsbf

Silence 1 bslbf

if (Silence) {

Silence_Duration 12 uimsbf

}

else {

if (Gender_Enable) {

Gender 1 bslbf

}

if (Age_Enable) {

Age 3 uimsbf

}

if (!Video_Enable && Speech_Rate_Enable) {

Speech_Rate 4 uimsbf

}

Length_of_Text 12 uimsbf

for (j=0; j<Length_of_Text; j++) {

TTS_Text 8 bslbf

}

if (Prosody_Enable) {

Dur_Enable 1 bslbf

F0_Contour_Enable 1 bslbf

Energy_Contour_Enable 1 bslbf

Number_of_Phonemes 10 uimsbf

Phoneme_Symbols_Length 13 uimsbf

for (j=0 ; j<Phoneme_Symbols_Length; j++) {

Phoneme_Symbols 8 bslbf

}

for (j=0 ; j<Number_of_Phonemes; j++) {

if(Dur_Enable) {

Dur_each_Phoneme 12 uimsbf

}

if (F0_Contour_Enable) {

Num_F0 5 uimsbf

for (k=0; k<Num_F0; k++) {

F0_Contour_each_Phoneme 8 uimsbf

F0_Contour_each_Phoneme_Time 12 uimsbf

}

}

if (Energy_Contour_Enable) {

Energy_Contour_each_Phoneme 8*3=24 uimsbf

}

}

}

if (Video_Enable) {

Sentence_Duration 16 uimsbf

Position_in_Sentence 16 uimsbf

Offset 10 uimsbf

}

if (Lip_Shape_Enable) {

Number_of_Lip_Shape 10 uimsbf

for (j=0 ; j<Number_of_Lip_Shape; j++) {

Lip_Shape_in_Sentence 16 uimsbf

Lip_Shape 8 uimsbf

}

}

}

}
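The conditional structure of Table 6.4.2 translates directly into a recursive-descent parse. The sketch repeats the same hypothetical MSB-first `BitReader` so that the block stands alone, takes the enable flags of the governing TTS_Sequence as a dictionary, and returns the fields in a plain dictionary whose keys are illustrative. Splitting the 24-bit energy field into three 8-bit values follows the 8*3 notation in the table and is an assumption.

```python
from typing import Any, Dict


class BitReader:
    """Reads unsigned integers MSB-first from a byte string."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0

    def read(self, n: int) -> int:
        value = 0
        for _ in range(n):
            byte = self.data[self.pos // 8]
            value = (value << 1) | ((byte >> (7 - self.pos % 8)) & 1)
            self.pos += 1
        return value


def parse_tts_sentence(r: BitReader, seq: Dict[str, bool]) -> Dict[str, Any]:
    """Parse one TTS_Sentence; seq holds the TTS_Sequence enable flags."""
    s: Dict[str, Any] = {"sentence_id": r.read(10), "silence": bool(r.read(1))}
    if s["silence"]:
        s["silence_duration"] = r.read(12)
        return s
    if seq["gender_enable"]:
        s["gender"] = r.read(1)
    if seq["age_enable"]:
        s["age"] = r.read(3)
    if not seq["video_enable"] and seq["speech_rate_enable"]:
        s["speech_rate"] = r.read(4)
    length = r.read(12)
    s["text"] = bytes(r.read(8) for _ in range(length))
    if seq["prosody_enable"]:
        dur_en = r.read(1)
        f0_en = r.read(1)
        energy_en = r.read(1)
        n_phonemes = r.read(10)
        sym_len = r.read(13)
        s["phoneme_symbols"] = bytes(r.read(8) for _ in range(sym_len))
        phonemes = []
        for _ in range(n_phonemes):
            ph: Dict[str, Any] = {}
            if dur_en:
                ph["duration"] = r.read(12)
            if f0_en:
                num_f0 = r.read(5)
                # Each entry: (F0_Contour_each_Phoneme, F0_Contour_each_Phoneme_Time)
                ph["f0"] = [(r.read(8), r.read(12)) for _ in range(num_f0)]
            if energy_en:
                ph["energy"] = (r.read(8), r.read(8), r.read(8))
            phonemes.append(ph)
        s["phonemes"] = phonemes
    if seq["video_enable"]:
        s["sentence_duration"] = r.read(16)
        s["position_in_sentence"] = r.read(16)
        s["offset"] = r.read(10)
    if seq["lip_shape_enable"]:
        n_lip = r.read(10)
        # Each entry: (Lip_Shape_in_Sentence, Lip_Shape)
        s["lip_shapes"] = [(r.read(16), r.read(8)) for _ in range(n_lip)]
    return s
```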

6.5 MPEG-4 audio text-to-speech bitstream semantics

6.5.1 MPEG-4 audio TTSSpecificConfig

TTS_Sequence_ID This is a five-bit ID that uniquely identifies each TTS object appearing in one scene. Each speaker in a scene has a distinct TTS_Sequence_ID.

Language_Code When the first 16 bits are "00" (00110000 00110000 in binary), the IPA is to be sent. For all other languages, these 16 bits are the ISO 639 language code. In addition to these 16 bits, two bits that represent dialects of each language (user defined) are appended at the end, giving 18 bits in total.
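The 18-bit Language_Code can be split into its 16-bit language part and the 2-bit dialect suffix as follows. The sketch assumes that the 16 bits hold the ISO 639 code as two 8-bit ASCII characters, which is consistent with the "00" example (00110000 is the ASCII code of '0'); the function name is hypothetical.

```python
from typing import Tuple


def split_language_code(code18: int) -> Tuple[str, int, bool]:
    """Return (two-character code, 2-bit dialect, is_ipa) for an 18-bit Language_Code."""
    lang16 = code18 >> 2
    dialect = code18 & 0b11
    chars = bytes([lang16 >> 8, lang16 & 0xFF]).decode("ascii")
    return chars, dialect, chars == "00"


print(split_language_code((0x3030 << 2) | 2))  # ('00', 2, True)
print(split_language_code((0x656E << 2) | 1))  # ('en', 1, False)
```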

Gender_Enable This is a one-bit flag which is set to ‘1’ when the gender information exists.

Age_Enable This is a one-bit flag which is set to ‘1’ when the age information exists.

Speech_Rate_Enable This is a one-bit flag which is set to ‘1’ when the speech rate information exists.

Prosody_Enable This is a one-bit flag which is set to ‘1’ when the prosody information exists.

Video_Enable This is a one-bit flag which is set to ‘1’ when the M-TTS decoder works with MP. In this case, M-TTS should synchronize synthetic speech to MP and accommodate the ttsForward and ttsBackward functionality. When the Video_Enable flag is set, the M-TTS decoder uses the system clock to select the appropriate TTS_Sentence frame and fetches the Sentence_Duration, Position_in_Sentence and Offset data. The TTS synthesizer assigns an appropriate duration to each phoneme so as to meet Sentence_Duration. The starting point of speech in a sentence is determined by Position_in_Sentence. If Position_in_Sentence equals 0 (i.e., speech starts at the beginning of the sentence), TTS uses Offset as a delay time to synchronize synthetic speech to MP.
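Because the synthesis method is not standardized, how durations are assigned to meet Sentence_Duration is up to the synthesizer. One simple possibility, shown here as an assumption rather than a requirement, is to scale rule-based phoneme durations proportionally and round the cumulative end points, so that the integer durations add up exactly to Sentence_Duration in whatever time unit the synthesizer uses. The function name is hypothetical.

```python
from typing import List, Sequence


def fit_durations(durations: Sequence[int], sentence_duration: int) -> List[int]:
    """Scale durations proportionally so their integer sum equals sentence_duration."""
    total = sum(durations)
    if total <= 0:
        raise ValueError("durations must have a positive sum")
    out: List[int] = []
    acc = 0
    prev_end = 0
    for d in durations:
        acc += d
        # Round the cumulative end point, not each duration, to avoid drift.
        end = round(acc * sentence_duration / total)
        out.append(end - prev_end)
        prev_end = end
    return out


print(fit_durations([100, 100, 200], 800))  # [200, 200, 400]
print(fit_durations([1, 1, 1], 10))         # [3, 4, 3]
```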

Lip_Shape_Enable This is a one-bit flag which is set to ‘1’ when the coded input bitstream has lip shape information. With lip shape information, M-TTS requests the FA tool to change the lip shape according to the timing information (Lip_Shape_in_Sentence) and the predefined lip shape pattern.

Trick_Mode_Enable This is a one-bit flag which is set to ‘1’ when the coded input bitstream permits trick mode functions such as stop, play, forward and backward.

6.5.2 MPEG-4 audio text-to-speech payload

TTS_Sentence_ID This is a ten-bit ID that uniquely identifies a sentence in the M-TTS text data sequence for indexing purposes. The first five bits equal the TTS_Sequence_ID of the speaker defined in subclause 6.5.1, and the remaining five bits are the sequential sentence number of each TTS object.
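The 10-bit TTS_Sentence_ID packs the 5-bit TTS_Sequence_ID above a 5-bit sentence number, so at most 32 sentence numbers can be distinguished per speaker. A sketch of packing and unpacking (function names hypothetical):

```python
from typing import Tuple


def make_sentence_id(sequence_id: int, sentence_no: int) -> int:
    """Pack a 5-bit TTS_Sequence_ID and a 5-bit sentence number into 10 bits."""
    if not (0 <= sequence_id < 32 and 0 <= sentence_no < 32):
        raise ValueError("both fields must fit in five bits")
    return (sequence_id << 5) | sentence_no


def split_sentence_id(sentence_id: int) -> Tuple[int, int]:
    """Inverse of make_sentence_id: return (TTS_Sequence_ID, sentence number)."""
    return sentence_id >> 5, sentence_id & 0x1F


print(make_sentence_id(3, 7))  # 103
print(split_sentence_id(103))  # (3, 7)
```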

Silence This is a one-bit flag which is set to ‘1’ when the current position is silence.

Silence_Duration This defines the time duration of the current silence segment in milliseconds. It has a value from 1 to 4095; the value ‘0’ is prohibited.

Gender This is a one-bit flag which is set to ‘1’ if the gender of the synthetic speech producer is male and to ‘0’ if female.

Age This represents the age of the speaker for synthetic speech. The meaning of age is defined in Table 6.5.1.

Table 6.5.1 – Age mapping table

Age age of the speaker

000 below 6

001 6 - 12

010 13 - 18

011 19 - 25

100 26 - 34

101 35 - 45

110 45 - 60

111 over 60

Speech_Rate This defines the synthetic speech rate in 16 levels. Level 8 corresponds to the normal speed of the speaker defined in the current speech synthesizer, level 0 corresponds to the slowest speed of the speech synthesizer, and level 15 corresponds to the fastest speed of the speech synthesizer.

Length_

...

� ISO/IEC ISO/IEC 14496-3:1999(E)

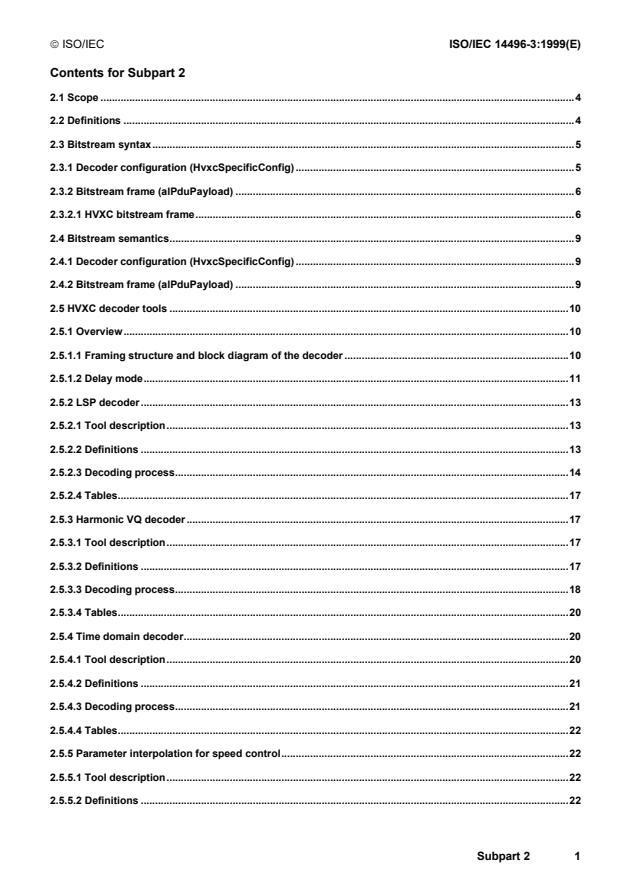

Contents for Subpart 2

2.1 Scope .4

2.2 Definitions .4

2.3 Bitstream syntax.5

2.3.1 Decoder configuration (HvxcSpecificConfig).5

2.3.2 Bitstream frame (alPduPayload) .6

2.3.2.1 HVXC bitstream frame.6

2.4 Bitstream semantics.9

2.4.1 Decoder configuration (HvxcSpecificConfig).9

2.4.2 Bitstream frame (alPduPayload) .9

2.5 HVXC decoder tools .10

2.5.1 Overview.10

2.5.1.1 Framing structure and block diagram of the decoder .10

2.5.1.2 Delay mode.11

2.5.2 LSP decoder.13

2.5.2.1 Tool description.13

2.5.2.2 Definitions .13

2.5.2.3 Decoding process.14

2.5.2.4 Tables.17

2.5.3 Harmonic VQ decoder .17

2.5.3.1 Tool description.17

2.5.3.2 Definitions .17

2.5.3.3 Decoding process.18

2.5.3.4 Tables.20

2.5.4 Time domain decoder.20

2.5.4.1 Tool description.20

2.5.4.2 Definitions .21

2.5.4.3 Decoding process.21

2.5.4.4 Tables.22

2.5.5 Parameter interpolation for speed control.22

2.5.5.1 Tool description.22

2.5.5.2 Definitions .22

Subpart 2 1

---------------------- Page: 1 ----------------------

ISO/IEC 14496-3:1999(E) � ISO/IEC

2.5.5.3 Speed control process .22

2.5.6 Voiced component synthesizer.24

2.5.6.1 Tool description.24

2.5.6.2 Definitions .25

2.5.6.3 Synthesis process .26

2.5.7 Unvoiced component synthesizer .34

2.5.7.1 Tool description.34

2.5.7.2 Definitions .34

2.5.7.3 Synthesis process .34

2.5.8 Variable rate decoder .36

2.5.8.1 Tool description.36

2.5.8.2 Definitions .36

2.5.8.3 Decoding process.37

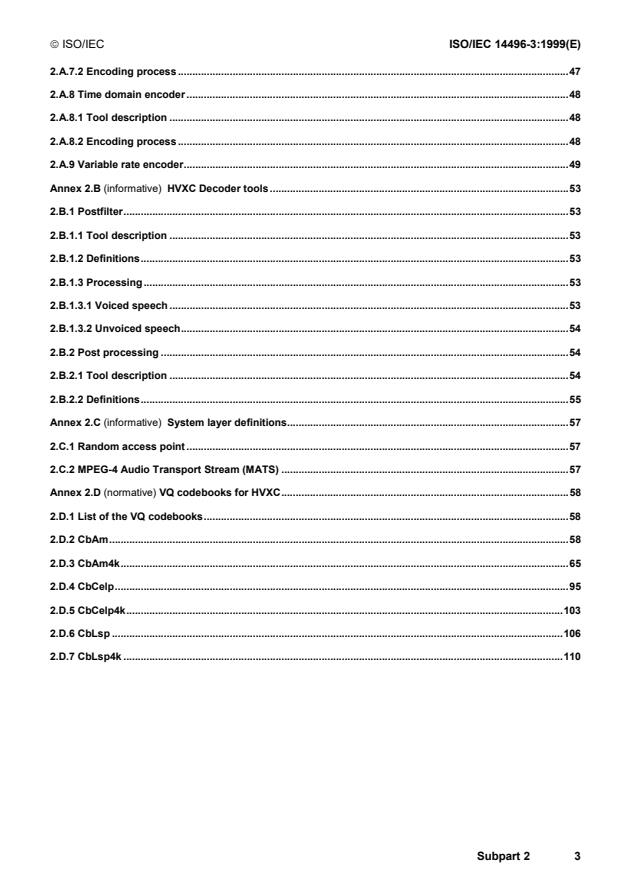

Annex 2.A (informative) HVXC Encoder tools .39

2.A.1 Overview of encoder tools .39

2.A.2 Normalization.39

2.A.2.1 Tool description .39

2.A.2.2 Normalization process.39

2.A.3 Pitch estimation.43

2.A.3.1 Tool description .43

2.A.3.2 Pitch estimation process.43

2.A.3.3 Pitch tracking.43

2.A.4 Harmonic magnitudes extraction .45

2.A.4.1 Tool description .45

2.A.4.2 Harmonic magnitudes extraction process .45

2.A.5 Perceptual weighting .46

2.A.5.1 Tool description .46

2.A.6 Harmonic VQ encoder.46

2.A.6.1 Tool description .46

2.A.6.2 Encoding process .46

2.A.7 V/UV decision .47

2.A.7.1 Tool description .47

2 Subpart 2

---------------------- Page: 2 ----------------------

� ISO/IEC ISO/IEC 14496-3:1999(E)

2.A.7.2 Encoding process .47

2.A.8 Time domain encoder .48

2.A.8.1 Tool description .48

2.A.8.2 Encoding process .48

2.A.9 Variable rate encoder.49

Annex 2.B (informative) HVXC Decoder tools .53

2.B.1 Postfilter.53

2.B.1.1 Tool description .53

2.B.1.2 Definitions.53

2.B.1.3 Processing.53

2.B.1.3.1 Voiced speech .53

2.B.1.3.2 Unvoiced speech.54

2.B.2 Post processing .54

2.B.2.1 Tool description .54

2.B.2.2 Definitions.55

Annex 2.C (informative) System layer definitions.57

2.C.1 Random access point .57

2.C.2 MPEG-4 Audio Transport Stream (MATS) .57

Annex 2.D (normative) VQ codebooks for HVXC.58

2.D.1 List of the VQ codebooks.58

2.D.2 CbAm.58

2.D.3 CbAm4k.65

2.D.4 CbCelp.95

2.D.5 CbCelp4k.103

2.D.6 CbLsp .106

2.D.7 CbLsp4k .110

Subpart 2 3

---------------------- Page: 3 ----------------------

ISO/IEC 14496-3:1999(E) � ISO/IEC

Subpart 2: Speech coding - HVXC

2.1 Scope

MPEG-4 parametric speech coding uses the Harmonic Vector eXcitation Coding (HVXC) algorithm. This algorithm applies harmonic coding to LPC residual signals in voiced segments and Vector eXcitation Coding (VXC) in unvoiced segments. HVXC codes speech signals at 2.0 kbit/s and 4.0 kbit/s with a scalable scheme. In this scheme, 2.0 kbit/s decoding is possible from either a 2.0 kbit/s bitstream or a 4.0 kbit/s bitstream. HVXC also provides variable bit rate coding with a typical average bit rate of around 1.2-1.7 kbit/s. Speed and pitch can be changed independently during decoding, which is a powerful functionality for fast database search. The frame length is 20 ms, and one of four algorithmic delays can be selected: 33.5 ms, 36 ms, 53.5 ms or 56 ms.
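The frame arithmetic implied by these figures can be checked with a short C sketch. It assumes an 8 kHz sampling rate, which follows from the statement in 2.5.1.2 that 2.5 ms corresponds to 20 samples. The macro and function names are hypothetical and are not part of the standard.

```c
#include <assert.h>

#define HVXC_FS_HZ    8000  /* assumed: "2.5 ms (20 samples)" in 2.5.1.2 implies 8 kHz */
#define HVXC_FRAME_MS 20    /* frame length stated in 2.1 */

/* Number of samples in one 20 ms frame. */
static int hvxc_frame_samples(void)
{
    return HVXC_FS_HZ * HVXC_FRAME_MS / 1000;
}

/* Number of bits in one 20 ms frame at a fixed bit rate given in bit/s. */
static int hvxc_frame_bits(int rate_bps)
{
    assert(rate_bps > 0 && rate_bps * HVXC_FRAME_MS % 1000 == 0);
    return rate_bps * HVXC_FRAME_MS / 1000;
}
```

Under this assumption each frame holds 160 samples. At 2.0, 3.7 and 4.0 kbit/s it carries 40, 74 and 80 bits respectively.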

Figure 2.1.1 - Block diagram of the parametric speech decoder (the bitstream is input to the HVXC decoder and synthesiser, which is controlled by a pitch control factor and a speed control factor)

2.2 Definitions

2.2.1. DFT: Discrete Fourier Transform.

2.2.2. dimension conversion: A method to convert the dimension of a vector by a combination of low-pass filtering and linear interpolation.

2.2.3. excitation: A time domain signal which excites the LPC synthesis filter.

2.2.4. FFT: Fast Fourier Transform.

2.2.5. fundamental frequency: A parameter which represents signal periodicity in the frequency domain.

2.2.6. harmonics: Samples of the frequency spectrum at multiples of the fundamental frequency.

2.2.7. harmonic magnitude: Magnitude of each harmonic.

2.2.8. harmonic synthesis: A method to obtain a periodic excitation from harmonic magnitudes.

2.2.9. HVXC: Harmonic Vector eXcitation Coding (parametric speech coding).

2.2.10. IFFT: Inverse Fast Fourier Transform.

2.2.11. initial phase: A phase value at the onset of a voiced signal in harmonic synthesis.

2.2.12. interframe prediction: A method to predict a value in the current frame from values in previous frames. Interframe prediction is used in the VQ of LSPs.

2.2.13. LPC: Linear Predictive Coding.

2.2.14. LPC synthesis filter: An IIR filter whose coefficients are the LPC coefficients. This filter models the time-varying vocal tract.

2.2.15. LPC residual signal: The output of the LPC inverse filter, which has a flattened frequency spectrum.

2.2.16. LSP: Line Spectral Pairs.

2.2.17. mixed voiced frame: A speech segment which has both voiced and unvoiced components.

2.2.18. pitch: A parameter which represents signal periodicity in the time domain. It is expressed as a number of samples.

2.2.19. pitch control: A functionality to control the pitch of the synthesized speech signal without changing its speed.

2.2.20. postfilter: A filter to enhance the perceptual quality of the synthesized speech signal.

2.2.21. sinusoidal synthesis: A method to obtain a time domain waveform as a sum of amplitude-modulated sinusoids.

2.2.22. spectral envelope: A set of harmonic magnitudes.


2.2.23. speed control: A functionality to control the speed of the synthesized speech signal without changing its pitch or phonemes.

2.2.24. SQ: Scalar Quantization.

2.2.25. unvoiced frame: A speech segment which resembles random noise and has no periodicity.

2.2.26. variable bit rate: A coding mode in which the number of bits representing a coded frame varies from frame to frame.

2.2.27. voiced frame: A speech segment which has periodicity and a relatively high energy.

2.2.28. VQ: Vector Quantization.

2.2.29. V/UV decision: A decision whether the current frame is voiced, unvoiced or mixed voiced.

2.2.30. VXC: Vector eXcitation Coding, also called CELP (Code Excited Linear Prediction). In HVXC, no adaptive codebook is used.

2.2.31. white Gaussian noise: A noise sequence whose samples are uncorrelated and Gaussian distributed.

A general glossary and list of symbols and abbreviations is located in Section 1.

2.3 Bitstream syntax

An MPEG-4 Natural Audio Object of the HVXC object type is transmitted in one or two Elementary Streams: the base layer stream and an optional enhancement layer stream.

The bitstream syntax is described in pseudo-C code.

2.3.1 Decoder configuration (HvxcSpecificConfig)

The decoder configuration information for the HVXC object type is transmitted in the DecoderConfigDescriptor() of the base layer and of the optional enhancement layer Elementary Stream (see Section 1, clause 1.6).

HVXC Base Layer -- Configuration

For the HVXC object type in non-scalable mode, or as the base layer in scalable mode, the following HvxcSpecificConfig() is required:

HvxcSpecificConfig() {
    HVXCconfig();
}

HVXC Enhancement Layer -- Configuration

The HVXC object type provides a scalable mode consisting of a 2 kbit/s base layer plus a 2 kbit/s enhancement layer. In this scalable mode the base layer configuration must be as follows:

HVXCvarMode = 0 (HVXC fixed bit rate)
HVXCrateMode = 0 (HVXC 2 kbit/s)

For the enhancement layer, no HvxcSpecificConfig() data is required:

HvxcSpecificConfig() {
}

Table 2.3.1 - Syntax of HVXCconfig()

Syntax                              No. of bits   Mnemonic
HVXCconfig()
{
    HVXCvarMode                     1             uimsbf
    HVXCrateMode                    2             uimsbf
    extensionFlag                   1             uimsbf
    if (extensionFlag) {
    }
}

Table 2.3.2 - HVXCvarMode

HVXCvarMode   Description
0             HVXC fixed bit rate
1             HVXC variable bit rate

Table 2.3.3 - HVXCrateMode

HVXCrateMode   HVXCrate   Description
0              2000       HVXC 2 kbit/s
1              4000       HVXC 4 kbit/s
2              3700       HVXC 3.7 kbit/s
3              -          (reserved)

Table 2.3.4 - HVXC constants

NUM_SUBF1   2
NUM_SUBF2   4
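The C fragment below is a sketch of how the four configuration bits might be read. It parses HVXCconfig() with a minimal MSB-first (uimsbf) bit reader and maps HVXCrateMode to HVXCrate through Table 2.3.3. The BitReader type, the br_read() and parse_hvxc_config() functions and the HvxcConfig struct are hypothetical. Any extension data signalled by extensionFlag is not parsed.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical MSB-first bit reader for uimsbf fields. */
typedef struct {
    const uint8_t *buf;   /* input bytes */
    size_t len_bits;      /* number of valid bits in buf */
    size_t pos;           /* index of the next bit to read */
} BitReader;

/* Read n (0..32) bits as an unsigned integer, most significant bit first. */
static uint32_t br_read(BitReader *br, int n)
{
    uint32_t v = 0;
    assert(n >= 0 && n <= 32 && br->pos + (size_t)n <= br->len_bits);
    for (int i = 0; i < n; i++, br->pos++) {
        unsigned bit = (br->buf[br->pos >> 3] >> (7 - (br->pos & 7))) & 1u;
        v = (v << 1) | bit;
    }
    return v;
}

/* Decoded HVXCconfig() fields (hypothetical struct). */
typedef struct {
    int var_mode;    /* HVXCvarMode: 0 = fixed, 1 = variable bit rate */
    int rate_mode;   /* HVXCrateMode (Table 2.3.3) */
    int rate;        /* HVXCrate in bit/s, 0 for the reserved value 3 */
    int extension;   /* extensionFlag; extension data is not parsed here */
} HvxcConfig;

static void parse_hvxc_config(BitReader *br, HvxcConfig *cfg)
{
    static const int rate_table[4] = { 2000, 4000, 3700, 0 };  /* Table 2.3.3 */
    cfg->var_mode  = (int)br_read(br, 1);
    cfg->rate_mode = (int)br_read(br, 2);
    cfg->extension = (int)br_read(br, 1);
    cfg->rate      = rate_table[cfg->rate_mode];
}
```

For example, a configuration byte 0x20 (bits 0, 01, 0) decodes as fixed bit rate mode at 4000 bit/s with no extension.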

2.3.2 Bitstream frame (alPduPayload)

The dynamic data for the HVXC object type is transmitted as AL-PDU payload in the base layer and the optional enhancement layer Elementary Stream (see Section 1, clause 1.6).

HVXC Base Layer -- Access Unit payload

alPduPayload {
    HVXCframe();
}

HVXC Enhancement Layer -- Access Unit payload

To parse and decode the HVXC enhancement layer, information decoded from the HVXC base layer is required.

alPduPayload {
    HVXCenhaFrame();
}

Table 2.3.5 - Syntax of HVXCframe()

Syntax                              No. of bits   Mnemonic
HVXCframe()
{
    if (HVXCvarMode == 0) {
        HVXCfixframe(HVXCrate)
    }
    else {
        HVXCvarframe()
    }
}

2.3.2.1 HVXC bitstream frame

Table 2.3.6 - Syntax of HVXCfixframe(rate)

Syntax                              No. of bits   Mnemonic
HVXCfixframe(rate)
{
    if (rate >= 2000) {
        idLsp1()
        idVUV()
        idExc1()
    }
    if (rate >= 3700) {
        idLsp2()
        idExc2(rate)
    }
}

Table 2.3.7 - Syntax of HVXCenhaFrame()

Syntax                              No. of bits   Mnemonic
HVXCenhaFrame()
{
    idLsp2()
    idExc2(4000)
}

Table 2.3.8 - Syntax of idLsp1()

Syntax                              No. of bits   Mnemonic
idLsp1()
{
    LSP1                            5             uimsbf
    LSP2                            7             uimsbf
    LSP3                            5             uimsbf
    LSP4                            1             uimsbf
}

Table 2.3.9 - Syntax of idLsp2()

Syntax                              No. of bits   Mnemonic
idLsp2()
{
    LSP5                            8             uimsbf
}

Table 2.3.10 - Syntax of idVUV()

Syntax                              No. of bits   Mnemonic
idVUV()
{
    VUV                             2             uimsbf
}

Table 2.3.11 - VUV (for fixed bit rate mode)

VUV   Description
0     Unvoiced Speech
1     Mixed Voiced Speech-1
2     Mixed Voiced Speech-2
3     Voiced Speech

Table 2.3.12 - Syntax of idExc1()

Syntax                                          No. of bits   Mnemonic
idExc1()
{
    if (VUV != 0) {
        Pitch                                   7             uimsbf
        SE_shape1                               4             uimsbf
        SE_shape2                               4             uimsbf
        SE_gain                                 5             uimsbf
    }
    else {
        for (sf_num = 0; sf_num < NUM_SUBF1; sf_num++) {
            VX_shape1[sf_num]                   6             uimsbf
            VX_gain1[sf_num]                    4             uimsbf
        }
    }
}
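The base-layer part of HVXCfixframe() consists of idLsp1(), idVUV() and idExc1(). It always occupies 40 bits, so it can be unpacked with a few shifts. The following C sketch makes three assumptions: the 40 bits are held MSB-first in the low bits of a uint64_t; NUM_SUBF1 = 2 (Table 2.3.4); and the unvoiced loop of idExc1() runs over NUM_SUBF1 subframes. The helper take() and the HvxcBaseFrame struct are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_SUBF1 2   /* Table 2.3.4 */

/* Decoded base-layer fields (hypothetical struct). */
typedef struct {
    int lsp[4];                                     /* LSP1..LSP4 */
    int vuv;                                        /* VUV, Table 2.3.11 */
    int pitch, se_shape1, se_shape2, se_gain;       /* VUV != 0 */
    int vx_shape1[NUM_SUBF1], vx_gain1[NUM_SUBF1];  /* VUV == 0 */
} HvxcBaseFrame;

/* Take the next n bits (MSB first) from a 40-bit frame and advance *pos. */
static int take(uint64_t frame, int *pos, int n)
{
    int shift = 40 - *pos - n;          /* bits that remain below this field */
    assert(n > 0 && shift >= 0);
    *pos += n;
    return (int)((frame >> shift) & ((UINT64_C(1) << n) - 1));
}

static void parse_base_frame(uint64_t frame, HvxcBaseFrame *f)
{
    static const int lsp_bits[4] = { 5, 7, 5, 1 };
    int pos = 0;
    for (int i = 0; i < 4; i++)
        f->lsp[i] = take(frame, &pos, lsp_bits[i]);   /* idLsp1() */
    f->vuv = take(frame, &pos, 2);                    /* idVUV()  */
    if (f->vuv != 0) {                                /* idExc1(), voiced/mixed */
        f->pitch     = take(frame, &pos, 7);
        f->se_shape1 = take(frame, &pos, 4);
        f->se_shape2 = take(frame, &pos, 4);
        f->se_gain   = take(frame, &pos, 5);
    } else {                                          /* idExc1(), unvoiced */
        for (int sf = 0; sf < NUM_SUBF1; sf++) {
            f->vx_shape1[sf] = take(frame, &pos, 6);
            f->vx_gain1[sf]  = take(frame, &pos, 4);
        }
    }
    assert(pos == 40);   /* both branches consume exactly 40 bits */
}
```

The final check holds because the voiced branch (7 + 4 + 4 + 5 bits) and the unvoiced branch (2 x (6 + 4) bits) both add 20 bits to the 20 bits of idLsp1() and idVUV().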

Table 2.3.13 - Syntax of idExc2(rate)

Syntax                                          No. of bits   Mnemonic
idExc2(rate)
{
    if (VUV != 0) {
        SE_shape3                               7             uimsbf
        SE_shape4                               10            uimsbf
        SE_shape5                               9             uimsbf
        if (rate >= 4000) {
            SE_shape6                           6             uimsbf
        }
    }
    else {
        for (sf_num = 0; sf_num < NUM_SUBF2 - 1; sf_num++) {
            VX_shape2[sf_num]                   5             uimsbf
            VX_gain2[sf_num]                    3             uimsbf
        }
        if (rate >= 4000) {
            VX_shape2[3]                        5             uimsbf
            VX_gain2[3]                         3             uimsbf
        }
    }
}

In scalable mode, idLsp1(), idVUV() and idExc1() are treated as the base layer, and idLsp2() and idExc2() are treated as the enhancement layer.
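Adding up the field widths of Tables 2.3.6 to 2.3.13 gives the size of each fixed-rate frame. The C sketch below does this bookkeeping; the function name is hypothetical. It uses NUM_SUBF1 = 2 and NUM_SUBF2 = 4 from Table 2.3.4. It also assumes that the unvoiced branch of idExc2() codes subframes 0 to 2 in its loop and codes subframe 3 only when rate >= 4000, which is what the explicit VX_shape2[3]/VX_gain2[3] fields imply.

```c
#include <assert.h>

#define NUM_SUBF1 2   /* Table 2.3.4 */
#define NUM_SUBF2 4   /* Table 2.3.4 */

/* Bits in one HVXCfixframe(rate). voiced is nonzero when VUV != 0. */
static int hvxc_fix_frame_bits(int rate, int voiced)
{
    int bits = 0;
    assert(rate == 2000 || rate == 3700 || rate == 4000);
    if (rate >= 2000) {
        bits += 5 + 7 + 5 + 1;                      /* idLsp1() */
        bits += 2;                                  /* idVUV()  */
        bits += voiced ? 7 + 4 + 4 + 5              /* idExc1(), voiced   */
                       : NUM_SUBF1 * (6 + 4);       /* idExc1(), unvoiced */
    }
    if (rate >= 3700) {
        bits += 8;                                  /* idLsp2() */
        if (voiced)                                 /* idExc2(rate), voiced */
            bits += 7 + 10 + 9 + (rate >= 4000 ? 6 : 0);
        else                                        /* idExc2(rate), unvoiced */
            bits += (NUM_SUBF2 - 1) * (5 + 3) + (rate >= 4000 ? 5 + 3 : 0);
    }
    return bits;
}
```

By this count, both frame types carry 40 bits at 2.0 kbit/s and 80 bits at 4.0 kbit/s. At 3.7 kbit/s a voiced frame carries 74 bits and an unvoiced frame 72. The enhancement part, HVXCenhaFrame() (idLsp2() plus idExc2(4000)), adds 40 bits per frame for either frame type, which is the 2 kbit/s enhancement layer described in 2.3.1.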

Table 2.3.14 - Syntax of HVXCvarframe()

Syntax                              No. of bits   Mnemonic
HVXCvarframe()
{
    idvarVUV()
    idvarLsp1()
    idvarExc1()
}

Table 2.3.15 - Syntax of idvarVUV()

Syntax                              No. of bits   Mnemonic
idvarVUV()
{
    VUV                             2             uimsbf
}


Table 2.3.16 - VUV (for variable bit rate mode)

VUV   Description
0     Unvoiced Speech
1     Background Noise
2     Mixed Voiced Speech
3     Voiced Speech

Table 2.3.17 - Syntax of idvarLsp1()

Syntax                              No. of bits   Mnemonic
idvarLsp1()
{
    if (VUV != 1) {
        LSP1                        5             uimsbf
        LSP2                        7             uimsbf
        LSP3                        5             uimsbf
        LSP4                        1             uimsbf
    }
}

Table 2.3.18 - Syntax of idvarExc1()

Syntax                                              No. of bits   Mnemonic
idvarExc1()
{
    if (VUV != 1) {
        if (VUV != 0) {
            Pitch                                   7             uimsbf
            SE_shape1                               4             uimsbf
            SE_shape2                               4             uimsbf
            SE_gain                                 5             uimsbf
        }
        else {
            for (sf_num = 0; sf_num < NUM_SUBF1; sf_num++) {
                VX_gain1[sf_num]                    4             uimsbf
            }
        }
    }
}
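In variable bit rate mode the frame size depends on the VUV class of Table 2.3.16. The C sketch below counts the bits of HVXCvarframe() for each class. It then converts a histogram of frame classes into an average bit rate at 50 frames per second (20 ms frames). The function names are hypothetical, and the unvoiced loop in idvarExc1() is assumed to run over NUM_SUBF1 = 2 subframes. The frame counts in the usage example are invented for illustration; they are not measured statistics.

```c
#include <assert.h>

#define NUM_SUBF1 2   /* Table 2.3.4 */

/* Bits in one HVXCvarframe() for a VUV value with the meanings of Table 2.3.16:
 * 0 unvoiced, 1 background noise, 2 mixed voiced, 3 voiced. */
static int hvxc_var_frame_bits(int vuv)
{
    int bits = 2;                                  /* idvarVUV() */
    assert(vuv >= 0 && vuv <= 3);
    if (vuv != 1) {                                /* background noise sends VUV only */
        bits += 5 + 7 + 5 + 1;                     /* idvarLsp1() */
        bits += (vuv != 0) ? 7 + 4 + 4 + 5         /* idvarExc1(), voiced/mixed */
                           : NUM_SUBF1 * 4;        /* idvarExc1(), unvoiced: gains only */
    }
    return bits;
}

/* Average bit rate in bit/s, given counts[vuv] frames of each class
 * at 50 frames per second (20 ms frames). */
static double hvxc_var_avg_rate(const long counts[4])
{
    long frames = 0, bits = 0;
    for (int v = 0; v < 4; v++) {
        frames += counts[v];
        bits   += counts[v] * hvxc_var_frame_bits(v);
    }
    assert(frames > 0);
    return 50.0 * (double)bits / (double)frames;
}
```

Consider a hypothetical mix of 20 unvoiced, 30 background-noise, 10 mixed-voiced and 40 voiced frames, costing 28, 2, 40 and 40 bits each. It averages 1310 bit/s, which lies within the 1.2-1.7 kbit/s range quoted in 2.1.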

2.4 Bitstream semantics

2.4.1 Decoder configuration (HvxcSpecificConfig)

HVXCvarMode: A 1-bit flag indicating the HVXC variable rate mode (Tables 2.3.1 and 2.3.2).

HVXCrateMode: A 2-bit field indicating the HVXC bit rate mode (Tables 2.3.1 and 2.3.3).

extensionFlag: A flag indicating the presence of MPEG-4 Version 2 data (Table 2.3.1, for future use).

2.4.2 Bitstream frame (alPduPayload)

LSP1: This 5-bit field represents the index of the first stage LSP quantization (base layer, Tables 2.3.8 and 2.3.17).

LSP2: This 7-bit field represents the index of the second stage LSP quantization (base layer, Tables 2.3.8 and 2.3.17).


LSP3: This 5-bit field represents the index of the second stage LSP quantization (base layer, Tables 2.3.8 and 2.3.17).

LSP4: This 1-bit field is a flag indicating whether interframe prediction is used in the second stage LSP quantization (base layer, Tables 2.3.8 and 2.3.17).

LSP5: This 8-bit field represents the index of the third stage LSP quantization (enhancement layer, Table 2.3.9).

VUV: This 2-bit field represents the V/UV decision mode. Note that the meaning of this field depends on the HVXC variable rate mode (Tables 2.3.10 and 2.3.15; values in Tables 2.3.11 and 2.3.16).

Pitch: This 7-bit field represents the index of the linearly quantized pitch lag, ranging from 20 to 147 samples (Tables 2.3.12 and 2.3.18).

SE_shape1: This 4-bit field represents the index of the spectral envelope shape (base layer, Tables 2.3.12 and 2.3.18).

SE_shape2: This 4-bit field represents the index of the spectral envelope shape (base layer, Tables 2.3.12 and 2.3.18).

SE_gain: This 5-bit field represents the index of the spectral envelope gain (base layer, Tables 2.3.12 and 2.3.18).

VX_shape1[sf_num]: This 6-bit field represents the index of the VXC shape of the sf_num-th subframe (base layer, Table 2.3.12).

VX_gain1[sf_num]: This 4-bit field represents the index of the VXC gain of the sf_num-th subframe (base layer, Tables 2.3.12 and 2.3.18).

SE_shape3: This 7-bit field represents the index of the spectral envelope shape (enhancement layer, Table 2.3.13).

SE_shape4: This 10-bit field represents the index of the spectral envelope shape (enhancement layer, Table 2.3.13).

SE_shape5: This 9-bit field represents the index of the spectral envelope shape (enhancement layer, Table 2.3.13).

SE_shape6: This 6-bit field represents the index of the spectral envelope shape (enhancement layer, Table 2.3.13).

VX_shape2[sf_num]: This 5-bit field represents the index of the VXC shape of the sf_num-th subframe (enhancement layer, Table 2.3.13).

VX_gain2[sf_num]: This 3-bit field represents the index of the VXC gain of the sf_num-th subframe (enhancement layer, Table 2.3.13).
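The Pitch semantics above suggest a simple mapping: 7 bits give 128 indices, exactly one per integer lag from 20 to 147. The C sketch below assumes that mapping (lag = 20 + index) and an 8 kHz sampling rate, and converts the lag to a fundamental frequency. Both assumptions and the function names are illustrative; this is not the normative decoder.

```c
#include <assert.h>

#define HVXC_FS_HZ 8000.0   /* assumed: "2.5 ms (20 samples)" in 2.5.1.2 implies 8 kHz */

/* Assumed dequantization: one index step per sample of lag. */
static int hvxc_pitch_lag(int index)
{
    assert(index >= 0 && index <= 127);   /* 7-bit field */
    return 20 + index;
}

/* Fundamental frequency in Hz for a pitch lag given in samples. */
static double hvxc_f0_hz(int lag)
{
    assert(lag > 0);
    return HVXC_FS_HZ / lag;
}
```

Under these assumptions the coded pitch range covers fundamental frequencies from about 54 Hz (lag 147) to 400 Hz (lag 20).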

2.5 HVXC decoder tools

2.5.1 Overview

HVXC provides an efficient coding scheme for Linear Predictive Coding (LPC) residuals based on harmonic and stochastic vector representation. Vector quantization (VQ) of the spectral envelope of LPC residuals with a weighted distortion measure is used when the signal is voiced. Vector excitation coding (VXC) is used when the signal is unvoiced. The major algorithmic features are:

• Weighted VQ of variable-dimension spectral vectors
• A fast harmonic synthesis algorithm based on the IFFT
• Interpolation of coder parameters for speed/pitch control

Its functional features include:

• A total algorithmic delay as low as 33.5 ms
• A 2.0-4.0 kbit/s scalable mode
• Variable bit rate coding at rates below 2.0 kbit/s

2.5.1.1 Framing structure and block diagram of the decoder

Figure 2.5.1 shows the overall structure of the HVXC decoder. The HVXC decoder tools allow decoding of speech signals at 2 kbit/s and higher, up to 4 kbit/s. They also allow decoding in variable bit rate mode at an average bit rate of around 1.2-1.7 kbit/s. The basic decoding process consists of four steps:

• dequantization of parameters;
• generation of excitation signals for voiced frames by sinusoidal (harmonic) synthesis and noise component addition;
• generation of excitation signals for unvoiced frames by codebook look-up;
• LPC synthesis.

Spectral postfiltering is used to enhance the quality of the synthesized speech.

Figure 2.5.1 - Block diagram of the HVXC decoder (blocks: LSP decoder with inverse VQ and interpolation of LSP; harmonic VQ decoder with inverse VQ of the spectral envelope; time domain decoder with stochastic codebook (shape) and gain; parameter interpolation for speed control, driven by the speed control factor spd; voiced component synthesizer with harmonic synthesis driven by pitch, V/UV and the pitch control factor pch_mod, followed by an LPC synthesis filter and postfilter; unvoiced component synthesizer with windowing, LPC synthesis filter and postfilter; the two components are added to form the output speech)

2.5.1.2 Delay mode

The HVXC encoder and decoder each support a low-delay and a normal-delay mode. Any combination of encoder and decoder delay modes can be used at 2.0-4.0 kbit/s with the scalable scheme. The figure below shows the framing structure of each delay mode. The frame length is 20 ms in all delay modes. For example, using the low-delay encoder mode together with the low-delay decoder mode results in a total coder/decoder delay of 33.5 ms.

In the encoder, the algorithmic delay can be selected to be either 26 ms or 46 ms. When the 46 ms delay is selected, one frame of look-ahead is used for pitch detection. When the 26 ms delay is selected, only the current frame is used for pitch detection. In both cases the syntax and all quantizers are the same, and the bitstreams are compatible.

In the decoder, the algorithmic delay can be selected to be either 10 ms (normal delay mode) or 7.5 ms (low delay mode). When the 7.5 ms delay is selected, the decoder frame interval is shifted by 2.5 ms (20 samples) relative to the 10 ms delay mode. In this case the excitation generation and LPC synthesis phases are shifted by 2.5 ms. Again, in both cases the syntax and all quantizers are the same, and the bitstreams are compatible.
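Each of the four total delays listed in 2.1 is the sum of one encoder delay and one decoder delay. The C sketch below enumerates these sums in units of 0.1 ms, so that 7.5 ms stays an integer. It also recovers the 20-sample frame shift of the low-delay decoder mode, assuming the 8 kHz rate implied by "2.5 ms (20 samples)". The table and function names are hypothetical.

```c
#include <assert.h>

/* Algorithmic delays in units of 0.1 ms (so 7.5 ms is an integer). */
static const int enc_delay_tenths[2] = { 260, 460 };   /* encoder: 26 ms, 46 ms */
static const int dec_delay_tenths[2] = { 75, 100 };    /* decoder: 7.5 ms (low), 10 ms (normal) */

/* Total coder/decoder delay for encoder mode e and decoder mode d (0 = low delay). */
static int hvxc_total_delay_tenths(int e, int d)
{
    assert(e >= 0 && e < 2 && d >= 0 && d < 2);
    return enc_delay_tenths[e] + dec_delay_tenths[d];
}

/* Frame shift of the low-delay decoder mode in samples, assuming 8 kHz sampling. */
static int hvxc_low_delay_shift_samples(void)
{
    int shift_tenths = dec_delay_tenths[1] - dec_delay_tenths[0];   /* 25 = 2.5 ms */
    return shift_tenths * 8000 / 10000;                              /* 0.1 ms units -> samples */
}
```

The four combinations give 335, 360, 535 and 560 tenths of a millisecond, i.e. 33.5, 36, 53.5 and 56 ms.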

In summary, any independent choice of encoder/decoder delay from the following combination is possible:

Encoder delay: 26 m

...

INTERNATIONAL STANDARD ISO/IEC 14496-3

First edition 1999-12-15

Information technology — Coding of

audio-visual objects —

Part 3:

Audio

Technologies de l'information — Codage des objets audiovisuels —

Partie 3: Codage audio

Reference number ISO/IEC 14496-3:1999(E)

© ISO/IEC 1999



© ISO/IEC 1999

All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized in any form or by any means, electronic

or mechanical, including photocopying and microfilm, without permission in writing from either ISO at the address below or ISO's member body

in the country of the requester.

ISO copyright office

Case postale 56 • CH-1211 Geneva 20

Tel. + 41 22 749 01 11

Fax + 41 22 734 10 79

E-mail copyright@iso.ch

Web www.iso.ch

Printed in Switzerland


Foreword

ISO (the International Organization for Standardization) and IEC (the International Electrotechnical Commission)

form the specialized system for worldwide standardization. National bodies that are members of ISO or IEC

participate in the development of International Standards through technical committees established by the

respective organization to deal with particular fields of technical activity. ISO and IEC technical committees

collaborate in fields of mutual interest. Other international organizations, governmental and non-governmental, in

liaison with ISO and IEC, also take part in the work.

In the field of information technology, ISO and IEC have established a joint technical committee, ISO/IEC JTC 1.

Draft International Standards adopted by the joint technical committee are circulated to national bodies for voting.

Publication as an International Standard requires approval by at least 75 % of the national bodies casting a vote.

Attention is drawn to the possibility that some of the elements of this part of ISO/IEC 14496 may be the subject of

patent rights. ISO and IEC shall not be held responsible for identifying any or all such patent rights.

International Standard ISO/IEC 14496-3 was prepared by Joint Technical Committee ISO/IEC JTC 1, Information

technology, Subcommittee SC 29, Coding of audio, picture, multimedia and hypermedia information.

ISO/IEC 14496 consists of the following parts, under the general title Information technology — Coding of audio-visual objects:

• Part 1: Systems
• Part 2: Visual
• Part 3: Audio
• Part 4: Conformance testing
• Part 5: Reference testing
• Part 6: Delivery Multimedia Integration Framework (DMIF)

Annexes 2.D, 3.C, 4.A and 5.A form a normative part of this part of ISO/IEC 14496. Annexes 1.A, 1.B, 2.A to 2.C, 3.A, 3.B, 3.D to 3.F, 4.B and 5.B to 5.G are for information only.

Due to its technical nature, this part of ISO/IEC 14496 requires a special format as several standalone electronic

files and, consequently, does not conform to some of the requirements of the ISO/IEC Directives, Part 3.


Information technology — Coding of audio-visual objects —

Part 3: Audio

Subpart 1: Main

Structure of this part of ISO/IEC 14496:

This part of ISO/IEC 14496 comprises six subparts:

Subpart 1: Main

Subpart 2: Speech coding - HVXC

Subpart 3: Speech coding - CELP

Subpart 4: General Audio coding (GA)

Subpart 5: Structured audio

Subpart 6: Text to speech interface

For reasons of manageability of large documents, this part of ISO/IEC 14496 is divided into six files, corresponding

to the six subparts of the standard:

1. a025035e.pdf contains Subpart 1.

2. b025035e.pdf contains Subpart 2.

3. c025035e.pdf contains Subpart 3.

4. d025035e.pdf contains Subpart 4.

5. e025035e.pdf contains Subpart 5.

6. f025035e.pdf contains Subpart 6.


Contents for Subpart 1

1.1 SCOPE
1.1.1 Overview of MPEG-4 Audio
1.1.2 New concepts in MPEG-4 Audio
1.1.3 MPEG-4 Audio capabilities
1.1.3.1 Overview of capabilities
1.1.3.2 MPEG-4 speech coding tools
1.1.3.3 MPEG-4 general audio coding tools
1.1.3.4 MPEG-4 Audio synthesis tools
1.1.3.5 MPEG-4 Audio composition tools
1.1.3.6 MPEG-4 Audio scalability tools
1.2 NORMATIVE REFERENCES
1.3 TERMS AND DEFINITIONS
1.4 SYMBOLS AND ABBREVIATIONS
1.4.1 Arithmetic operators
1.4.2 Logical operators
1.4.3 Relational operators
1.4.4 Bitwise operators
1.4.5 Assignment
1.4.6 Mnemonics
1.4.7 Constants
1.4.8 Method of describing bitstream syntax
1.5 TECHNICAL OVERVIEW
1.5.1 MPEG-4 Audio Object Types
1.5.1.1 Audio Object Type Definition
1.5.1.2 Description
1.5.2 Audio Profiles and Levels
1.5.2.1 Profiles
1.5.2.2 Complexity Units
1.5.2.3 Levels within the Profiles
1.6 INTERFACE TO MPEG-4 SYSTEMS
1.6.1 Introduction
1.6.2 Syntax
1.6.2.1 Audio DecoderSpecificInfo
1.6.2.2 HvxcSpecificConfig
1.6.2.3 CelpSpecificConfig
1.6.2.4 GASpecificConfig
1.6.2.5 StructuredAudioSpecificConfig
1.6.2.6 TTSSpecificConfig
1.6.2.7 Payloads
1.6.3 Semantics
1.6.3.1 AudioObjectType
1.6.3.2 samplingFrequency
1.6.3.3 samplingFrequencyIndex
1.6.3.4 channelConfiguration
ANNEX 1.A (INFORMATIVE) AUDIO INTERCHANGE FORMATS
1.A.1 Introduction
1.A.2 Interchange format streams
1.A.2.1 MPEG-2 AAC Audio_Data_Interchange_Format, ADIF
1.A.2.2 Audio_Data_Transport_Stream frame, ADTS
1.A.2.2.4 MPEG-4 Audio Transport Stream (MATS)
1.A.2 Decoding of interface formats
1.A.2.1 Audio_Data_Interchange_Format (ADIF)
1.A.2.2 Audio_Data_Transport_Stream (ADTS)
1.A.2.3 MPEG-4 Audio Transport Stream (MATS)
ANNEX 1.B (INFORMATIVE) LIST OF PATENT HOLDERS

Subpart 1: Main

1.1 Scope

1.1.1 Overview of MPEG-4 Audio

This part of ISO/IEC 14496 (MPEG-4 Audio) is a new kind of audio standard that integrates many different types of

audio coding: natural sound with synthetic sound, low bitrate delivery with high-quality delivery, speech with music,

complex soundtracks with simple ones, and traditional content with interactive and virtual-reality content. By

standardizing individually sophisticated coding tools as well as a novel, flexible framework for audio

synchronization, mixing, and downloaded post-production, the developers of the MPEG-4 Audio standard have

created new technology for a new, interactive world of digital audio.

MPEG-4, unlike previous audio standards created by ISO/IEC and other groups, does not target a single

application such as real-time telephony or high-quality audio compression. Rather, MPEG-4 Audio is a standard

that applies to every application requiring the use of advanced sound compression, synthesis, manipulation, or

playback. The subparts that follow specify the state-of-the-art coding tools in several domains; however, MPEG-4

Audio is more than just the sum of its parts. As the tools described here are integrated with the rest of the MPEG-4 standard, they enable exciting new possibilities for object-based audio coding, interactive presentation, dynamic soundtracks and other new kinds of media.

Since a single set of tools is used to cover the needs of a broad range of applications, interoperability is a natural

feature of systems that depend on the MPEG-4 Audio standard. A system that uses a particular coder—for

example, a real-time voice communication system making use of the MPEG-4 speech coding toolset—can easily

share data and development tools with other systems, even in different domains, that use the same tool—for

example, a voicemail indexing and retrieval system making use of MPEG-4 speech coding. A multimedia terminal

that can decode the Main Profile of MPEG-4 Audio has audio capabilities that cover the entire spectrum of audio

functionality available today and in the future.

The remainder of this clause gives a more detailed overview of the capabilities and functioning of MPEG-4 Audio.

First, a discussion of concepts that have changed since the MPEG-2 audio standards is presented; then, the

MPEG-4 Audio toolset is outlined.

1.1.2 New concepts in MPEG-4 Audio

Many concepts in MPEG-4 Audio are different from those in previous MPEG Audio standards. For the benefit of readers who are familiar with MPEG-1, MPEG-2, and MPEG-AAC, we provide a brief overview here.

• MPEG-4 has no standard for transport. In all of the MPEG-4 tools for audio and visual coding, the coding standard ends at the point of constructing a sequence of access units that contain the compressed data. The MPEG-4 Systems (ISO/IEC 14496-1) specification describes how to convert the individually coded objects into a bitstream that contains a number of multiplexed sub-streams.

There is no standard mechanism for transport of this stream over a channel. The applications that can make use of MPEG-4 technology are so broad that their delivery requirements are too diverse to be characterized easily by a single solution. Rather, what is standardized is an interface (the Delivery Multimedia Integration Framework, or DMIF, specified in ISO/IEC 14496-6). DMIF describes the capabilities of a transport layer and the communication between transport, multiplex, and demultiplex functions in encoders and decoders.

The use of DMIF and the MPEG-4 Systems bitstream specification allows transmission functions that are much

more sophisticated than are possible with previous MPEG standards.

For applications which do not require sophisticated transport functionality, object-based coding, synchronization with other media, or other functions provided by MPEG-4 Systems, a private, non-normative transport may be used to deliver a single MPEG-4 Audio stream. An example private transport for this purpose is given in informative Annex 1.A of Subpart 1.

� MPEG-4 Audio encourages low-bitrate coding. Previous MPEG Audio standards have focused primarily on

transparent (undetectable) or nearly transparent coding of high-quality audio at whatever bitrate was required

to provide it. MPEG-4 provides new and improved tools for this purpose, but also standardizes (and has

tested) tools that can be used for transmitting audio at the low bitrates suitable for Internet, digital radio, or

other bandwidth-limited delivery. The tools specified in MPEG-4 are the state-of-the-art tools available for low-

bitrate coding of speech and other audio.

• MPEG-4 is an object-based coding standard with multiple tools. Previous MPEG Audio standards provided a single toolset, with different configurations of that toolset specified for use in various applications. MPEG-4 provides several toolsets that have no particular relationship to each other, each with a different target function. The Profiles of MPEG-4 Audio (subclause 1.5.2) specify which of these tools are used together for various applications.

Further, in previous MPEG standards, a single (perhaps multi-channel or multi-language) piece of content was

all that was transmitted. In MPEG-4, by contrast, the concept of a soundtrack is much more flexible. Multiple

tools may be used to transmit several audio objects; when using multiple tools together, an audio composition

system is used to create a single soundtrack from the audio substreams. User interaction, terminal capability,

and speaker configuration may be used when determining how to produce a single soundtrack from the

component objects. This capability gives MPEG-4 significant advantages in quality and flexibility over previous audio standards.

• MPEG-4 provides capabilities for synthetic sound. In natural sound coding, an existing sound is

compressed by a server, transmitted and decompressed at the receiver. This type of coding is the subject of

many existing standards for sound compression. MPEG-4 also standardizes a novel paradigm in which

synthetic sound descriptions, including synthetic speech and synthetic music, are transmitted and then

synthesized into sound at the receiver. Such capabilities open up new areas of very-low-bitrate but still very-

high-quality coding.

As with previous MPEG standards, MPEG-4 does not standardize methods for encoding sound. Thus, content

authors are left to their own decisions for the best method of creating bitstreams. At the present time, it is an open

problem how to automatically convert natural sound into synthetic or multi-object descriptions; therefore, most

immediate solutions will involve hand-authoring the content stream in some way. This process is similar to current

schemes for MIDI-based and multi-channel mixdown authoring of soundtracks.

1.1.3 MPEG-4 Audio capabilities

1.1.3.1 Overview of capabilities

The MPEG-4 Audio tools can be broadly organized into several categories:

1. Speech tools for the transmission and decoding of synthetic and natural speech

2. Audio tools for the transmission and decoding of recorded music and other audio soundtracks

3. Synthesis tools for very low bitrate description and transmission, and terminal-side synthesis, of synthetic music and other sounds

4. Composition tools for object-based coding, interactive functionality, and audiovisual synchronization

5. Scalability tools for the creation of bitstreams that can be transmitted, without recoding, at several different bitrates

Each of these types of tools will be described in more detail in the following subclauses.

1.1.3.2 MPEG-4 speech coding tools

1.1.3.2.1 Introduction

Two types of speech coding tools are provided in MPEG-4. The natural speech tools allow the compression, transmission, and decoding of human speech for use in telephony, personal communication, and surveillance applications. The synthetic speech tool provides an interface to text-to-speech synthesis systems; synthetic speech offers very-low-bitrate operation and a built-in connection with facial animation for use in low-bitrate video teleconferencing applications. Each of these tools is discussed below.

1.1.3.2.2 Natural speech coding

The MPEG-4 speech coding toolset covers the compression and decoding of natural speech sound at bitrates ranging from 2 to 24 kbit/s. When variable bitrate coding is allowed, coding at less than 2 kbit/s, such as at an average bitrate of 1.2 kbit/s, is also supported. Two basic speech coding techniques are used: one is a parametric speech coding algorithm, HVXC (Harmonic Vector eXcitation Coding), for very low bitrates; the other is a CELP (Code Excited Linear Prediction) coding technique. The MPEG-4 speech coder targets applications ranging from mobile and satellite communications to Internet telephony, packaged media, and speech databases. It meets a wide range of requirements covering bitrates, functionality, and sound quality, and is specified in subparts 2 and 3.


MPEG-4 HVXC operates at fixed bitrates between 2.0 kbit/s and 4.0 kbit/s, using a bitrate scalability technique. It also operates at lower bitrates, typically 1.2-1.7 kbit/s, in variable bitrate mode. HVXC provides communications-quality to near-toll-quality speech in the 100-3800 Hz band at an 8 kHz sampling rate. HVXC also allows the speed and pitch to be changed independently during decoding, a powerful capability for fast access to speech databases.
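The HVXC operating points quoted above can be summarized in a small lookup. This is an illustrative sketch only: the function `hvxc_mode` is hypothetical, and the variable-rate bounds are the typical averages given in the text rather than normative limits.

```python
from typing import Optional

# Ranges quoted in the text (kbit/s). The variable-rate figures are the
# typical averages mentioned there, treated here as bounds (assumption).
HVXC_FIXED_KBPS = (2.0, 4.0)
HVXC_VBR_TYPICAL_KBPS = (1.2, 1.7)


def hvxc_mode(bitrate_kbps: float) -> Optional[str]:
    """Return "fixed" or "variable" if the bitrate lies in a quoted HVXC range, else None."""
    lo, hi = HVXC_FIXED_KBPS
    if lo <= bitrate_kbps <= hi:
        return "fixed"
    lo, hi = HVXC_VBR_TYPICAL_KBPS
    if lo <= bitrate_kbps <= hi:
        return "variable"
    return None  # no HVXC figure is quoted for this bitrate


for rate in (1.2, 1.8, 2.0, 4.0, 6.0):
    print(f"{rate} kbit/s -> {hvxc_mode(rate)}")
```

A rate such as 1.8 kbit/s returns None here, which only reflects that the text quotes no HVXC figure for it, not that the coder cannot produce it.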

MPEG-4 CELP is a well-known coding algorithm extended with new functionality. Conventional CELP coders offer compression at a single bitrate and are optimized for specific applications. Compression is one of the functions provided by MPEG-4 CELP, but MPEG-4 also enables one basic coder to be used in multiple applications. It provides scalability in bitrate and bandwidth, as well as the ability to generate bitstreams at arbitrary bitrates. The MPEG-4 CELP coder supports two sampling rates, 8 kHz and 16 kHz. The associated bandwidths are 100–3800 Hz for 8 kHz sampling and 50–7000 Hz for 16 kHz sampling.
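As a quick sanity check on these figures, the sketch below tabulates the two CELP sampling rates with their stated bandwidths and confirms that each upper band edge lies below the Nyquist frequency (half the sampling rate). The names `CELP_BANDWIDTH_HZ` and `celp_bandwidth` are hypothetical.

```python
from typing import Dict, Tuple

# Sampling rate (Hz) -> (lower, upper) band edge in Hz, as stated in the text.
CELP_BANDWIDTH_HZ: Dict[int, Tuple[int, int]] = {
    8000: (100, 3800),
    16000: (50, 7000),
}


def celp_bandwidth(sample_rate_hz: int) -> Tuple[int, int]:
    """Return the audio band for a supported CELP sampling rate."""
    if sample_rate_hz not in CELP_BANDWIDTH_HZ:
        raise ValueError(f"CELP supports 8000 or 16000 Hz, not {sample_rate_hz}")
    return CELP_BANDWIDTH_HZ[sample_rate_hz]


for fs, (low, high) in CELP_BANDWIDTH_HZ.items():
    nyquist = fs // 2
    # Content above fs/2 would alias, so the band must stay below it.
    assert high < nyquist
    print(f"{fs} Hz sampling: {low}-{high} Hz, {nyquist - high} Hz below Nyquist")
```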

MPEG has conducted extensive verification testing under realistic listening conditions to demonstrate the efficacy of the speech coding toolset.

1.1.3.2.3 Text-to-speech interface

Text-to-speech (TTS) is becoming a common media type and plays an important role in various multimedia application areas. For instance, by using TTS functionality, multimedia content with narration can easily be created without recording natural speech. Before MPEG-4, however, a multimedia content provider had no easy way to give instructions to an unknown TTS system. MPEG-4 standardizes a single common interface for TTS systems. This interface allows speech information to be transmitted in the International Phonetic Alphabet (IPA) or in a textual (written) form of any language. It is specified in subpart 6.

The MPEG-4 TTS package, the Hybrid/Multi-Level Scalable TTS Interface, can be considered a superset of the conventional TTS framework. This extended TTS Interface can use prosodic information taken from natural speech in addition to input text, and can thus generate much higher-quality synthetic speech. The interface and its bitstream format are strongly scalable in terms of this added information; for example, if some prosodic parameters are not available, a decoder can generate the missing parameters by rule. Normative algorithms for speech synthesis and text-to-phoneme translation are not specified in MPEG-4, but to meet the goal that underlies the MPEG-4 TTS Interface, a decoder should make full use of all the information provided, according to the level of the user’s requirements.
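To illustrate the scalability described above, the sketch below keeps whatever prosody a bitstream carries and fills any missing parameter by rule. Everything here is hypothetical: the `Phoneme` fields, the constant default values, and the rule itself are placeholders, since MPEG-4 does not specify normative synthesis algorithms.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Phoneme:
    symbol: str                          # phoneme label, e.g. an IPA symbol
    duration_ms: Optional[float] = None  # None when not transmitted
    pitch_hz: Optional[float] = None     # None when not transmitted


# Placeholder rule values (hypothetical, not from the standard).
DEFAULT_DURATION_MS = 80.0
DEFAULT_PITCH_HZ = 120.0


def fill_prosody(phonemes: List[Phoneme]) -> List[Phoneme]:
    """Use transmitted prosody where present; generate missing values by rule."""
    return [
        Phoneme(
            symbol=p.symbol,
            duration_ms=p.duration_ms if p.duration_ms is not None else DEFAULT_DURATION_MS,
            pitch_hz=p.pitch_hz if p.pitch_hz is not None else DEFAULT_PITCH_HZ,
        )
        for p in phonemes
    ]


received = [Phoneme("h", duration_ms=60.0), Phoneme("a", pitch_hz=140.0), Phoneme("i")]
for p in fill_prosody(received):
    print(p)
```

A real decoder could use far richer rules; the point of the sketch is only that absent parameters are replaced rather than causing decoding to fail.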

In addition to an interface to text-to-speech synthesis systems, MPEG-4 specifies a joint coding method for phonemic information, facial animation (FA) parameters, and other animation parameters (AP). Using this technique, a single bitstream may be used to control both the Text-to-Speech Interface and the Facial Animation visual object decoder (see ISO/IEC 14496-2 Annex C). The functionality of this extended TTS thus ranges from conventional TTS to natural speech coding, and its application areas range from simple TTS to audio presentation with TTS and motion-picture dubbing with TTS.

1.1.3.3 MPEG-4 general audio coding tools

MPEG-4 standardizes the coding of natural audio at bitrates ranging from 6 kbit/s up to several hundred kbit/s per audio channel for mono, two-channel stereo, and multichannel stereo signals. General high-quality compression is provided within the MPEG-4 tool set by the MPEG-2 AAC standard (ISO/IEC 13818-7), with certain improvements. At 64 kbit/s per channel and higher, this coder has been found in verification testing under rigorous conditions to meet the criterion of “indistinguishable quality” as defined by the European Broadcasting Union.

Subpart 4 of MPEG-4 specifies the AAC tool set as part of the General Audio (GA) coder. This coding technique uses a perceptual filterbank, a sophisticated masking model, noise-shaping techniques, channel coupling, noiseless coding, and bit allocation to provide maximum compression while maintaining the highest possible quality. Psychoacoustic coding standards developed by MPEG have represented the state of the art in this technology for nearly 10 years; MPEG-4 General Audio coding continues this tradition.

For bitrates from 6 kbit/s up to 64 kbit/s per channel, MPEG-4 provides extensions to AAC, as well as the TwinVQ tools, that allow the content author to achieve the highest quality by choosing the tool according to the bitrate. Furthermore, various bitrate scalability options are available within the GA coder (see subclause 1.1.3.6). The low-bitrate techniques and scalability modes provided with this tool set have also been verified in formal tests by MPEG.
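Because the figures above are quoted per channel, applying them to a multichannel programme means dividing the total bitrate by the channel count. The hypothetical helper `ga_regime` below does that and reports which regime from this subclause applies; counting exactly 64 kbit/s per channel in the upper regime follows the text's "and higher" wording.

```python
def ga_regime(total_kbps: float, channels: int) -> str:
    """Classify a General Audio configuration by its per-channel bitrate."""
    if channels < 1:
        raise ValueError("channels must be at least 1")
    per_channel = total_kbps / channels
    if per_channel < 6:
        return "below the 6 kbit/s per channel quoted for GA coding"
    if per_channel < 64:
        return "low-bitrate range: AAC extensions and TwinVQ"
    return "AAC range verified as 'indistinguishable quality'"


# Example configurations: (total kbit/s, channels)
for total, ch in [(4, 1), (48, 2), (128, 2), (320, 5)]:
    print(f"{total} kbit/s over {ch} ch = {total / ch:g} kbit/s/ch: {ga_regime(total, ch)}")
```

For instance, a five-channel programme needs 5 × 64 = 320 kbit/s in total to reach the per-channel rate at which the verification tests were reported.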

1.1.3.4 MPEG-4 Audio synthesis tools

The MPEG-4 toolset that provides general audio synthesis capability is called MPEG-4 Structured Audio; it is described in subpart 5 of ISO/IEC 14496-3. (There is also a tool for the transmission of synthetic speech; it is


described above in subclause 1.1.3.2.3 and in subpart 6.) MPEG-4 Structured Audio (the SA coder) provides very general capabilities for the description of synthetic sound and for the normative creation of synthetic sound in the decoding terminal. Using these tools, high-quality stereo sound can be transmitted at bitrates from 0 kbit/s (no continuous cost) to 2-3 kbit/s for extremely expressive sound.