ISO/IEC NP 24029-2

(Main)Artificial intelligence (AI) — Assessment of the robustness of neural networks — Part 2: Methodology for the use of formal methods

Artificial intelligence (AI) — Assessment of the robustness of neural networks — Part 2: Methodology for the use of formal methods

Titre manque — Partie 2: Titre manque

General Information

Standards Content (Sample)

DRAFT INTERNATIONAL STANDARD

ISO/IEC DIS 24029-2

ISO/IEC JTC 1/SC 42 Secretariat: ANSI

Voting begins on: Voting terminates on:

2022-07-12 2022-10-04

Artificial intelligence (AI) — Assessment of the robustness

of neural networks —

Part 2:

Methodology for the use of formal methods

ICS: 35.020

THIS DOCUMENT IS A DRAFT CIRCULATED

FOR COMMENT AND APPROVAL. IT IS

THEREFORE SUBJECT TO CHANGE AND MAY

This document is circulated as received from the committee secretariat.

NOT BE REFERRED TO AS AN INTERNATIONAL

STANDARD UNTIL PUBLISHED AS SUCH.

IN ADDITION TO THEIR EVALUATION AS

BEING ACCEPTABLE FOR INDUSTRIAL,

TECHNOLOGICAL, COMMERCIAL AND

USER PURPOSES, DRAFT INTERNATIONAL

STANDARDS MAY ON OCCASION HAVE TO

BE CONSIDERED IN THE LIGHT OF THEIR

POTENTIAL TO BECOME STANDARDS TO

WHICH REFERENCE MAY BE MADE IN

Reference number

NATIONAL REGULATIONS.

ISO/IEC DIS 24029-2:2022(E)

RECIPIENTS OF THIS DRAFT ARE INVITED

TO SUBMIT, WITH THEIR COMMENTS,

NOTIFICATION OF ANY RELEVANT PATENT

RIGHTS OF WHICH THEY ARE AWARE AND TO

PROVIDE SUPPORTING DOCUMENTATION. © ISO/IEC 2022

---------------------- Page: 1 ----------------------

ISO/IEC DIS 24029-2:2022(E)

DRAFT INTERNATIONAL STANDARD

ISO/IEC DIS 24029-2

ISO/IEC JTC 1/SC 42 Secretariat: ANSI

Voting begins on: Voting terminates on:

Artificial intelligence (AI) — Assessment of the robustness

of neural networks —

Part 2:

Methodology for the use of formal methods

ICS: 35.020

COPYRIGHT PROTECTED DOCUMENT

THIS DOCUMENT IS A DRAFT CIRCULATED

FOR COMMENT AND APPROVAL. IT IS

© ISO/IEC 2022

THEREFORE SUBJECT TO CHANGE AND MAY

This document is circulated as received from the committee secretariat.

All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may

NOT BE REFERRED TO AS AN INTERNATIONALbe reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on STANDARD UNTIL PUBLISHED AS SUCH.

the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below

IN ADDITION TO THEIR EVALUATION ASor ISO’s member body in the country of the requester. BEING ACCEPTABLE FOR INDUSTRIAL,

TECHNOLOGICAL, COMMERCIAL ANDISO copyright office

USER PURPOSES, DRAFT INTERNATIONAL

CP 401 • Ch. de Blandonnet 8

STANDARDS MAY ON OCCASION HAVE TO

BE CONSIDERED IN THE LIGHT OF THEIR

CH-1214 Vernier, Geneva

POTENTIAL TO BECOME STANDARDS TO

Phone: +41 22 749 01 11

WHICH REFERENCE MAY BE MADE IN

Reference number

Email: copyright@iso.org

NATIONAL REGULATIONS.

Website: www.iso.org ISO/IEC DIS 24029-2:2022(E)

RECIPIENTS OF THIS DRAFT ARE INVITED

Published in Switzerland

TO SUBMIT, WITH THEIR COMMENTS,

NOTIFICATION OF ANY RELEVANT PATENT

RIGHTS OF WHICH THEY ARE AWARE AND TO

© ISO/IEC 2022 – All rights reserved

PROVIDE SUPPORTING DOCUMENTATION. © ISO/IEC 2022

---------------------- Page: 2 ----------------------

ISO/IEC 24029-2:2022

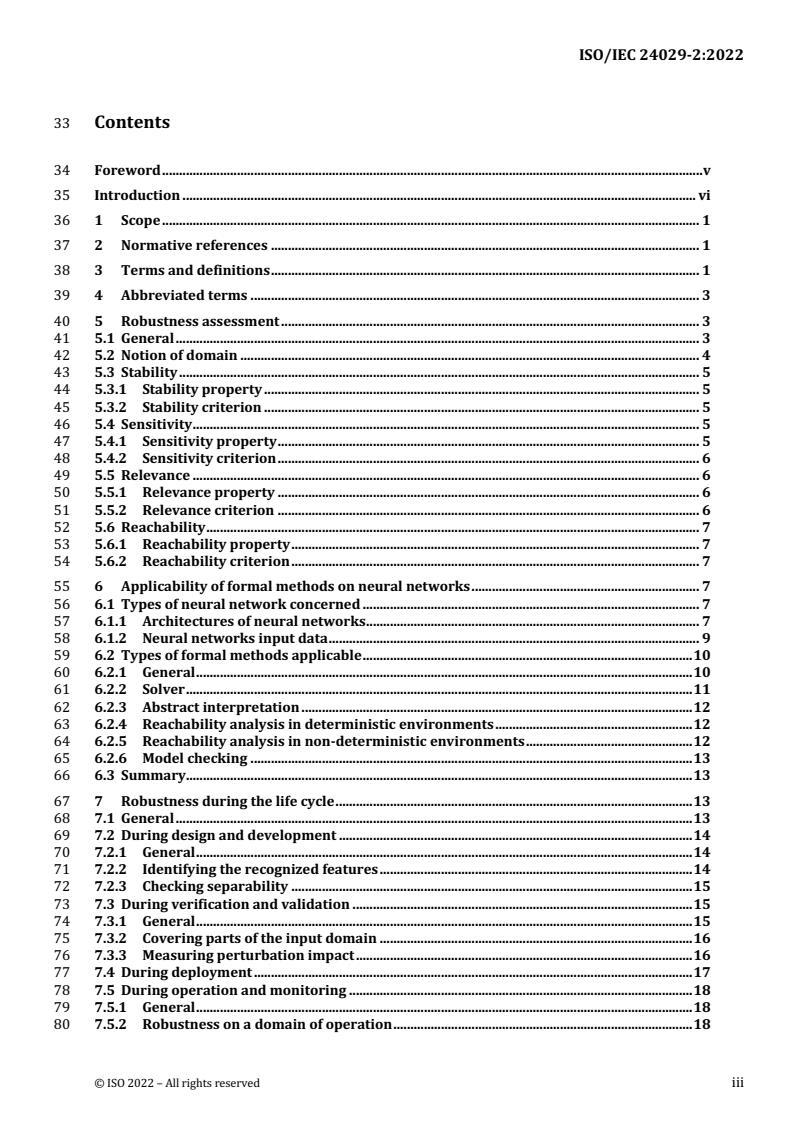

33 Contents

34 Foreword ............................................................................................................................................................... v

35 Introduction ....................................................................................................................................................... vi

36 1 Scope .............................................................................................................................................................. 1

37 2 Normative references .............................................................................................................................. 1

38 3 Terms and definitions .............................................................................................................................. 1

39 4 Abbreviated terms .................................................................................................................................... 3

40 5 Robustness assessment ........................................................................................................................... 3

41 5.1 General .......................................................................................................................................................... 3

42 5.2 Notion of domain ....................................................................................................................................... 4

43 5.3 Stability ......................................................................................................................................................... 5

44 5.3.1 Stability property ................................................................................................................................ 5

45 5.3.2 Stability criterion ................................................................................................................................ 5

46 5.4 Sensitivity ..................................................................................................................................................... 5

47 5.4.1 Sensitivity property ............................................................................................................................ 5

48 5.4.2 Sensitivity criterion ............................................................................................................................ 6

49 5.5 Relevance ..................................................................................................................................................... 6

50 5.5.1 Relevance property ............................................................................................................................ 6

51 5.5.2 Relevance criterion ............................................................................................................................ 6

52 5.6 Reachability ................................................................................................................................................. 7

53 5.6.1 Reachability property ........................................................................................................................ 7

54 5.6.2 Reachability criterion ........................................................................................................................ 7

55 6 Applicability of formal methods on neural networks ................................................................... 7

56 6.1 Types of neural network concerned ................................................................................................... 7

57 6.1.1 Architectures of neural networks .................................................................................................. 7

58 6.1.2 Neural networks input data ............................................................................................................. 9

59 6.2 Types of formal methods applicable ................................................................................................. 10

60 6.2.1 General .................................................................................................................................................. 10

61 6.2.2 Solver ..................................................................................................................................................... 11

62 6.2.3 Abstract interpretation ................................................................................................................... 12

63 6.2.4 Reachability analysis in deterministic environments .......................................................... 12

64 6.2.5 Reachability analysis in non-deterministic environments ................................................. 12

65 6.2.6 Model checking .................................................................................................................................. 13

66 6.3 Summary ..................................................................................................................................................... 13

67 7 Robustness during the life cycle ......................................................................................................... 13

68 7.1 General ........................................................................................................................................................ 13

69 7.2 During design and development ........................................................................................................ 14

70 7.2.1 General .................................................................................................................................................. 14

71 7.2.2 Identifying the recognized features ............................................................................................ 14

72 7.2.3 Checking separability ...................................................................................................................... 15

73 7.3 During verification and validation .................................................................................................... 15

74 7.3.1 General .................................................................................................................................................. 15

75 7.3.2 Covering parts of the input domain ............................................................................................ 16

76 7.3.3 Measuring perturbation impact ................................................................................................... 16

77 7.4 During deployment ................................................................................................................................. 17

78 7.5 During operation and monitoring ..................................................................................................... 18

79 7.5.1 General .................................................................................................................................................. 18

80 7.5.2 Robustness on a domain of operation ........................................................................................ 18

© ISO 2022 – All rights reserved iii---------------------- Page: 3 ----------------------

ISO/IEC 24029-2:2022

81 7.5.3 Changes in robustness ..................................................................................................................... 19

82 Bibliography ...................................................................................................................................................... 20

iv © ISO 2022 – All rights reserved---------------------- Page: 4 ----------------------

ISO/IEC 24029-2:2022

84 Foreword

85 ISO (the International Organization for Standardization) is a worldwide federation of national standards

86 bodies (ISO member bodies). The work of preparing International Standards is normally carried out

87 through ISO technical committees. Each member body interested in a subject for which a technical

88 committee has been established has the right to be represented on that committee. International

89 organizations, governmental and non-governmental, in liaison with ISO, also take part in the work. ISO

90 collaborates closely with the International Electrotechnical Commission (IEC) on all matters of

91 electrotechnical standardization.92 The procedures used to develop this document and those intended for its further maintenance are

93 described in the ISO/IEC Directives, Part 1. In particular, the different approval criteria needed for the

94 different types of ISO documents should be noted. This document was drafted in accordance with the

95 editorial rules of the ISO/IEC Directives, Part 2 (see www.iso.org/directives).

96 Attention is drawn to the possibility that some of the elements of this document may be the subject of

97 patent rights. ISO shall not be held responsible for identifying any or all such patent rights. Details of any

98 patent rights identified during the development of the document will be in the Introduction and/or on

99 the ISO list of patent declarations received (see www.iso.org/patents).100 Any trade name used in this document is information given for the convenience of users and does not

101 constitute an endorsement.102 For an explanation of the voluntary nature of standards, the meaning of ISO specific terms and

103 expressions related to conformity assessment, as well as information about ISO's adherence to the World

104 Trade Organization (WTO) principles in the Technical Barriers to Trade (TBT), see

105 www.iso.org/iso/foreword.html.106 This document was prepared by Technical Committee ISO/IEC JTC 1, Information technology,

107 Subcommittee SC 42, Artificial intelligence.108 A list of all parts in the ISO/IEC 24029 series can be found on the ISO website.

109 Any feedback or questions on this document should be directed to the user’s national standards body. A

110 complete listing of these bodies can be found at www.iso.org/members.html.111

112

© ISO 2022 – All rights reserved v

---------------------- Page: 5 ----------------------

ISO/IEC 24029-2:2022

113 Introduction

114 Neural networks are widely used to perform complex tasks in various contexts, such as image or natural

115 language processing, and predictive maintenance. AI system quality models comprise certain

116 characteristics, including robustness. For example, ISO/IEC 25059:— [1], which extends the SQuaRE

117 series [2] to AI systems, considers in its quality model that robustness is a sub-characteristic of reliability.

118 Demonstrating the ability of a system to maintain its level of performance under varying conditions can

119 be done using statistical analysis, but proving it requires some form of formal analysis. In that regard

120 formal methods can be complementary to other methods in order to increase the trust in the robustness

121 of the neural network.122 Formal methods are mathematical techniques for rigorous specification and verification of software and

123 hardware systems with the goal to prove their correctness. Formal methods can be used to formally

124 reason about neural networks and prove whether they satisfy relevant robustness properties. For

125 example, consider a neural network classifier that takes as input an image and outputs a label from a fixed

126 set of classes (such as car or airplane). Such a classifier can be formalized as a mathematical function that

127 takes the pixel intensities of an image as input, computes the probabilities for each possible class from

128 the fixed set, and returns a label corresponding to the highest probability. This formal model can then be

129 used to mathematically reason about the neural network when the input image is modified. For example,

130 suppose we are given a concrete image for which the neural network outputs the label “car”. We can ask

131 the question: “can the network output a different label if we arbitrarily modify the value of an arbitrary

132 pixel in the image?” This question can be formulated as a formal mathematical statement that is either

133 true or false for a given neural network and image.134 A classical approach to using formal methods consists of three main steps that are described in this

135 document. First, the system to be analyzed is formally defined in a model that precisely captures all

136 possible behaviours of the system. Then, a requirement is mathematically defined. Finally, a formal

137 method, such as solver, abstract interpretation or model checking, is used to assess whether the system

138 meets the given requirement, yielding either a proof, a counterexample or an inconclusive result.

139 This document provides the methodology including recommendations and requirements on the use of

140 formal methods to assess the robustness of neural networks during their life cycle. The document covers

141 several available formal method techniques. At each step of the life cycle, the document presents criteria

142 that are applicable to assess the robustness of neural network and to establish how neural networks are

143 verified by formal methods. Formal methods can have issues in terms of scalability, however they are still

144 applicable to all types of neural networks performing various tasks on several data types. While formal

145 methods have been used on traditional software systems for a while, the use of formal methods on neural

146 networks is fairly recent and is still an active field of investigation.147 This document is aimed at helping artificial intelligence engineers and quality engineers who use neural

148 networks and who have to assess their robustness throughout their life cycle. The reader can also refer

149 to ISO/IEC TR 24029-1:2021 [3] to have a more detailed overview of the techniques available to assess

150 the robustness of neural networks, beyond the formal methods used by this document.

151152

153

Under preparation. Stage at the time of publication: ISO/IEC CD 25059:2021.

vi © ISO 2022 – All rights reserved

---------------------- Page: 6 ----------------------

ISO/IEC 24029-2:2022

154 Information technology — Artificial Intelligence (AI) —

155 Assessment of the robustness of neural networks — Part 2:

156 Methodology for the use of formal methods

157 1 Scope

158 This document provides methodology for the use of formal methods to assess robustness

159 properties of neural networks. The document focuses on how to select, apply and manage formal

160 methods to prove robustness properties.161 2 Normative references

162 The following documents are referred to in the text in such a way that some or all of their content

163 constitutes requirements of this document. For dated references, only the edition cited applies. For

164 undated references, the latest edition of the referenced document (including any amendments)

165 applies.166 ISO/IEC 22989:— , Information Technology — Artificial intelligence — Artificial intelligence

167 concepts and terminology168 ISO/IEC 23053:— , Framework for Artificial Intelligence (AI) Systems Using Machine Learning (ML)

169 3 Terms and definitions170 For the purposes of this document, the terms and definitions given in ISO/IEC 22989:—, ISO/IEC

171 23053:— and the following apply.172 ISO and IEC maintain terminological databases for use in standardization at the following

173 addresses:174 — ISO Online browsing platform: available at https://www.iso.org/obp

175 — IEC Electropedia: available at http://www.electropedia.org/

176 3.1

177 architecture

178 fundamental concepts or properties of a system in its environment embodied in its elements,

179 relationships, and in the principles of its design and evolution180 [SOURCE: ISO/IEC/IEEE 42010:2011, 3.2]

181 3.2

182 attribute

183 property or characteristic of an object that can be distinguished quantitatively or qualitatively by

184 human or automated means185 [SOURCE: ISO/IEC/IEEE 15939:2017, 3.2]

186 3.3

187 bounded domain

188 set containing a finite number of objects

Under preparation. Stage at the time of publication: ISO/IEC FDIS 22989:2022.

Under preparation. Stage at the time of publication: ISO/IEC FDIS 23053:2022.

© ISO 2022 – All rights reserved 1

---------------------- Page: 7 ----------------------

ISO/IEC 24029-2:2022

189 EXAMPLE 1: The domain of all valid 8-bit RGB images with n-pixels is bounded by its size which is at most

�.�190 256 .

191 EXAMPLE 2: The number of all valid English sentences is infinite; therefore this domain is unbounded.

192 Note 1 to entry: The number of objects in an unbounded domain can be infinite.

193 3.4194 bounded object

195 object represented by a finite number of attributes

196 Note 1 to entry: Contrary to a bounded object, an unbounded object is represented with an infinite number

197 of attributes.198 3.5

199 criteria

200 criterion

201 rules on which a judgment or decision can be based, or by which a product, service, result, or

202 process can be evaluated203 [SOURCE: ISO/IEC/IEEE 15289:2019(en), 3.1.6, added criterion as admitted term]

204 3.6205 domain

206 set of possible inputs to a neural network characterized by attributes of the environment

207 EXAMPLE 1: A neural network performing a natural language processing task is manipulating texts

208 composed of words. Even though the number of possible different texts is unbounded, the maximum length

209 of each sentence is always bounded. An attribute describing this domain can therefore be the maximum

210 length allowed for each sentence.211 EXAMPLE 2: A face capture requirements can include, inter alia, that the size of faces is at least 40x40 pixels.

212 That half-profile faces are detectable at a lower level of accuracy, provided most of the facial features are still

213 visible. Similarly, partial occlusions are handled to some extent. Detection typically requires that more than

214 70% of the face is visible. Views where the camera is the same height as the face perform best and

215 performance degrades as the view moves above 30 degrees or below 20 degrees from straight on.

216 Note 1 to entry: An attribute is used to describe a bounded object even though the domain can be unbounded.

217 3.7218 model

219 formal expression of a theory

220 3.8

221 stability

222 extent to which the output of a neural network remains the same when its inputs are changed

223 Note 1 to entry: Stability is not responding to change when input change is noise.

224 3.9225 sensitivity

226 extent to which the output of a neural network varies when its inputs are changed

227 Note 1 to entry: Sensitivity is responding to change when input change is informative.

228 3.10229 time series

230 sequence of values sampled at successive points in time

231 [SOURCE: ISO/IEC 19794-7:2007(en), 4.2]

2 © ISO 2022 – All rights reserved

---------------------- Page: 8 ----------------------

ISO/IEC 24029-2:2022

232 4 Abbreviated terms

233 AI artificial intelligence

234 BNN binarized neural networks

235 MILP mixed-integer linear programming

236 MRI magnetic resonance imaging

237 PLNN piecewise linear neural networks

238 ReLU rectified linear unit

239 RNN recurrent neural networks

240 SAR synthetic aperture radar

241 SMC satisfiability modulo convex

242 SMT satisfiability modulo theories

243 5 Robustness assessment

244 5.1 General

245 In the context of neural networks, robustness specifications typically represent different conditions

246 that can naturally or adversarially change in the domain (see Clause 5.2) in which the neural

247 network is deployed.248 EXAMPLE 1: Consider a neural network that processes medical images, where inputs fed to the neural

249 network are collected with a medical device that scans patients. Taking multiple images of the same patient

250 naturally does not produce identical images. This is because the orientation of the patient can slightly change,

251 the lighting in the room can change, an object can be reflected or random noise can be added by image post-

252 processing steps.253 EXAMPLE 2: Consider a neural network that processes the outputs of sensors and onboard cameras of an

254 self-driving vehicle. Due to the dynamic nature of the outside world, such as weather conditions, pollution

255 and lighting conditions, the input to the neural network is expected to have wide variations of various

256 attributes.257 Importantly, these variations introduced by the environment are typically not expected to change

258 the neural network’s output. The robustness of the neural network can then be verified against

259 changes to such environmental conditions by verifying its robustness against relevant proxy

260 specifications within the domain of use of the neural network.261 Robustness properties can be local or global [10]. It is more common to verify local robustness

262 properties than global robustness properties, as the former are easier to specify. Local robustness

263 properties are specified with respect to a sample input from the test dataset. For example, given an

264 image correctly classified as a car, the local robustness property can specify that all images

265 generated by rotating the original image within 5 degrees are also classified as a car. A drawback

266 of verifying local robustness properties is that the guarantees are local to the provided test sample

267 and do not extend to other samples in the dataset. In contrast, global robustness properties define

268 guarantees that hold deterministically over all possible inputs [11]. For domains where input

269 features have semantic meaning, for example, air traffic collision avoidance systems, the global

270 properties can be specified by defining valid input values for the input features expected in a real-

271 world deployment. Defining meaningful input values is more challenging in settings where the

272 individual features have no semantic meaning.© ISO 2022 – All rights reserved 3

---------------------- Page: 9 ----------------------

ISO/IEC 24029-2:2022

273 5.2 Notion of domain

274 Most AI systems, including artificial neural networks, are intended to operate in a particular

275 environment where their performance characteristics can be defined and evaluated (typical

276 metrics of evaluation can be found in Table 1 of ISO/IEC TR 24029-1:2021 [3]). Robustness, being

277 one of the key performance characteristics, is inseparable from the domain where a neural network

278 is operating. The existence of a bounded domain is implicit in many neural network applications

279 (e.g. image classification expects images of certain quality and in a certain format).

280 The agent paradigm shown in Figure 1 drawn from ISO/IEC 22989:— postulates that an agent

281 senses its environment and acts on this environment towards achieving certain goals. The distinct

282 concepts AI Agent and environment are emphasized in this paradigm. The notion of domain

283 captures the limitations of current technology where a neural network, being a particular type of

284 AI agent, is technically capable of achieving its goal only if it is operating on appropriate inputs. An

285 example is a neural network operating in an environment where all relevant features and qualities

286 for its goal have been taken into consideration in the design, training and deployment.

actionsgoal

agent environment

sensing

287

288 Figure 1 — The agent paradigm

289 The definition rests on the following pillars:

290 — to be of practical use, a domain needs to be determined by a set of attributes which are clearly

291 defined;292 — the specification of domain should be sufficient for the AI system to conduct one or more given

293 tasks as intended;294 — data used for training should be representative of data expected to be used for inference.

295 Establishing a domain involves specifying all data attributes essential for the neural network to be

296 capable of achieving its goal.297 Several popular domains of application of neural networks cover applications in vision, speech

298 processing and robotics. To describe these domains, and more importantly their variability, the

299 attributes used are generally numerical in essence. For example, the shape of an object in an image,

300 the intensity of some pixels or the amplitude of an audio signal.301 However, there are other domains that can be expressed through non-numerical attributes. natural

302 language processing (NLP), BigCode (the use of automatically learning from existing code) and

303 graphs are examples of such domains. In these cases, the attributes can be non-numerical, for

304 example, the words in a sentence or the edges in a graph.305 The attributes allow the user to morph one instance in the domain to another instance and should

306 be bounded in the robustness specification.4 © ISO 2022 – All rights reserved

---------------------- Page: 10 ----------------------

ISO/IEC 24029-2:2022

307 5.3 Stability

308 5.3.1 Stability property

309 A stability property expresses the extent to which a neural network output remains the same when

310 its inputs vary over a specific domain. Checking the stability over a domain where the behaviour is

311 supposed to hold allows for checking whether or not the performance will hold too. A stability

312 property can be expressed either in a closed-end form (e.g. “is the variation under this threshold?”)

313 or an open-ended form (e.g. “what is the largest stable domain?”).314 In order to prove that a neural network remains performant in the presence of noisy inputs, a

315 stability property shall be expressed. A stability property should only be used on domains of uses

316 which, in terms of expected behaviour, present some regularity properties. It should not be used

317 on a chaotic system, for example, as it will not be relevant. When the regularity of the domain is not

318 easy to affirm (e.g. chaotic system), it can still be useful to use the stability property to compare

319 neural networks.320 5.3.2 Stability criterion

...

Questions, Comments and Discussion

Ask us and Technical Secretary will try to provide an answer. You can facilitate discussion about the standard in here.