ASTM D6233-98

Standard Guide for Data Assessment for Environmental Waste Management Activities

SCOPE

1.1 This guide covers a practical strategy for examining an environmental project data collection effort and the resulting data to determine conformance with the project plan and impact on data usability. This guide also leads the user through a logical sequence to determine which statistical protocols should be applied to the data.

Standards Content (Sample)

NOTICE: This standard has either been superseded and replaced by a new version or discontinued.

Contact ASTM International (www.astm.org) for the latest information.

Designation: D 6233 – 98

Standard Guide for Data Assessment for Environmental Waste Management Activities

This standard is issued under the fixed designation D 6233; the number immediately following the designation indicates the year of original adoption or, in the case of revision, the year of last revision. A number in parentheses indicates the year of last reapproval. A superscript epsilon (ε) indicates an editorial change since the last revision or reapproval.

This guide is under the jurisdiction of ASTM Committee D34 on Waste Management and is the direct responsibility of Subcommittee D34.01.01 on Planning for Sampling.

Current edition approved Feb. 10, 1998. Published June 1998.

Copyright © ASTM, 100 Barr Harbor Drive, West Conshohocken, PA 19428-2959, United States.

1. Scope

1.1 This guide covers a practical strategy for examining an environmental project data collection effort and the resulting data to determine if they will support the intended use. It covers the review of project activities to determine conformance with the project plan and impact on data usability. This guide also leads the user through a logical sequence to determine which statistical protocols should be applied to the data.

1.1.1 This guide does not establish criteria for the acceptance or use of data but instructs the assessor/user to use the criteria established by the project team during the planning (data quality objective process), and optimization and implementation (sampling and analysis plan) process.

1.2 This standard does not purport to address all of the safety concerns, if any, associated with its use. It is the responsibility of the user of this standard to establish appropriate safety and health practices and determine the applicability of regulatory limitations prior to use.

2. Referenced Documents

2.1 ASTM Standards:

D 4687 Guide for General Planning of Waste Sampling

D 5088 Practice for Decontamination of Field Equipment Used at Nonradioactive Waste Sites

D 5283 Practice for Generation of Environmental Data Related to Waste Management Activities: Quality Assurance and Quality Control Planning and Implementation Activities

D 5792 Practice for Generation of Environmental Data Related to Waste Management Activities: Development of Data Quality Objectives

D 5956 Guide for Sampling Strategies for Heterogeneous Wastes

D 6044 Guide for Representative Sampling for Management of Waste and Contaminated Media

Annual Book of ASTM Standards, Vol 11.04.

Annual Book of ASTM Standards, Vol 04.09.

3. Terminology

3.1 Definitions of Terms Specific to This Standard:

3.1.1 bias, n—a systematic error that is consistently negative or consistently positive.

3.1.2 characteristic, n—a property of items in a sample or population which can be measured, counted, or otherwise observed.

3.1.3 composite sample, n—a physical combination of two or more samples.

3.1.4 confidence limit, n—an upper and/or lower limit(s) within which the true value is likely to be contained with a stated probability or confidence.

3.1.5 continuous data, n—data where the values of the individual samples may vary from minus infinity to plus infinity.

3.1.6 data quality objectives (DQOs), n—DQOs are qualitative and quantitative statements derived from the DQO process describing the decision rules and the uncertainties of the decision(s) within the context of the problem(s).

3.1.7 data quality objective process, n—a quality management tool based on the scientific method and developed to facilitate the planning of environmental data collection activities.

3.1.8 discrete data, n—data made up of sample results that are expressed as a simple pass/fail, yes/no, or positive/negative.

3.1.9 heterogeneity, n—the condition of the population under which all items of the population are not identical with respect to the parameter of interest.

3.1.10 homogeneity, n—the condition of the population under which all items of the population are identical with respect to the parameter of interest.

3.1.11 population, n—the totality of items or units under consideration.

3.1.12 representative sample, n—a sample collected in such a manner that it reflects one or more characteristics of interest (as defined by the project objectives) of a population from which it is collected.

3.1.13 sample, n—a portion of material which is taken from a larger quantity for the purpose of estimating properties or composition of the larger quantity.

3.1.14 sampling design error, n—error which results from the unavoidable limitations faced when media with inherently
NOTICE: This standard has either been superceded and replaced by a new version or discontinued.

Contact ASTM International (www.astm.org) for the latest information.

D 6233

TABLE 1 Information Needed to Evaluate the Integrity of the

variable qualities are measured and incorrect judgement on the

Environmental Sample Collection and Analysis Process

part of the project team.

General Project Details • Site History

3.1.15 subsample, n—a portion of a sample that is taken for

• Process Description

testing or for record purposes.

• Waste Generation Records

• Waste Handling/Disposal Practices

4. Significance and Use

• Sources of Contamination

• Conceptual Site Model

4.1 This guide presents a logical process for determining the

• Potential Contaminants of Concern

usability of environmental data for decision making activities.

• Fate and Transport Mechanisms

• Exposure Pathways

The process describes a series of steps to determine if the

• Boundaries of the Study Area

enviromental data were collected as planned by the project

• Adjacent Properties

team and to determine if the a priori expectations/assumptions

Sampling Issues • Sampling Strategy

of the team were met.

• Sample Location

4.2 This guide identifies the technical issues pertinent to the

• Sample Number

integrity of the environmental sample collection and analysis

• Sample Matrix

• Sample Volume/Mass

process. It guides the data assessor and data user about the

• Discrete/Composite Samples

appropriate action to take when data fail to meet acceptable

• Sample Representativeness

standards of quality and reliability.

Sampling Equipment, Containers and

•

Preservatives

4.3 The guide discusses, in practical terms, the proper

application of statistical procedures to evaluate the database. It

Analytical Issues • Laboratory Sub-sampling

emphasizes the major issues to be considered and provides

• Sample Preparation Methods

• Analytical Method

references to more thorough statistical treatments for those

• Detection Limits

users involved in detailed statistical assessments.

• Matrix Interferences

4.4 This guide is intended for those who are responsible for • Bias

• Holding Times

making decisions about environmental waste management

• Calibration

projects.

• Quality Control Results

• Contamination

5. General Considerations

• Reporting Requirements

• Reagents/Supplies

5.1 This guide provides general guidance about applying

numerical and other techniques to the assessment of data Validation and

• Data Quality Objectives

Assessment

resulting form environmental data collection activities associ-

• Chain of Custody

ated with waste management activities.

• Action Level

5.2 The environmental measurement process is a complex

• Completeness

• Laboratory Audit Results

process requiring input from a variety of personnel to properly

• Field and Laboratory Records

address the numerous issues related to the integrity of the

• Level of Uncertainty in Reported Values

sample collection and measurement process in sufficient detail.

Table 1 lists many of the topics that are common to most

environmental projects. A well-executed project planning ac-

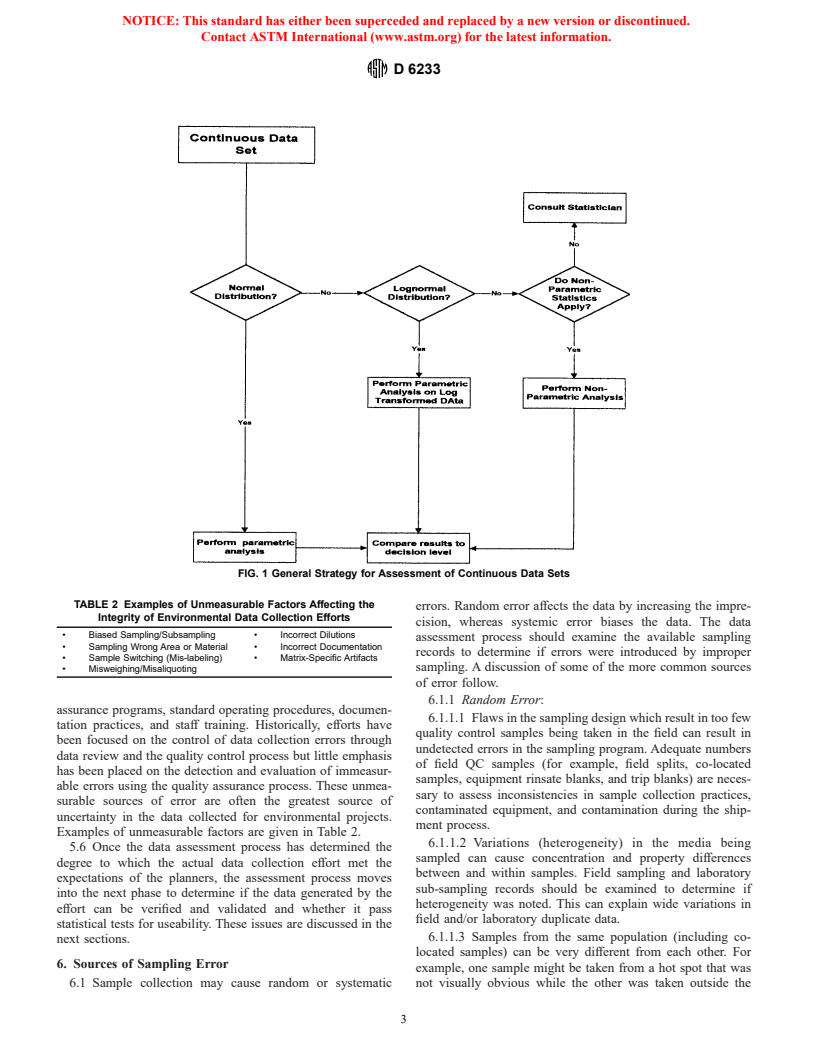

tivity (see Guide D 4687, Practices D 5088, D 5283, and implemented, they result in data sets (see Fig. 1) that are

D 5792) should consider the impact of each of these issues on generated by in-control processes or out-of control processes

the reliability of the final project decision. The data assessment that were not amenable to corrective action but whose details

process must then evaluate the actual performance in these are explained by the project staff conducting the work. Good

areas versus that expected by the project planners. Significant QC programs lead to reliable data that are seldom called into

deviations from the a priori performance level of any one or question during the assessment process. However, in cases

combination of these issues may impact the reliability of the where the absence of staff responsibility or authority to

project decision and necessitate a reconsideration of the self-monitor and correct deficiencies at the working level is

missing, the burden of assuring data integrity is placed on the

decision criteria by the project decision makers.

5.3 Appropriate professionals must assess the project plan- quality assurance (QA) function. The data assessment process

must determine the location (working level or QA level) where

ning documents and completed project records to determine if

the project findings match the conceptual model and decision effective quality control occurs (detection of error and execu-

tion of corrective action) in the data collection process and

logic. In areas where the findings don’t match, the assessors

must document and report their findings and, if possible, the focus on how well the QC function was executed. As a general

potential impact on the decision process. Items subject to rule, if the QC function is not executed in real-time and

numerical confirmation are compared to the project plan and thoroughly documented by the staff performing the work, the

any discrepancies and their potential impact noted. more likely the data assessor will be to find questionable data.

5.4 Effective quality control (QC) programs are those that 5.5 In addition to addressing the issues listed in Table 1, the

empower the individuals performing the work to evaluate their data assessment process must search for unmeasurable factors

performance and implement real-time corrections during the whose impact cannot be detected by the review of the project

sampling or measurement process, or both. When quality records against expectations or numerical techniques. These

control processes (including documentation) are properly are the types of things that are controlled by effective quality

assurance programs, standard operating procedures, documentation practices, and staff training. Historically, efforts have been focused on the control of data collection errors through data review and the quality control process but little emphasis has been placed on the detection and evaluation of unmeasurable errors using the quality assurance process. These unmeasurable sources of error are often the greatest source of uncertainty in the data collected for environmental projects. Examples of unmeasurable factors are given in Table 2.

TABLE 2 Examples of Unmeasurable Factors Affecting the Integrity of Environmental Data Collection Efforts

• Biased Sampling/Subsampling
• Sampling Wrong Area or Material
• Sample Switching (Mis-labeling)
• Misweighing/Misaliquoting
• Incorrect Dilutions
• Incorrect Documentation
• Matrix-Specific Artifacts

FIG. 1 General Strategy for Assessment of Continuous Data Sets

5.6 Once the data assessment process has determined the degree to which the actual data collection effort met the expectations of the planners, the assessment process moves into the next phase to determine if the data generated by the effort can be verified and validated and whether they pass statistical tests for usability. These issues are discussed in the next sections.

6. Sources of Sampling Error

6.1 Sample collection may cause random or systematic errors. Random error affects the data by increasing the imprecision, whereas systematic error biases the data. The data assessment process should examine the available sampling records to determine if errors were introduced by improper sampling. A discussion of some of the more common sources of error follows.

6.1.1 Random Error:

6.1.1.1 Flaws in the sampling design which result in too few quality control samples being taken in the field can result in undetected errors in the sampling program. Adequate numbers of field QC samples (for example, field splits, co-located samples, equipment rinsate blanks, and trip blanks) are necessary to assess inconsistencies in sample collection practices, contaminated equipment, and contamination during the shipment process.

6.1.1.2 Variations (heterogeneity) in the media being sampled can cause concentration and property differences between and within samples. Field sampling and laboratory sub-sampling records should be examined to determine if heterogeneity was noted. This can explain wide variations in field and/or laboratory duplicate data.

6.1.1.3 Samples from the same population (including co-located samples) can be very different from each other. For example, one sample might be taken from a hot spot that was not visually obvious while the other was taken outside the
perimeter of the hot spot. If data from areas of high concentration are contained in data sets consisting primarily of uncontaminated material, statistical outlier analysis might suggest the sample data should be omitted from consideration when evaluating a site. This can cause serious decision errors. Prior to declaring the data point(s) to be outliers, it is important for the assessor to examine the QC records from the analysis yielding the suspect data. If the QC data indicate the system was in control and review of the raw sample data reveals no handling or calculation errors, the suspect data should be discussed in the assessor’s report but they should not be disco...

... context of the sampling activity. Such assessments add materially to the usability of the data.

7. Sources of Analytical Error

7.1 Variation in the analytical process may cause random or systematic error. Random error affects the data by increasing the imprecision, whereas systematic error increases the bias of the data. The data assessment process should examine the available analytical records to determine if errors were introduced in the data by the analytical process. Analytical results can also be impacted by sample matrix effects. Discussion of

...
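
The caution in 6.1.1.3 about statistical outlier analysis can be illustrated with a small numerical sketch. It is not part of the guide. The concentrations are made up, and Tukey's 1.5 x IQR fences are used only as a stand-in for whatever outlier test a project plan might specify (the guide does not name one). The sketch shows how a single hot-spot result in a mostly uncontaminated data set is flagged, and how far the mean moves if the flagged value is dropped without the QC review the guide calls for.

```python
import statistics


def tukey_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical concentrations (mg/kg): nine background samples and one
# sample that happened to be collected inside a hot spot.
results = [1.2, 0.9, 1.1, 1.4, 1.0, 1.3, 0.8, 1.2, 1.1, 48.0]

flagged = tukey_outliers(results)
mean_all = statistics.mean(results)
mean_trimmed = statistics.mean(v for v in results if v not in flagged)

print("flagged as outliers:", flagged)
print(f"mean with all data:        {mean_all:.2f} mg/kg")
print(f"mean without flagged data: {mean_trimmed:.2f} mg/kg")
```

With these assumed values the hot-spot result is the only one flagged, and removing it lowers the mean by roughly a factor of five. A flag from a numerical test is therefore a prompt to check the QC and sampling records, not a reason by itself to discard the result.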
