ASTM E2164-01(2007)

Standard Test Method for Directional Difference Test

SIGNIFICANCE AND USE

The directional difference test determines with a given confidence level whether or not there is a perceivable difference in the intensity of a specified attribute between two samples, for example, when a change is made in an ingredient, a process, packaging, handling, or storage.

The directional difference test is inappropriate when evaluating products with sensory characteristics that are not easily specified, not commonly understood, or not known in advance. Other difference test methods such as the same-different test should be used.

A result of no significant difference in a specific attribute does not ensure that there are no differences between the two samples in other attributes or characteristics, nor does it indicate that the attribute is the same for both samples. It may merely indicate that the degree of difference is too low to be detected with the sensitivity (α, β, and Pmax) chosen for the test.

The method itself does not change whether the purpose of the test is to determine that two samples are perceivably different versus that the samples are not perceivably different. Only the selected values of Pmax, α, and β change. If the objective of the test is to determine if the two samples are perceivably different, then the value selected for α is typically smaller than the value selected for β. If the objective is to determine if no perceivable difference exists, then the value selected for β is typically smaller than the value selected for α and the value of Pmax needs to be stated explicitly.
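As a non-normative illustration of how α enters the decision, the sketch below computes an exact binomial p-value for the number of common responses. It assumes that each assessor is equally likely to choose either sample when no difference is perceivable. The function name `directional_p_value` and its parameters are illustrative assumptions and are not part of this test method. A difference would be declared when the p-value does not exceed the chosen α.

```python
from math import comb


def directional_p_value(common: int, n: int, two_sided: bool = False) -> float:
    """Exact binomial p-value under the null hypothesis p = 0.5.

    common    -- number of common responses (hypothetical argument name)
    n         -- total number of assessors
    two_sided -- True when no direction of difference was expected in advance;
                 `common` is then the larger of the two counts
    """
    if not 0 <= common <= n:
        raise ValueError("common must lie between 0 and n")
    # Upper-tail probability P(X >= common) for X ~ Binomial(n, 0.5).
    upper_tail = sum(comb(n, k) for k in range(common, n + 1)) / 2 ** n
    # A two-sided test doubles the tail probability, capped at 1.
    return min(1.0, 2 * upper_tail) if two_sided else upper_tail


if __name__ == "__main__":
    # 21 of 30 assessors chose the sample expected to be more intense.
    print(round(directional_p_value(21, 30), 4))
```

For example, 21 common responses from 30 assessors in a one-sided test give a p-value of about 0.021, which would be declared significant at α = 0.05.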

SCOPE

1.1 This test method covers a procedure for comparing two products using a two-alternative forced-choice task.

1.2 This method is sometimes referred to as a paired comparison test or as a 2-AFC (alternative forced choice) test.

1.3 A directional difference test determines whether a difference exists in the perceived intensity of a specified sensory attribute between two samples.

1.4 Directional difference testing is limited in its application to a specified sensory attribute and does not directly determine the magnitude of the difference for that specific attribute. Assessors must be able to recognize and understand the specified attribute. A lack of difference in the specified attribute does not imply that no overall difference exists.

1.5 This test method does not address preference.

1.6 A directional difference test is a simple task for assessors, and is used when sensory fatigue or carryover is a concern. The directional difference test does not exhibit the same level of fatigue, carryover, or adaptation as multiple sample tests such as triangle or duo-trio tests. For detail on comparisons among the various difference tests, see Refs. (1), (2), and (3).

1.7 The procedure of the test described in this document consists of presenting a single pair of samples to the assessors.

1.8 This standard does not purport to address all of the safety concerns, if any, associated with its use. It is the responsibility of the user of this standard to establish appropriate safety and health practices and determine the applicability of regulatory limitations prior to use.

Standards Content (Sample)

NOTICE: This standard has either been superseded and replaced by a new version or withdrawn.

Contact ASTM International (www.astm.org) for the latest information

Designation: E 2164 – 01 (Reapproved 2007)

Standard Test Method for Directional Difference Test

This standard is issued under the fixed designation E 2164; the number immediately following the designation indicates the year of original adoption or, in the case of revision, the year of last revision. A number in parentheses indicates the year of last reapproval. A superscript epsilon (ε) indicates an editorial change since the last revision or reapproval.

1. Scope

1.1 This test method covers a procedure for comparing two products using a two-alternative forced-choice task.

1.2 This method is sometimes referred to as a paired comparison test or as a 2-AFC (alternative forced choice) test.

1.3 A directional difference test determines whether a difference exists in the perceived intensity of a specified sensory attribute between two samples.

1.4 Directional difference testing is limited in its application to a specified sensory attribute and does not directly determine the magnitude of the difference for that specific attribute. Assessors must be able to recognize and understand the specified attribute. A lack of difference in the specified attribute does not imply that no overall difference exists.

1.5 This test method does not address preference.

1.6 A directional difference test is a simple task for assessors, and is used when sensory fatigue or carryover is a concern. The directional difference test does not exhibit the same level of fatigue, carryover, or adaptation as multiple sample tests such as triangle or duo-trio tests. For detail on comparisons among the various difference tests, see Refs. (1), (2), and (3). (See Footnote 2.)

1.7 The procedure of the test described in this document consists of presenting a single pair of samples to the assessors.

1.8 This standard does not purport to address all of the safety concerns, if any, associated with its use. It is the responsibility of the user of this standard to establish appropriate safety and health practices and determine the applicability of regulatory limitations prior to use.

2. Referenced Documents

2.1 ASTM Standards: (See Footnote 3.)

E 253 Terminology Relating to Sensory Evaluation of Materials and Products

E 456 Terminology Relating to Quality and Statistics

E 1871 Guide for Serving Protocol for Sensory Evaluation of Foods and Beverages

2.2 ASTM Publications:

Manual 26 Sensory Testing Methods, 2nd Edition

STP 758 Guidelines for the Selection and Training of Sensory Panel Members

STP 913 Guidelines. Physical Requirements for Sensory Evaluation Laboratories

2.3 ISO Standard:

ISO 5495 Sensory Analysis – Methodology – Paired Comparison

3. Terminology

3.1 For definition of terms relating to sensory analysis, see Terminology E 253, and for terms relating to statistics, see Terminology E 456.

3.2 Definitions of Terms Specific to This Standard:

3.2.1 α (alpha) risk: the probability of concluding that a perceptible difference exists when, in reality, one does not (also known as type I error or significance level).

3.2.2 β (beta) risk: the probability of concluding that no perceptible difference exists when, in reality, one does (also known as type II error).

3.2.3 one-sided test: a test in which the researcher has an a priori expectation concerning the direction of the difference. In this case, the alternative hypothesis will express that the perceived intensity of the specified sensory attribute is greater (that is, A > B) (or lower (that is, A < B)) for one product than for the other.

3.2.4 two-sided test: a test in which the researcher does not have any a priori expectation concerning the direction of the difference. In this case, the alternative hypothesis will express that the perceived intensity of the specified sensory attribute is different from one product to the other (that is, A ≠ B).

3.2.5 common responses: for a one-sided test, the number of assessors selecting the sample expected to have a higher intensity of the specified sensory attribute. Common responses could also be defined in terms of lower intensity of the attribute if it is more relevant. For a two-sided test, the larger number of assessors selecting sample A or B.

Footnote 1: This test method is under the jurisdiction of ASTM Committee E18 on Sensory Evaluation and is the direct responsibility of Subcommittee E18.04 on Fundamentals of Sensory. Current edition approved Sept. 1, 2007. Published January 2008. Originally approved in 2001. Last previous edition approved in 2001 as E 2164 – 01.

Footnote 2: The boldface numbers in parentheses refer to the list of references at the end of this standard.

Footnote 3: For referenced ASTM standards, visit the ASTM website, www.astm.org, or contact ASTM Customer Service at service@astm.org. For Annual Book of ASTM Standards volume information, refer to the standard's Document Summary page on the ASTM website.
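The definition of common responses in 3.2.5 can be sketched as a short tally. This is a minimal illustration only; the function name `common_responses` and the argument `expected_higher` are hypothetical and do not appear in this test method. The second value returned is the observed proportion of common responses.

```python
from typing import Optional, Tuple


def common_responses(chose_a: int, chose_b: int,
                     expected_higher: Optional[str] = None) -> Tuple[int, float]:
    """Return (number of common responses, observed proportion).

    expected_higher -- "A" or "B" for a one-sided test (the sample expected
                       in advance to be more intense); None for a two-sided test.
    """
    n = chose_a + chose_b
    if n == 0:
        raise ValueError("at least one response is required")
    if expected_higher is None:
        # Two-sided test: the larger of the two counts.
        common = max(chose_a, chose_b)
    elif expected_higher == "A":
        common = chose_a
    elif expected_higher == "B":
        common = chose_b
    else:
        raise ValueError("expected_higher must be 'A', 'B', or None")
    return common, common / n


if __name__ == "__main__":
    print(common_responses(18, 12, expected_higher="A"))
    print(common_responses(12, 18))
```

In a one-sided test the count is taken for the sample named in advance, even when it turns out to be the minority choice; in a two-sided test the larger count is always used.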

Copyright © ASTM International, 100 Barr Harbor Drive, PO Box C700, West Conshohocken, PA 19428-2959, United States.

3.2.6 Pmax: a test sensitivity parameter established prior to testing and used along with the selected values of α and β to determine the number of assessors needed in a study. Pmax is the proportion of common responses that the researcher wants the test to be able to detect with a probability of 1 − β. For example, if a researcher wants to be 90 % confident of detecting a 60:40 split in a directional difference test, then Pmax = 60 % and β = 0.10. Pmax is relative to a population of judges that has to be defined based on the characteristics of the panel used for the test. For instance, if the panel consists of trained assessors, Pmax will be representative of a population of trained assessors, but not of consumers.

3.2.7 Pc: the proportion of common responses that is calculated from the test data.

3.2.8 product: the material to be evaluated.

3.2.9 sample: the unit of product prepared, presented, and evaluated in the test.

3.2.10 sensitivity: a general term used to summarize the performance characteristics of the test. The sensitivity of the test is rigorously defined, in statistical terms, by the values selected for α, β, and Pmax.

4. Summary of Test Method

4.1 Clearly define the test objective in writing.

4.2 Choose the number of assessors based on the sensitivity desired for the test. The sensitivity of the test is, in part, a function of two competing risks: the risk of declaring a difference in the attribute when there is none (that is, α-risk) and the risk of not declaring a difference in the attribute when there is one (that is, β-risk). Acceptable values of α and β vary depending on the test objective. The values should be agreed upon by all parties affected by the results of the test.

4.3 In directional difference testing, assessors receive a pair of coded samples and are informed of the attribute to be evaluated. The assessors report which they believe to be higher or lower in intensity of the specified attribute, even if the selection is based only on a guess.

4.4 Results are tallied and significance determined by direct calculation or reference to a statistical table.

5. Significance and Use

5.1 The directional difference test determines with a given confidence level whether or not there is a perceivable difference in the intensity of a specified attribute between two samples, for example, when a change is made in an ingredient, a process, packaging, handling, or storage.

5.2 The directional difference test is inappropriate when evaluating products with sensory characteristics that are not easily specified, not commonly understood, or not known in advance. Other difference test methods such as the same-different test should be used.

5.3 A result of no significant difference in a specific attribute does not ensure that there are no differences between the two samples in other attributes or characteristics, nor does it indicate that the attribute is the same for both samples. It may merely indicate that the degree of difference is too low to be detected with the sensitivity (α, β, and Pmax) chosen for the test.

5.3.1 The method itself does not change whether the purpose of the test is to determine that two samples are perceivably different versus that the samples are not perceivably different. Only the selected values of Pmax, α, and β change. If the objective of the test is to determine if the two samples are perceivably different, then the value selected for α is typically smaller than the value selected for β. If the objective is to determine if no perceivable difference exists, then the value selected for β is typically smaller than the value selected for α and the value of Pmax needs to be stated explicitly.

6. Apparatus

6.1 Carry out the test under conditions that prevent contact between assessors until the evaluations have been completed, for example, booths that comply with STP 913.

6.2 Sample preparation and serving sizes should comply with Guide E 1871, or see Refs. (4) or (5).

7. Assessors

7.1 All assessors must be familiar with the mechanics of the directional difference test (format, task, and procedure of evaluation). For directional difference testing, assessors must be able to recognize and quantify the specified attribute.

7.2 The characteristics of the assessors used define the scope of the conclusions. Experience and familiarity with the product or the attribute may increase the sensitivity of an assessor and may therefore increase the likelihood of finding a significant difference. Monitoring the performance of assessors over time may be useful for selecting assessors with increased sensitivity. Consumers can be used, as long as they are familiar with the format of the directional difference test. If a sufficient number of employees are available for this test, they too can serve as assessors. If trained descriptive assessors are used, there should be sufficient numbers of them to meet the agreed-upon risks appropriate to the project. Mixing the types of assessors is not recommended, given the potential differences in sensitivity of each type of assessor.

7.3 The degree of training for directional difference testing should be addressed prior to test execution. Attribute-specific training may include a preliminary presentation of differing levels of the attribute, either shown external to the product or shown within the product, for example, as a solution or within a product formulation. If the test concerns the detection of a particular taint, consider the inclusion of samples during training that demonstrate its presence and absence. Such demonstration will increase the assessors' acuity for the taint (see STP 758 for details). Allow adequate time between the exposure to the training samples and the actual test to avoid carryover or fatigue.

7.4 During the test sessions, avoid giving information about product identity, expected treatment effects, or individual performance until all testing is complete.

8. Number of Assessors

8.1 Choose the number of assessors to yield the sensitivity called for by the test objectives. The sensitivity of the test is a function of four factors: α-risk, β-risk, maximum allowable proportion of common responses (Pmax), and whether the test is one-sided or two-sided.
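The interaction of the four factors named in 8.1 can be explored numerically. The sketch below is illustrative only and is not part of this test method. It uses the exact binomial distribution to estimate the β-risk obtained with a given number of assessors. The function names `critical_count` and `beta_risk` are assumptions, and for a two-sided test the small probability of reaching significance in the opposite direction is ignored.

```python
from math import comb


def critical_count(n: int, alpha: float, two_sided: bool = False) -> int:
    """Smallest count c with P(X >= c | p = 0.5) <= alpha (alpha/2 per tail if two-sided).

    Returns n + 1 when no count can reach significance with n assessors.
    """
    level = (alpha / 2 if two_sided else alpha) * 2 ** n
    tail = 0  # number of equally likely outcomes with X >= c
    for c in range(n, -1, -1):
        tail += comb(n, c)
        if tail > level:
            return c + 1
    return 0


def beta_risk(n: int, alpha: float, p_max: float, two_sided: bool = False) -> float:
    """Probability of missing a true proportion p_max of common responses."""
    c = critical_count(n, alpha, two_sided)
    power = sum(comb(n, k) * p_max ** k * (1 - p_max) ** (n - k)
                for k in range(c, n + 1))
    return 1 - power


if __name__ == "__main__":
    for n in (12, 14, 24):
        print(n, critical_count(n, 0.05), round(beta_risk(n, 0.05, 0.75), 3))
```

With 14 assessors, α = 0.05, and Pmax = 75 % in a one-sided test, this calculation gives a β-risk of about 0.48; reducing α or Pmax, or switching to a two-sided test, raises the β-risk for the same number of assessors.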

E 2164 – 01 (2007)

8.2 Prior to conducting the test, decide if the test is 8.2.3 When testing for similarity, for example, when the

one-sided or two-sided and select values for a, b, and P . researcher wants to take only a small chance of missing a

max

The following can be considered as general guidelines: difference that is there, the most commonly used values for

8.2.1 One-sided versus two-sided: The test is one-sided if a-risk and b-risk are a = 0.20 and b = 0.05. These values can

only one direction of difference is critical to the findings. For be adjusted on a case-by-case basis to reflect the sensitivity

example, the test is one-sided if the objective is to confirm that desired vs. the number of assessors available. When testing for

the sample with more sugar is sweeter than the sample with similaritywithalimitednumberofassessors,holdthe b-riskat

less sugar. The test is two-sided if both possible directions of a relatively small value and allow the a-risk to increase in

difference are important. For example, the test is two-sided if order to control the risk of missing a difference that is present.

the objective of the test is to determine which of two samples 8.2.4 For P , the proportion of common responses falls

max

is sweeter. into three ranges:

8.2.2 When testing for a difference, for example, when the

P < 55 % represents “small” values;

max

55 %# P # 65 % represents “medium-sized” values; and

researcherwantstotakeonlyasmallchanceofconcludingthat max

P > 65 % represents “large” values.

max

a difference exists when it does not, the most commonly used

values for a-risk and b-risk are a = 0.05 and b = 0.20. These 8.3 Having defined the required sensitivity for the test using

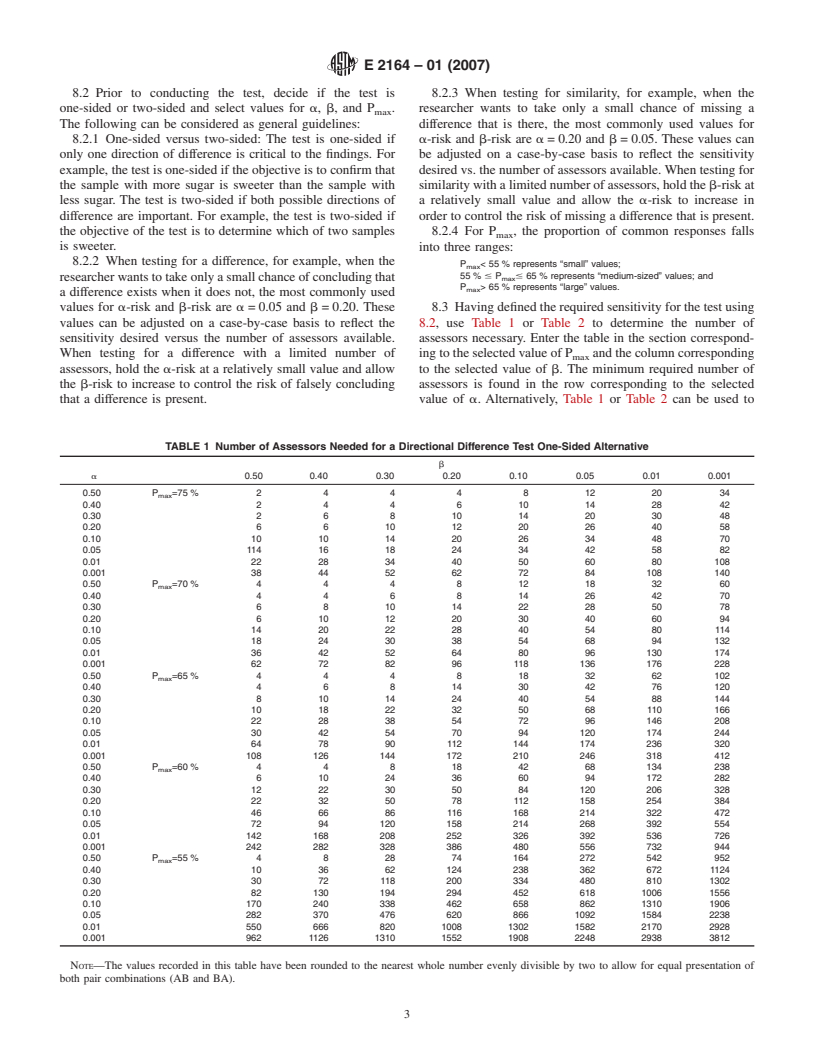

values can be adjusted on a case-by-case basis to reflect the 8.2, use Table 1 or Table 2 to determine the number of

sensitivity desired versus the number of assessors available. assessors necessary. Enter the table in the section correspond-

When testing for a difference with a limited number of ing to the selected value of P and the column corresponding

max

assessors, hold the a-risk at a relatively small value and allow to the selected value of b. The minimum required number of

the b-risk to increase to control the risk of falsely concluding assessors is found in the row corresponding to the selected

that a difference is present. value of a. Alternatively, Table 1 or Table 2 can be used to

TABLE 1 Number of Assessors Needed for a Directional Difference Test One-Sided Alternative

b

a 0.50 0.40 0.30 0.20 0.10 0.05 0.01 0.001

0.50 P =75 % 2 4 4 4 8 12 20 34

max

0.40 2 4 4 6 10 14 28 42

0.30 2 6 810142030 48

0.20 6 6 10 12 20 26 40 58

0.10 10 10 14 20 26 34 48 70

0.05 114 161824344258 82

0.01 22 28 34 40 50 60 80 108

0.001 38 44 52 62 72 84 108 140

0.50 P=70% 4 4 4 8121832 60

max

0.40 4 4 6 8 14 26 42 70

0.30 6 8 10 14 22 28 50 78

0.20 6 101220304060 94

0.10 14 20 22 28 40 54 80 114

0.05 18 24 30 38 54 68 94 132

0.01 36 42 52 64 80 96 130 174

0.001 62 72 82 96 118 136 176 228

0.50 P =65 % 4 4 4 8 18 32 62 102

max

0.40 4 6 8 14 30 42 76 120

0.30 8 101424405488 144

0.20 10 18 22 32 50 68 110 166

0.10 22 28 38 54 72 96 146 208

0.05 30 42 54 70 94 120 174 244

0.01 64 78 90 112 144 174 236 320

0.001 108 126 144 172 210 246 318 412

0.50 P =60 % 4 4 8 18 42 68 134 238

max

0.40 6 10 24 36 60 94 172 282

0.30 12 22 30 50 84 120 206 328

0.20 22 32 50 78 112 158 254 384

0.10 46 66 86 116 168 214 322 472

0.05 72 94 120 158 214 268 392 554

0.01 142 168 208 252 326 392 536 726

0.001 242 282 328 386 480 556 732 944

0.50 P =55 % 4 8 28 74 164 272 542 952

max

0.40 10 36 62 124 238 362 672

...
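For orientation only, the dependence of the number of assessors on α, β, and Pmax can be approximated with a standard normal-approximation formula for a binomial proportion tested against 0.5. This sketch is not the method used to construct Table 1, and its results need not match the tabulated values, which remain the reference. The function name `assessors_needed` and its parameters are assumptions made for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def assessors_needed(alpha: float, beta: float, p_max: float,
                     two_sided: bool = False) -> int:
    """Approximate number of assessors (normal approximation, not Table 1)."""
    if not 0.5 < p_max < 1:
        raise ValueError("p_max must lie between 0.5 and 1")
    if not (0 < alpha <= 0.5 and 0 < beta <= 0.5):
        raise ValueError("alpha and beta must lie in (0, 0.5]")
    z = NormalDist().inv_cdf
    # A two-sided test splits alpha equally between the two directions.
    z_alpha = z(1 - (alpha / 2 if two_sided else alpha))
    z_beta = z(1 - beta)
    # Standard deviation is 0.5 under the null and sqrt(p(1 - p)) under p_max.
    root_n = (z_alpha * 0.5 + z_beta * sqrt(p_max * (1 - p_max))) / (p_max - 0.5)
    return ceil(root_n ** 2)


if __name__ == "__main__":
    print(assessors_needed(0.05, 0.20, 0.75))
    print(assessors_needed(0.05, 0.20, 0.60))
    print(assessors_needed(0.05, 0.20, 0.75, two_sided=True))
```

The approximation shows the same pattern as the table: the required number of assessors grows quickly as Pmax approaches 50 % and as α or β is reduced.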
