ASTM E2737-10

Standard Practice for Digital Detector Array Performance Evaluation and Long-Term Stability

SIGNIFICANCE AND USE

This practice is intended to be used by the NDT using organization to measure the baseline performance of the DDA and to monitor its performance throughout its service as an NDT imaging system.

It is to be understood that the DDA has already been selected and purchased by the user from a manufacturer based on the inspection needs at hand. This practice is not intended to be used as an “acceptance test” of the DDA, but rather to establish a performance baseline that will enable periodic performance tracking while in-service.

Although many of the properties listed in this standard have similar metrics to those found in Practice E2597, data collection methods are not identical, and values acquired with the two standards should not be compared.

This practice defines the tests to be performed and required intervals. Also defined are the methods of tabulating results that DDA users will complete following initial baselining of the DDA system. These tests will also be performed periodically at the stated required intervals to evaluate the DDA system to determine if the system remains within acceptable operational limits as established in this practice or defined between user and customer (CEO).

There are several factors that affect the quality of a DDA image, including spatial resolution, geometrical unsharpness, scatter, signal-to-noise ratio, contrast sensitivity (contrast-to-noise ratio), image lag, and burn-in. Several additional factors and settings (for example, integration time, detector parameters, or imaging software) affect these results. Calibration techniques may also have an impact on image quality. This practice delineates tests for each of the properties listed herein and establishes standard techniques for assuring repeatability throughout the lifecycle testing of the DDA.

SCOPE

1.1 This practice describes the evaluation of DDA systems for industrial radiology. It is intended to ensure that the evaluation of image quality, as far as this is influenced by the DDA system, meets the needs of users and their customers, and enables process control and long-term stability of the DDA system.

1.2 This practice specifies the fundamental parameters of Digital Detector Array (DDA) systems to be measured to determine baseline performance, and to track the long term stability of the DDA system.

1.3 The DDA system performance tests specified in this practice shall be completed upon acceptance of the system from the manufacturer and at intervals specified in this practice to monitor long-term stability of the system. The intent of these tests is to monitor the system performance for degradation and to identify when action needs to be taken because the system has degraded by a certain level.

1.4 The use of the gages provided in this standard is mandatory for each test. In the event these tests or gages are not sufficient, the user, in coordination with the cognizant engineering organization (CEO) may develop additional or modified tests, test objects, gages, or image quality indicators to evaluate the DDA system. Acceptance levels for these ALTERNATE tests shall be determined by agreement between the user, CEO and manufacturer.

1.5 This standard does not purport to address all of the safety concerns, if any, associated with its use. It is the responsibility of the user of this standard to establish appropriate safety and health practices and determine the applicability of regulatory limitations prior to use.


NOTICE: This standard has either been superseded and replaced by a new version or withdrawn.

Contact ASTM International (www.astm.org) for the latest information

Designation: E2737 − 10

Standard Practice for Digital Detector Array Performance Evaluation and Long-Term Stability

This standard is issued under the fixed designation E2737; the number immediately following the designation indicates the year of original adoption or, in the case of revision, the year of last revision. A number in parentheses indicates the year of last reapproval. A superscript epsilon (ε) indicates an editorial change since the last revision or reapproval.

1. Scope 2. Referenced Documents

1.1 This practice describes the evaluation of DDA systems 2.1 ASTM Standards:

for industrial radiology. It is intended to ensure that the E1025 Practice for Design, Manufacture, and Material

evaluation of image quality, as far as this is influenced by the Grouping Classification of Hole-Type Image Quality In-

DDA system, meets the needs of users, and their customers, dicators (IQI) Used for Radiology

and enables process control and long term stability of the DDA E1316 Terminology for Nondestructive Examinations

system. E1742 Practice for Radiographic Examination

E2002 Practice for DeterminingTotal Image Unsharpness in

1.2 This practice specifies the fundamental parameters of

Radiology

Digital Detector Array (DDA) systems to be measured to

E2445 Practice for Performance Evaluation and Long-Term

determine baseline performance, and to track the long term

Stability of Computed Radiography Systems

stability of the DDA system.

E2597 Practice for Manufacturing Characterization of Digi-

1.3 The DDA system performance tests specified in this

tal Detector Arrays

practice shall be completed upon acceptance of the system

E2698 Practice for Radiological Examination Using Digital

fromthemanufacturerandatintervalsspecifiedinthispractice

Detector Arrays

to monitor long term stability of the system.The intent of these

E2736 Guide for Digital Detector Array Radiology

tests is to monitor the system performance for degradation and

3. Terminology

to identify when an action needs to be taken when the system

degrades by a certain level.

3.1 Definitions—the definition of terms relating to gamma

1.4 The use of the gages provided in this standard is andX-radiology,whichappearinTerminologyE1316,Practice

mandatory for each test. In the event these tests or gages are E2597, Guide E2736, and Practice E2698 shall apply to the

not sufficient, the user, in coordination with the cognizant terms used in this practice.

engineering organization (CEO) may develop additional or

3.2 Definitions of Terms Specific to This Standard:

modified tests, test objects, gages, or image quality indicators

3.2.1 digital detector array (DDA) system—an electronic

to evaluate the DDA system. Acceptance levels for these

device that converts ionizing or penetrating radiation into a

ALTERNATE tests shall be determined by agreement between

discrete array of analog signals which are subsequently digi-

the user, CEO and manufacturer.

tized and transferred to a computer for display as a digital

1.5 This standard does not purport to address all of the

image corresponding to the radiologic energy pattern imparted

safety concerns, if any, associated with its use. It is the

upon the input region of the device. The conversion of the

responsibility of the user of this standard to establish appro-

ionizing or penetrating radiation into an electronic signal may

priate safety and health practices and determine the applica-

transpire by first converting the ionizing or penetrating radia-

bility of regulatory limitations prior to use.

tion into visible light through the use of a scintillating material.

1 2

This practice is under the jurisdiction of ASTM Committee E07 on Nonde- For referenced ASTM standards, visit the ASTM website, www.astm.org, or

structive Testing and is the direct responsibility of Subcommittee E07.01 on contact ASTM Customer Service at service@astm.org. For Annual Book of ASTM

Radiology (X and Gamma) Method. Standards volume information, refer to the standard’s Document Summary page on

Current edition approved April 15, 2010. Published June 2010. the ASTM website.

Copyright © ASTM International, 100 Barr Harbor Drive, PO Box C700, West Conshohocken, PA 19428-2959. United States

E2737 − 10

These devices can range in speed from many seconds per 3.2.19 saturationgrayvalue—themaximumpossibleusable

image to many images per second, up to and in excess of gray value of the DDA after offset correction.

real-time radioscopy rates (usually 30 frames per seconds).

NOTE 1—Saturation may occur because of a saturation of the pixel

3.2.2 active DDA area—the active pixelized region of the

itself, the amplifier, or digitizer, where the DDA encounters saturation

gray values as a function of increasing exposure levels.

DDA, which is recommended by the manufacturer as usable.

3.2.20 user—the user and operating organization of the

3.2.3 signal-to-noise ratio (SNR)—quotient of mean value

DDA system.

of the intensity (signal) and standard deviation of the intensity

(noise). The SNR depends on the radiation dose and the DDA

3.2.21 customer—the company, government agency, or

system properties.

other authority responsible for the design, or end user, of the

system or component for which radiologic examination is

3.2.4 contrast-to-noise ratio (CNR)—quotient of the differ-

required, also known as the CEO. In some industries, the

ence of the signal levels between two material thicknesses, and

customer is frequently referred to as the “Prime”.

standard deviation of the intensity (noise) of the base material.

The CNR depends on the radiation dose and the DDA system

3.2.22 manufacturer—DDA system manufacturer, supplier

properties.

for the user of the DDA system.

3.2.5 contrast sensitivity—recognized contrast percentage

4. Significance and Use

of the material to examine. It depends on 1/CNR.

4.1 This practice is intended to be used by the NDT using

3.2.6 spatial resolution (SR)—the spatial resolution indi-

organization to measure the baseline performance of the DDA

cates the smallest geometrical detail, which can be resolved

and to monitor its performance throughout its service as an

using the DDA with given geometrical magnification. It is the

NDT imaging system.

half of the value of the detector unsharpness divided by the

magnification factor of the geometrical setup and is similar to

4.2 It is to be understood that the DDA has already been

the effective pixel size.

selected and purchased by the user from a manufacturer based

3.2.7 material thickness range (MTR)—the wall thickness ontheinspectionneedsathand.Thispracticeisnotintendedto

be used as an “acceptance test” of the DDA, but rather to

range within one image of a DDA, whereby the thinner wall

establish a performance baseline that will enable periodic

thickness does not saturate the DDA and at the thicker wall

performance tracking while in-service.

thickness, the signal is significantly higher than the noise.

3.2.8 frame rate—number of frames acquired per second. 4.3 Although many of the properties listed in this standard

have similar metrics to those found in Practice E2597, data

3.2.9 lag—residual signal in the DDA that occurs shortly

collection methods are not identical, and comparisons among

after detector read-out and erasure.

values acquired with each standard should not be made.

3.2.10 burn-in—change in gain of the scintillator that per-

4.4 This practice defines the tests to be performed and

sists well beyond the exposure.

required intervals. Also defined are the methods of tabulating

3.2.11 bad pixel—a pixel identified with a performance

results that DDAusers will complete following initial baselin-

outside of the specification range for a pixel of a DDA as

ing of the DDA system. These tests will also be performed

defined in Practice E2597.

periodically at the stated required intervals to evaluate the

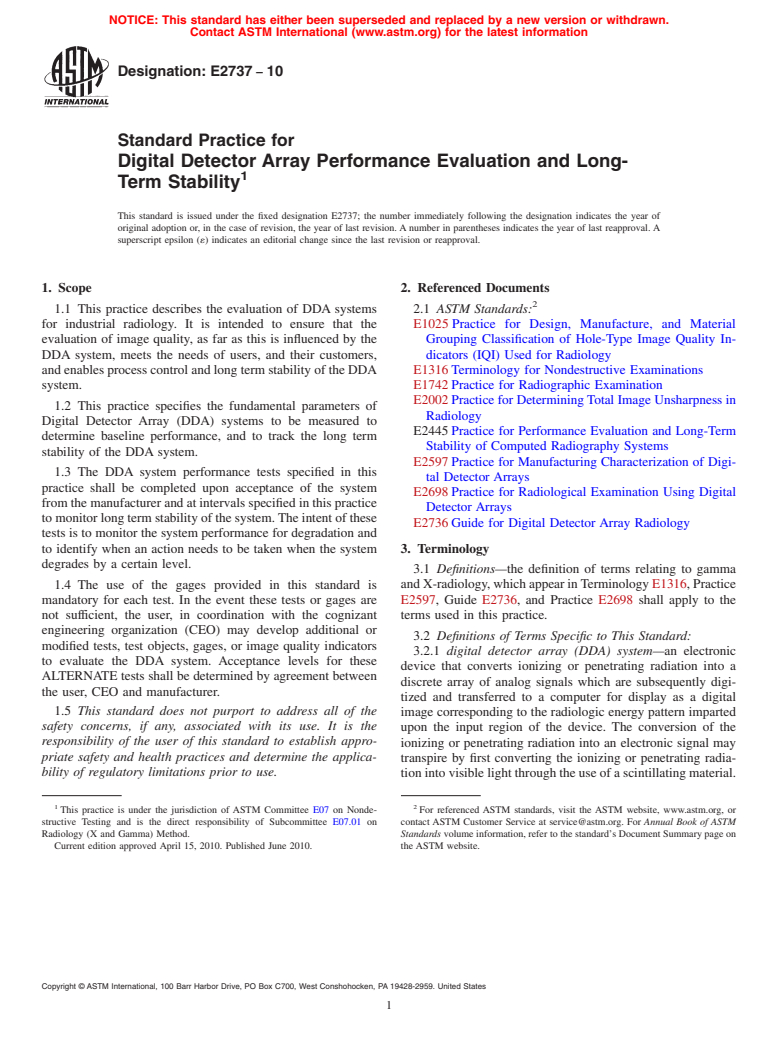

3.2.12 five-groove wedge—a continuous wedge with five

DDA system to determine if the system remains within

long grooves on one side (see Fig. 1).

acceptable operational limits as established in this practice or

3.2.13 phantom—a part or item being used to quantify DDA

defined between user and customer (CEO).

characterization metrics.

4.5 ThereareseveralfactorsthataffectthequalityofaDDA

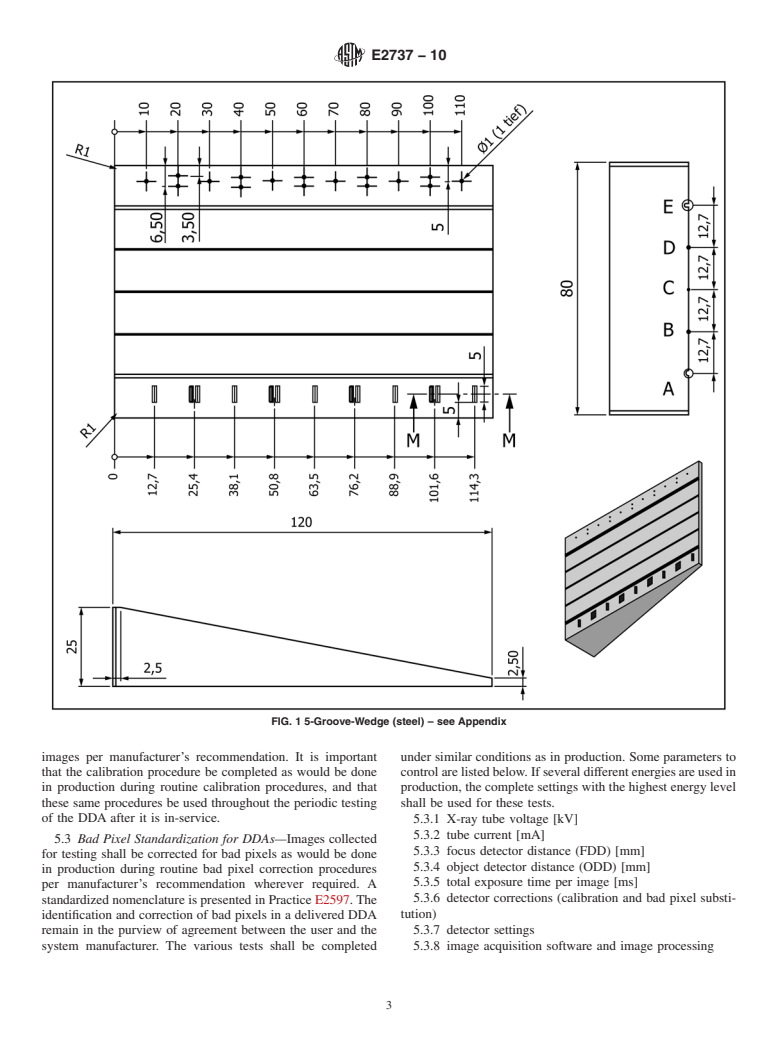

3.2.14 duplex plate phantom—two plates of the same mate-

image including the spatial resolution, geometrical

rial; Plate 2 has same size in x- and half the size in v- direction

unsharpness, scatter, signal to noise ratio, contrast sensitivity

of Plate 1; the thickness of Plate 1 matches the minimum

(contrast/noise ratio), image lag, and burn in.There are several

thickness of the material for inspection; the thickness of Plate

additional factors and settings (for example, integration time,

1 plus Plate 2 matches the maximum thickness of the material

detector parameters or imaging software), which affect these

for inspection (see Fig. 2).

results. Additionally, calibration techniques may also have an

3.2.15 DDA offset image—image of the DDAin the absence

impact on the quality of the image. This practice delineates

of x-rays providing the background signal of all pixels.

tests for each of the properties listed herein and establishes

standard techniques for assuring repeatability throughout the

3.2.16 DDAgain image—image obtained with no structured

lifecycle testing of the DDA.

object in the x-ray beam to calibrate pixel response in a DDA.

3.2.17 calibration—correction applied for the offset signal

5. General Testing Procedures

and the non-uniformity of response of any or all of the X-ray

5.1 The tests performed herein can be completed either by

beam, scintillator, and the read out structure.

the use of the five-groove wedge phantom (see Fig. 1) or with

3.2.18 gray value—the numeric value of a pixel in the DDA

separate IQIs on the Duplex Plate Phantom (see Fig. 2).

image. This is typically interchangeable with the term pixel

value, detector response, Analog-to-Digital unit and detector 5.2 DDA Calibration Method—Prior to testing, the DDA

signal. shall be calibrated for offset and, or gain to generate corrected

E2737 − 10

FIG. 1 5-Groove-Wedge (steel) – see Appendix

images per manufacturer’s recommendation. It is important under similar conditions as in production. Some parameters to

that the calibration procedure be completed as would be done controlarelistedbelow.Ifseveraldifferentenergiesareusedin

in production during routine calibration procedures, and that production, the complete settings with the highest energy level

these same procedures be used throughout the periodic testing shall be used for these tests.

of the DDA after it is in-service. 5.3.1 X-ray tube voltage [kV]

5.3.2 tube current [mA]

5.3 Bad Pixel Standardization for DDAs—Images collected

5.3.3 focus detector distance (FDD) [mm]

for testing shall be corrected for bad pixels as would be done

5.3.4 object detector distance (ODD) [mm]

in production during routine bad pixel correction procedures

5.3.5 total exposure time per image [ms]

per manufacturer’s recommendation wherever required. A

5.3.6 detector corrections (calibration and bad pixel substi-

standardized nomenclature is presented in Practice E2597. The

tution)

identification and correction of bad pixels in a delivered DDA

5.3.7 detector settings

remain in the purview of agreement between the user and the

system manufacturer. The various tests shall be completed

5.3.8 image acquisition software and image processing
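As a rough illustration of how the geometry settings in 5.3.3 and 5.3.4 feed into definition 3.2.6, the sketch below computes the geometric magnification as FDD / (FDD − ODD), the usual focus-to-detector over focus-to-object ratio, and then SR as half the detector unsharpness divided by that magnification. The class and function names are hypothetical, the detector unsharpness is assumed to be known already (its measurement is not covered here), and the numbers are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeometrySettings:
    """Hypothetical record of the geometry parameters listed in 5.3.3 and 5.3.4."""

    fdd_mm: float  # focus detector distance (FDD)
    odd_mm: float  # object detector distance (ODD)

    @property
    def magnification(self) -> float:
        """Geometric magnification: FDD over the focus-to-object distance (FDD - ODD)."""
        fod_mm = self.fdd_mm - self.odd_mm
        if fod_mm <= 0:
            raise ValueError("ODD must be smaller than FDD")
        return self.fdd_mm / fod_mm


def spatial_resolution_um(detector_unsharpness_um: float, settings: GeometrySettings) -> float:
    """SR as worded in 3.2.6: half the detector unsharpness divided by the magnification."""
    return (detector_unsharpness_um / 2.0) / settings.magnification


# Illustrative numbers only: FDD 1000 mm, ODD 200 mm, detector unsharpness 200 µm.
setup = GeometrySettings(fdd_mm=1000.0, odd_mm=200.0)
print(setup.magnification)                   # 1.25
print(spatial_resolution_um(200.0, setup))   # 80.0
```

Keeping FDD and ODD in one frozen record makes it easy to confirm that a periodic test reuses the same geometry as the baseline, which matters because SR scales inversely with magnification.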


FIG. 2 Duplex Plate Phantom with IQIs positioned: one ASTM E1025 or E1742 penetrameter on each plate and one ASTM E2002 Duplex Wire IQI on the thinner plate. The boxes ROI 1 to ROI 4 are for evaluation of signal level and SNR.
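A minimal sketch of how signal level, SNR (3.2.3) and CNR (3.2.4) might be computed from rectangular ROIs such as ROI 1 to ROI 4, assuming a calibrated 2-D image held in a NumPy array. Several choices here are assumptions rather than requirements of this practice: the ROI coordinates, treating the thin-plate ROI as the "base material" for CNR, using the sample standard deviation (ddof=1), and the min_snr threshold in the MTR check (3.2.7 only says the signal must be "significantly higher than the noise"). The synthetic image is illustrative.

```python
from typing import Tuple

import numpy as np

# Hypothetical ROI format: (row_start, row_stop, col_start, col_stop) in pixels.
Box = Tuple[int, int, int, int]


def roi_values(image: np.ndarray, box: Box) -> np.ndarray:
    """Gray values inside one rectangular ROI, as floats."""
    r0, r1, c0, c1 = box
    return np.asarray(image, dtype=float)[r0:r1, c0:c1]


def signal_level(image: np.ndarray, box: Box) -> float:
    """Mean gray value in the ROI."""
    return float(roi_values(image, box).mean())


def snr(image: np.ndarray, box: Box) -> float:
    """3.2.3: mean intensity divided by the standard deviation of the intensity."""
    values = roi_values(image, box)
    return float(values.mean() / values.std(ddof=1))


def cnr(image: np.ndarray, base_box: Box, other_box: Box) -> float:
    """3.2.4: signal difference between two thicknesses divided by the noise
    (standard deviation) of the base material."""
    base = roi_values(image, base_box)
    other = roi_values(image, other_box)
    return float(abs(base.mean() - other.mean()) / base.std(ddof=1))


def mtr_covered(image: np.ndarray, thin_box: Box, thick_box: Box,
                saturation_gray_value: float, min_snr: float) -> bool:
    """3.2.7 on the duplex plate: the thin ROI stays below saturation and the
    thick ROI keeps an SNR of at least min_snr (a hypothetical threshold)."""
    thin_ok = roi_values(image, thin_box).max() < saturation_gray_value
    thick_ok = snr(image, thick_box) >= min_snr
    return bool(thin_ok and thick_ok)


# Synthetic image: brighter thin-plate half, darker thick-plate half.
thin = np.tile([99.0, 101.0], (4, 2))
thick = np.tile([49.0, 51.0], (4, 2))
image = np.hstack([thin, thick])
thin_roi, thick_roi = (0, 4, 0, 4), (0, 4, 4, 8)

print(round(snr(image, thin_roi), 1))              # 96.8
print(round(cnr(image, thin_roi, thick_roi), 1))   # 48.4
print(mtr_covered(image, thin_roi, thick_roi, saturation_gray_value=65535, min_snr=10.0))  # True
```

Because Plate 1 matches the minimum and Plate 1 plus Plate 2 the maximum inspection thickness, checking saturation on the thin ROI and SNR on the thick ROI of the same image is one way to confirm the required thickness range fits within a single exposure.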

6. Application of Baseline Tests and Test Methods

6.1 DDA System Baseline Performance Tests

6.1.1 The user shall accept the DDA system based on the manufacturer’s results of Practice E2597 on the specific detector, as provided in a data sheet for that serialized DDA, or on another acceptance test agreed to between the user and manufacturer (not covered in this practice). The user baselines the DDA using the tests defined in Table 1. Additional tests are to be defined in agreement between the CEO and the using organization in terms of the specific tests to perform, how the data is presented, and the frequency of testing. This approach does the following:

6.1.1.1 provides a quantitative baseline of performance,
6.1.1.2 provides results in a defined form that can be reviewed by the CEO, and
6.1.1.3 offers a means to perform process checking of performance on a continuing basis.
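The process checking in 6.1.1.3 amounts to comparing each periodic result with the stored baseline. The sketch below assumes each parameter has an agreed absolute tolerance in its own unit; the actual acceptance values and tolerances are left by 6.1.2 to agreement between the CEO and the using organization, so the function name, parameter names and numbers here are hypothetical.

```python
def process_check(baseline, current, tolerance):
    """Compare periodic results with the stored baseline.

    baseline, current: parameter name -> measured value (same unit for both).
    tolerance: parameter name -> largest allowed absolute deviation.
    Returns parameter name -> True when the deviation is within tolerance.
    """
    results = {}
    for name, base_value in baseline.items():
        if name not in current:
            raise KeyError(f"no current result for {name!r}")
        deviation = abs(current[name] - base_value)
        results[name] = deviation <= tolerance[name]
    return results


# Hypothetical values: baseline, a later periodic check, and agreed tolerances.
baseline = {"SNR": 250.0, "SR_um": 80.0}
current = {"SNR": 238.0, "SR_um": 95.0}
tolerance = {"SNR": 25.0, "SR_um": 10.0}
print(process_check(baseline, current, tolerance))  # {'SNR': True, 'SR_um': False}
```

An absolute tolerance avoids dividing by a baseline that may legitimately be zero (for example, a lag or burn-in value); a relative tolerance would work equally well if that is what the parties agree on.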


TABLE 1 System Performance Tests and Process Check of the DDA System

System Performance Test System Performance Test Process Check

Parameter | Unit | Baseline | Software Update | Tube Change | Detector Change/Repair | Short Version | Long Version | Usage of Five-Hole Wedge | Duplex Plate Phantom

Spatial Resolution SR µm x xxxx xx

Contrast Sensitivity CS % x x xxxx xx

Material Thickness Range MTR mm x x xxxx xx

Signal to Noise Ratio SNR x x xxxx xx

Signal Level SL x x x x x x x

Image Lag Lag % x x x

Burn In BI % x x x x x

Offset Level OL x x x x x

Bad Pixel Distribution x x xxxx
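For the Bad Pixel Distribution row of Table 1, one simple way to track anomalous pixels over time is to compare boolean bad-pixel maps from the baseline and from the current check. This sketch assumes the maps have already been produced by some classification (for example per Practice E2597, which it does not implement); the function name, the 2 × 2 tiling used to summarize the spatial distribution, and the example maps are hypothetical.

```python
import numpy as np


def bad_pixel_summary(baseline_mask, current_mask, tiles=(2, 2)):
    """Summarize a boolean bad-pixel map against the baseline map.

    Returns the total count, the number of pixels that are bad now but were
    not bad at baseline, the bad-pixel fraction, and per-tile counts as a
    coarse picture of the spatial distribution.
    """
    base = np.asarray(baseline_mask, dtype=bool)
    cur = np.asarray(current_mask, dtype=bool)
    if base.shape != cur.shape:
        raise ValueError("baseline and current maps must have the same shape")

    new_bad = cur & ~base  # bad now, good at baseline

    # Split row and column indices into roughly equal tiles.
    row_groups = np.array_split(np.arange(cur.shape[0]), tiles[0])
    col_groups = np.array_split(np.arange(cur.shape[1]), tiles[1])
    per_tile = [
        [int(cur[np.ix_(rows, cols)].sum()) for cols in col_groups]
        for rows in row_groups
    ]

    return {
        "total": int(cur.sum()),
        "new": int(new_bad.sum()),
        "fraction": float(cur.mean()),
        "per_tile": per_tile,
    }


# Hypothetical 4 x 4 maps: one bad pixel at baseline, two more found later.
baseline_mask = np.zeros((4, 4), dtype=bool)
baseline_mask[0, 0] = True
current_mask = baseline_mask.copy()
current_mask[0, 1] = True
current_mask[3, 3] = True
print(bad_pixel_summary(baseline_mask, current_mask))
# {'total': 3, 'new': 2, 'fraction': 0.1875, 'per_tile': [[2, 0], [0, 1]]}
```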

6.1.2 Acceptance values, and tolerances thereof, obtained from these tests shall also be in agreement between the CEO and the using organization.

6.1.3 Acceptance levels for individual bad pixels, bad clusters, relevant bad clusters, and bad lines, and their statistical distribution within the DDA, as well as proximity to said anomalies, are to be determined by agreement between the user and the CEO. The user and/or CEO may refer to the Guide for DDAs (E2736) and Practice E2597, as well as consult with the manufacturer, on how the prevalence of these anomalous pixels might impact a specific application. This practice does not set limits, but does offer a means for tracking such anomalous pixels in the table templates provided herein.

6.1.4 Given that the other elements of the DDA system are within their tolerances incl...

... performance of the system. The time interval depends on the degree of usage of the system and shall be defined by the user with consideration of the DDA system manufacturer’s information. There may be two versions of the long-term stability tests: the complete program and a short version. The intervals for the performance checks shall not exceed ten days. The check for bad pixels shall be done daily. Details shall be agreed upon between the customer and the user.

7. Apparatus

7.1 The tests described in Table 1 and in Section 6 require the usage of either the five-groove wedge (see Fig. 1) or the Duplex Plate Phantom with separate IQIs: an E2002 Duplex Wire IQI and proper E1025 or E1742 penetrameters (see Fig. 2).
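The interval requirements stated just before Section 7 (performance checks no more than ten days apart, bad pixel check daily) can be tracked with a small helper like the one below. It is a sketch under assumptions: the check names are hypothetical, and a check is treated as due once the elapsed days reach the maximum interval, so a ten-day check last done ten days ago must be done today.

```python
from datetime import date

# Maximum intervals in days taken from the text: performance checks at most
# ten days apart, bad pixel check daily. The dictionary keys are hypothetical names.
MAX_INTERVAL_DAYS = {"performance_check": 10, "bad_pixel_check": 1}


def checks_due(last_done, today):
    """Return the checks that must be run today.

    last_done maps a check name to the date it was last performed; a check
    that was never performed is always due. A check is treated as due once
    the elapsed days reach its maximum interval.
    """
    due = []
    for check, max_days in MAX_INTERVAL_DAYS.items():
        last = last_done.get(check)
        if last is None or (today - last).days >= max_days:
            due.append(check)
    return due


last_done = {"performance_check": date(2024, 1, 1), "bad_pixel_check": date(2024, 1, 10)}
print(checks_due(last_done, date(2024, 1, 10)))  # []
print(checks_due(last_done, date(2024, 1, 11)))  # ['performance_check', 'bad_pixel_check']
```

The actual interval for a given installation depends on usage and is set by the user, so the ten-day value acts only as an upper bound in this sketch.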

...
